Understanding the Link Between Body Movement and Visual Perception

The study of human visual perception through egocentric views is crucial for developing intelligent systems capable of understanding and interacting with their environment. This area emphasizes how movements of the human body, ranging from locomotion to arm manipulation, shape what is seen from a first-person perspective. Understanding this relationship is essential for enabling machines and robots to plan and act with a human-like sense of visual anticipation, particularly in real-world scenarios where visibility is dynamically influenced by physical motion.

Challenges in Modeling Physically Grounded Perception

A major hurdle in this domain is teaching systems how body actions affect perception. Actions such as turning or bending change what is visible in subtle and often delayed ways. Capturing this requires more than simply predicting what comes next in a video: it involves linking physical movements to the resulting changes in visual input. Without the ability to interpret and simulate these changes, embodied agents struggle to plan or interact effectively in dynamic environments.

Limitations of Prior Models and the Need for Physical Grounding

Until now, tools designed to predict video from human actions have been limited in scope. Models have often used low-dimensional input, such as velocity or head direction, and ignored the complexity of whole-body motion. These simplified approaches miss the fine-grained control and coordination required to simulate human actions accurately. Even in video generation models, body motion has usually been treated as the output rather than the driver of prediction. This lack of physical grounding has restricted the usefulness of these models for real-world planning.

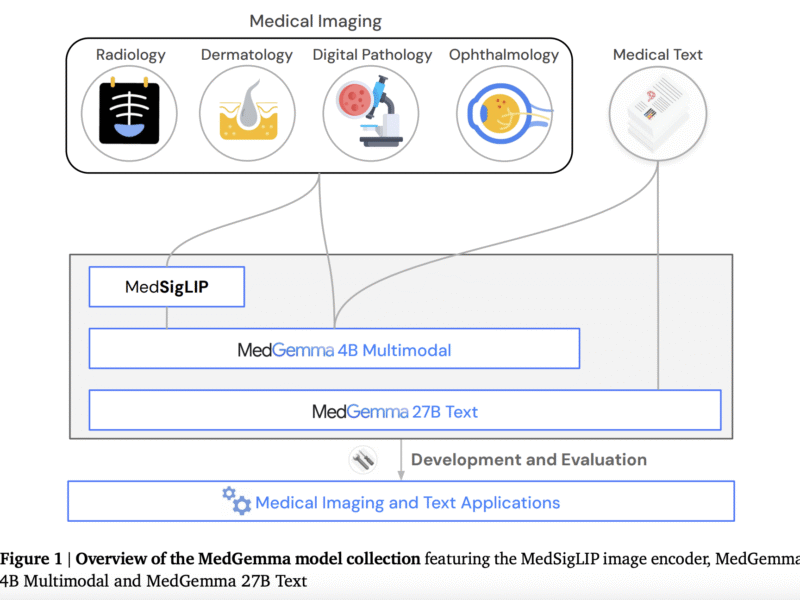

Introducing PEVA: Predicting Egocentric Video from Action

Researchers from UC Berkeley, Meta’s FAIR, and New York University introduced a new framework called PEVA to overcome these limitations. The model predicts future egocentric video frames from structured full-body motion data derived from 3D body pose trajectories. PEVA aims to show how whole-body movements influence what a person sees, thereby grounding the connection between action and perception. The researchers used a conditional diffusion transformer to learn this mapping and trained it on Nymeria, a large dataset of real-world egocentric videos synchronized with full-body motion capture.

Structured Action Representation and Model Architecture

The foundation of PEVA is a highly structured action representation. Each action input is a 48-dimensional vector that combines the root translation with joint-level rotations for 15 upper-body joints in 3D space. This vector is normalized and transformed into a local coordinate frame centered at the pelvis to remove positional bias. By using this comprehensive representation of body dynamics, the model captures the continuous and nuanced nature of real motion. PEVA is designed as an autoregressive diffusion model: a video encoder converts frames into latent state representations, and the model predicts subsequent frames from prior states and body actions. To support long-term video generation, the system introduces random time-skips during training, allowing it to learn from both immediate and delayed visual consequences of motion.
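To make the action representation and the time-skip idea more concrete, here is a minimal Python sketch. It is not the authors' code. It assumes the 48 dimensions split into 3 translation values plus 3 rotation values for each of the 15 joints, that "pelvis-local" means removing only the pelvis yaw, and that normalization is a simple division by a fixed scale. All function names and the `max_step`, `max_angle`, and `max_skip` parameters are hypothetical.

```python
import math
import random

NUM_JOINTS = 15                   # upper-body joints, as stated in the article
ACTION_DIM = 3 + NUM_JOINTS * 3   # assumed split: 3 translation + 15 * 3 rotation values


def to_pelvis_frame(delta_world, pelvis_yaw):
    """Rotate a world-frame displacement (dx, dy, dz) into a pelvis-local frame.

    Assumption: only yaw (rotation about the vertical z axis) is removed.
    """
    dx, dy, dz = delta_world
    c, s = math.cos(-pelvis_yaw), math.sin(-pelvis_yaw)
    return (c * dx - s * dy, s * dx + c * dy, dz)


def make_action_vector(delta_world, pelvis_yaw, joint_rotations,
                       max_step=1.0, max_angle=math.pi):
    """Flatten root translation and per-joint rotations into one scaled vector."""
    if len(joint_rotations) != NUM_JOINTS:
        raise ValueError(f"expected {NUM_JOINTS} joints, got {len(joint_rotations)}")
    # Root translation, expressed relative to the pelvis and scaled by max_step.
    vec = [v / max_step for v in to_pelvis_frame(delta_world, pelvis_yaw)]
    # Three rotation angles per joint (radians), scaled by max_angle.
    for rot in joint_rotations:
        if len(rot) != 3:
            raise ValueError("each joint needs three rotation values")
        vec.extend(angle / max_angle for angle in rot)
    return vec


def sample_time_skip_pair(num_frames, max_skip, rng=random):
    """Pick (context_index, target_index) separated by a random gap of 1..max_skip."""
    if num_frames < 2 or max_skip < 1:
        raise ValueError("need at least two frames and max_skip >= 1")
    skip = rng.randint(1, min(max_skip, num_frames - 1))  # inclusive bounds
    start = rng.randrange(num_frames - skip)              # keeps the target in range
    return start, start + skip


action = make_action_vector((1.0, 0.0, 0.0), math.pi / 2,
                            [(0.0, 0.0, 0.0)] * NUM_JOINTS)
print(len(action))  # 48
```

Sampling the gap at random means some training pairs are adjacent frames while others are several frames apart, which is one simple way a model could be exposed to both immediate and delayed consequences of a movement.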

Performance Evaluation and Results

PEVA was evaluated on several metrics that test both short-term and long-term video prediction. The model generated visually consistent and semantically accurate video frames over extended periods. For short-term predictions, evaluated at 2-second intervals, it achieved lower LPIPS scores and higher DreamSim consistency than the baselines, indicating better perceptual quality. The evaluation also decomposed human movement into atomic actions, such as arm movements and body rotations, to assess fine-grained control. Furthermore, the model was tested on extended rollouts of up to 16 seconds, where it simulated delayed outcomes while maintaining sequence coherence. These experiments indicated that incorporating full-body control led to substantial improvements in video realism and controllability.
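The long-rollout evaluation can be pictured with a small Python sketch. This is an illustration under stated assumptions, not the paper's evaluation code: states are short lists of floats standing in for latent frames, `toy_predict` is a hypothetical stand-in for the model, and mean absolute difference replaces perceptual metrics such as LPIPS or DreamSim.

```python
def rollout(predict_next, context, actions, context_len):
    """Autoregressively generate one state per action, feeding predictions back in."""
    history = list(context)
    outputs = []
    for action in actions:
        window = history[-context_len:]    # most recent states only
        nxt = predict_next(window, action)
        outputs.append(nxt)
        history.append(nxt)                # the prediction becomes future context
    return outputs


def per_step_error(predicted, reference):
    """Mean absolute difference per step (a stand-in for a perceptual metric)."""
    return [sum(abs(p - r) for p, r in zip(ps, rs)) / len(ps)
            for ps, rs in zip(predicted, reference)]


def toy_predict(window, action):
    """Hypothetical model: shifts the last state by the action, with a small bias."""
    return [x + action + 0.01 for x in window[-1]]


def true_step(window, action):
    """Hypothetical ground-truth dynamics: the same shift without the bias."""
    return [x + action for x in window[-1]]


start = [[0.0, 0.0]]
actions = [0.1] * 16                       # 16 steps, an arbitrary horizon
predicted = rollout(toy_predict, start, actions, context_len=1)
reference = rollout(true_step, start, actions, context_len=1)
errors = per_step_error(predicted, reference)
print(errors[0] < errors[-1])  # True
```

Because each prediction is fed back as context, the small per-step bias in the toy model accumulates, which is why reporting the error at several horizons is more informative than a single average.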

Conclusion: Toward Physically Grounded Embodied Intelligence

This research marks a significant advance in predicting future egocentric video by grounding the model in physical human movement. It addresses the problem of linking whole-body action to visual outcomes by combining structured pose representations with diffusion-based learning. The approach offers a promising direction for embodied AI systems that require accurate, physically grounded foresight.

Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in Material Science, he is exploring new advancements and creating opportunities to contribute.