Today, C‑Gen.AI came out of stealth mode to introduce an infrastructure platform that the company said addresses a problem undermining AI’s potential: the inefficiency and rigidity of current AI infrastructure stacks.

“Priced out by spiraling cloud costs, plagued by low GPU utilization, and hamstrung by vendor lock‑in, AI teams have been limited by inefficient infrastructure and are now empowered by the C‑Gen.AI GPU orchestration platform,” the company said.

C‑Gen.AI was founded by Sami Kama, company CEO and a veteran technologist whose career spans CERN, NVIDIA, and AWS, where he led critical innovations in AI performance optimization, distributed training, and global technology deployments. His expertise, from silicon to cloud-scale systems, forms the foundation of C‑Gen.AI’s mission to eliminate structural inefficiencies that inhibit AI infrastructure. Capitalized with $3.5 million in venture-backed funding from leading infrastructure and AI-centered investors, C‑Gen.AI emerges from stealth to challenge the status quo and accelerate AI readiness across startups, data centers, and global enterprises.

According to Gartner, worldwide spending on generative AI is forecast to reach $644 billion in 2025, up from $124 billion in 2023, as organizations accelerate investment across infrastructure, tools, and services. But with this growth comes risk. Gartner also warns that many AI projects will stall or fail due to cost overruns, complexity, and mounting technical debt. This growing gap between AI ambition and operational readiness highlights the need for infrastructure that can scale intelligently, adapt quickly, and avoid locking teams into brittle, expensive stacks.

“We’re operating in a system built for yesterday’s workloads, not today’s AI,” said Sami Kama, CEO of C‑Gen.AI. “GPU investments sit idle, deployments drag on, and costs balloon. We emerged from stealth because the infrastructure layer is where most AI projects quietly break down. It’s not just about access to GPUs. It’s about the inability to deploy fast enough, the waste that happens between workloads, and the rigidity that locks teams into environments they can’t afford to scale.”

“If we want enterprise AI to deliver real results, we must fix the foundation it runs on. That’s the value proposition C‑Gen.AI delivers. It’s AI without pain, without waste, at scale.”

Three markets, one platform

- AI Startups – Struggling with high cloud bills, slow provisioning, and an inability to monetize models quickly, startups need infrastructure that adapts and scales, without spending cycles rebuilding stacks.

- Data Center Operators – Many data centers struggle to compete with the “big three” cloud providers, as customers prefer their familiar, fully managed AI services. C‑Gen.AI solves this by managing all AI workload complexities regardless of whether these are hosted in a hyperscaler or a remote data center, eliminating vendor lock-in and enabling data centers to monetize idle GPU time through inference cycles. This unlocks new revenue and helps smaller data centers deliver competitive AI solutions and become AI foundries.

- Enterprises – Facing compliance, security, and performance pressures, enterprises demand private AI environments that scale without creating siloed toolchains or risk exposure.

“This isn’t about ripping out existing investments, it’s about making them work harder and deriving the value that has been sitting locked behind inefficient systems and underutilized infrastructure,” added Kama. “Our platform lets GPU operators monetize unused capacity and gives end users flexibility without locking them in.”

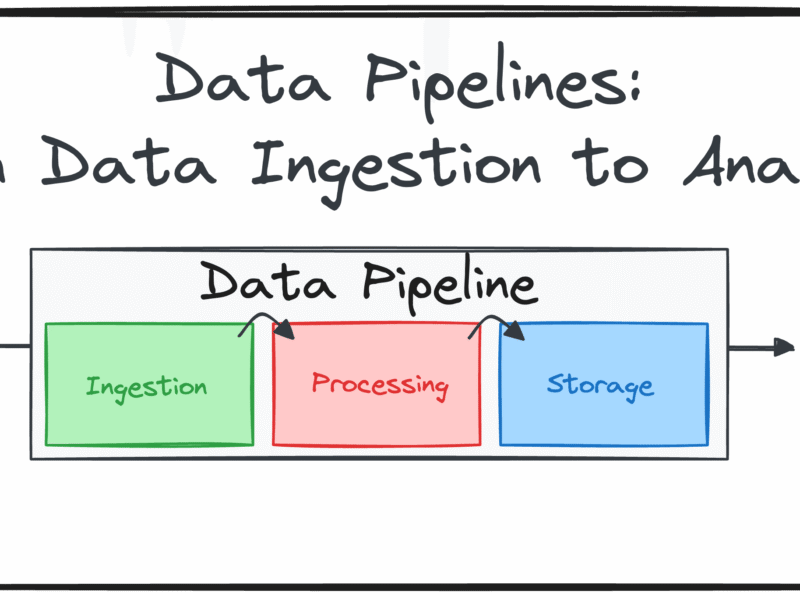

C‑Gen.AI is a software layer that runs atop existing GPU infrastructure and turns an organization’s GPU instances into AI supercomputers, whether public, private, or hybrid. Featuring automated cluster deployment, real‑time scaling, and GPU reuse across training and inference, the platform addresses performance and operational issues head-on by aligning infrastructure with the unique requirements of AI workloads. As these workloads become more unpredictable and compute-intensive, C‑Gen.AI tunes and optimizes infrastructure to meet changing requirements. As a result, users benefit from faster AI deployments with a lower total cost of ownership.