Today, OpenAI released GPT-5, their most advanced language model to date, and you can start using it immediately on Databricks.

GPT-5 unlocks new frontiers in reasoning, multimodal understanding, and task execution. To help you realize its full potential from day one, Databricks provides the foundation enterprises need: secure access, built-in compliance, and cost governance at scale.

That’s where Mosaic AI Gateway comes in. It’s the centralized control plane for AI that lets you adopt new models like GPT-5 with confidence. AI Gateway unifies access, monitoring, and security for all AI usage, whether you’re calling GPT-5, gpt-oss, Meta’s Llama, Amazon Bedrock models, or others. To date, thousands of customers have used its routing and observability features to take LLMs to production.

How to use GPT-5 on Databricks

Use GPT-5 with confidence, right from your Databricks environment. With AI Gateway, GPT-5 works out of the box; there’s no need to re-architect or build custom integrations.

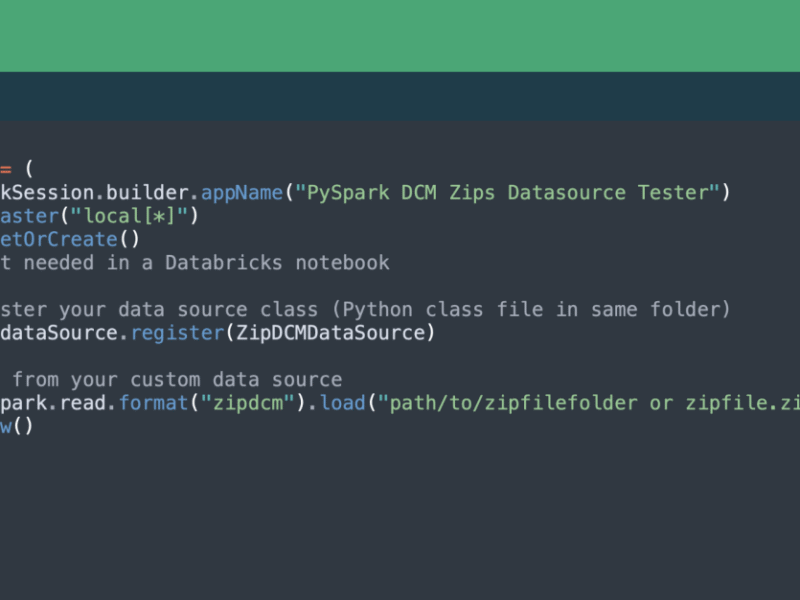

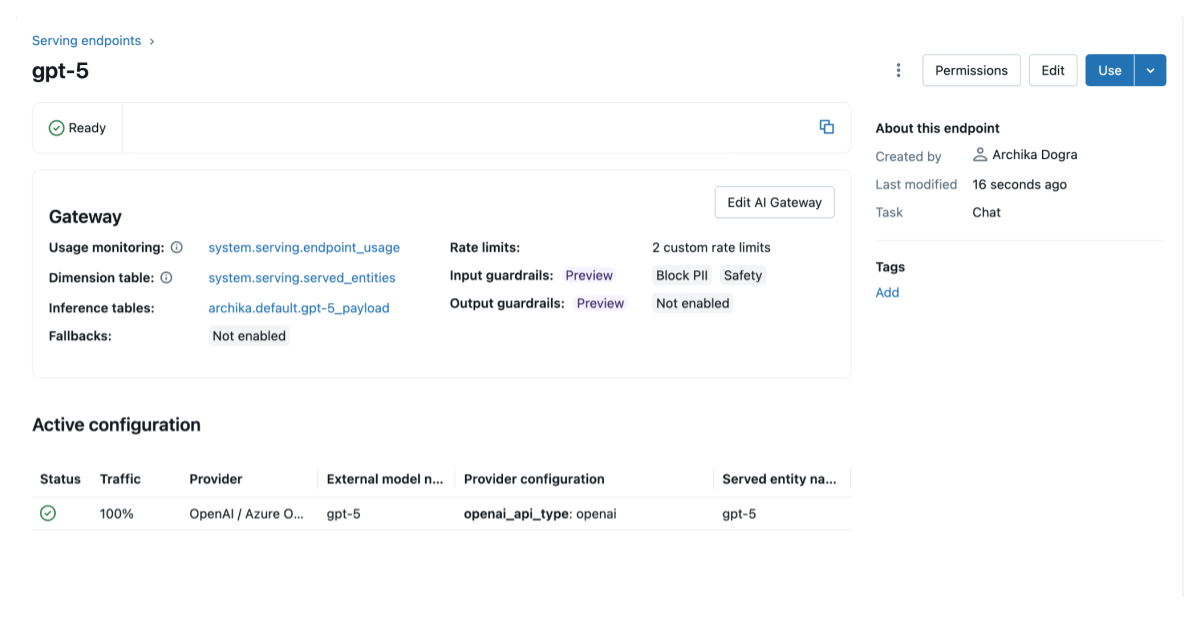

Getting started with GPT-5 on Databricks takes just a few steps. Because AI Gateway supports external models like GPT-5 natively, no complex integration is required. Create a model serving endpoint using the OpenAI or Azure OpenAI provider, store your API keys in Databricks Secrets, and share access to the endpoint securely. Developers can then query it with the standard OpenAI SDK or REST API.
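The sketch below illustrates the first two steps. It builds a request body for the serving-endpoints REST API that registers GPT-5 as an external model behind the OpenAI provider. The API key is referenced from Databricks Secrets rather than pasted inline.

Several details are placeholders or assumptions:

- The endpoint name, secret scope, and key name are placeholders.
- The field names reflect our reading of the external-models API, so check them against the current API reference.
- The request is only constructed, not sent.

```python
import json
import urllib.request


def secret_ref(scope: str, key: str) -> str:
    # Secret reference string; Databricks resolves it server-side, so the raw key stays out of the config.
    return "{{secrets/" + scope + "/" + key + "}}"


def gpt5_endpoint_payload(endpoint_name: str, scope: str, key: str) -> dict:
    """Request body for a serving endpoint backed by OpenAI's GPT-5 (field names are assumptions)."""
    return {
        "name": endpoint_name,
        "config": {
            "served_entities": [
                {
                    "name": "gpt-5",
                    "external_model": {
                        "name": "gpt-5",  # provider-side model name (assumption)
                        "provider": "openai",  # Azure OpenAI needs extra fields not shown here
                        "task": "llm/v1/chat",
                        "openai_config": {"openai_api_key": secret_ref(scope, key)},
                    },
                }
            ]
        },
    }


def build_create_request(host: str, token: str, payload: dict) -> urllib.request.Request:
    """Prepare (but do not send) a POST to the serving-endpoints API."""
    return urllib.request.Request(
        url=host.rstrip("/") + "/api/2.0/serving-endpoints",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder workspace URL, token, and secret names; replace with your own.
    payload = gpt5_endpoint_payload("openai-gpt-5", "llm_keys", "openai_api_key")
    req = build_create_request("https://example.cloud.databricks.com", "<token>", payload)
    print(req.get_method(), req.full_url)
```

Once the endpoint is ready, the standard OpenAI client can query it with three settings:

- `base_url` set to the workspace URL followed by `/serving-endpoints`.
- A Databricks token as `api_key`.
- The endpoint name as `model`.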

This lets you run GPT-5 directly from your Databricks environment, governed, observable, and production-ready from day one.

Centralized governance without slowing innovation

As AI adoption scales, platform teams need proactive governance that doesn’t bottleneck developers. AI Gateway delivers centralized controls that work across any AI model and provider:

- Secure access controls ensure only authorized teams reach specific models.

- User-defined rate limiting allocates throughput and controls costs based on business and use case priorities.

- Built-in guardrails detect PII and apply safety filters consistently, regardless of which LLM provider you’re using.
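The three controls above are configured per endpoint. The sketch below shows how they might be expressed as request bodies:

- One body holds the AI Gateway settings: a per-user rate limit, PII and safety guardrails, and usage tracking.
- The other grants a group permission to query the endpoint.

The helper names are hypothetical. The field names and values (`rate_limits`, `guardrails`, `usage_tracking_config`, `CAN_QUERY`) are our assumption of the current API shape, so confirm them in the Databricks REST API reference before relying on them.

```python
import json


def ai_gateway_config(calls_per_minute: int, block_pii: bool = True) -> dict:
    """Hypothetical helper: AI Gateway settings body (field names are assumptions)."""
    pii_behavior = "BLOCK" if block_pii else "NONE"
    return {
        # Per-user request cap, renewed every minute.
        "rate_limits": [
            {"calls": calls_per_minute, "key": "user", "renewal_period": "minute"}
        ],
        # Guardrails are attached to the endpoint, so they apply whatever provider serves it.
        "guardrails": {
            "input": {"safety": True, "pii": {"behavior": pii_behavior}},
            "output": {"pii": {"behavior": pii_behavior}},
        },
        # Record per-request token counts for usage reporting.
        "usage_tracking_config": {"enabled": True},
    }


def query_permission(group: str) -> dict:
    """Hypothetical helper: access-control entry letting one group query the endpoint."""
    return {"access_control_list": [{"group_name": group, "permission_level": "CAN_QUERY"}]}


if __name__ == "__main__":
    print(json.dumps(ai_gateway_config(100), indent=2))
    print(json.dumps(query_permission("ml-team"), indent=2))
```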

The result: teams get secure, scalable access to cutting-edge models like GPT-5 without compromising on governance.

Built-in routing for production reliability

As teams operationalize powerful new models like GPT-5 and gpt-oss, reliability becomes critical. AI Gateway includes robust traffic management that keeps your applications online, even when model providers hit rate limits or outages:

- Load balancing distributes requests across providers and regions.

- Automated fallbacks maintain service continuity when individual providers experience issues.

Whether you’re routing requests to GPT-5, gpt-oss, or another model, AI Gateway ensures production-grade uptime and resilience across models.
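To make the two routing behaviors concrete, here is a client-side sketch of weighted load balancing with ordered fallback. It is not AI Gateway's implementation, which runs server-side and is configured on the endpoint. The `ProviderError` exception and the stub provider functions are hypothetical stand-ins for a rate-limit or outage response.

```python
import random
from typing import Callable, List, Sequence, Tuple


class ProviderError(Exception):
    """Hypothetical error a provider call raises on a rate limit or outage."""


# (target name, traffic weight, function that sends the prompt to that target)
Target = Tuple[str, int, Callable[[str], str]]


def route_with_fallback(prompt: str, targets: Sequence[Target], rng: random.Random) -> Tuple[str, str]:
    """Send prompt to a weighted-random primary target, then fall back to the rest in list order."""
    weights = [weight for _, weight, _ in targets]
    # Load balancing: pick the primary in proportion to its traffic weight.
    primary = rng.choices(range(len(targets)), weights=weights, k=1)[0]
    # Fallback order: primary first, then every other target as listed.
    order = [primary] + [i for i in range(len(targets)) if i != primary]
    errors: List[str] = []
    for i in order:
        name, _, call = targets[i]
        try:
            return name, call(prompt)
        except ProviderError as exc:
            errors.append(f"{name}: {exc}")
    raise ProviderError("all targets failed: " + "; ".join(errors))


if __name__ == "__main__":
    def rate_limited(prompt: str) -> str:
        raise ProviderError("429 rate limit")

    def healthy(prompt: str) -> str:
        return f"answer to {prompt!r}"

    targets = [("gpt-5", 80, rate_limited), ("gpt-oss", 20, healthy)]
    print(route_with_fallback("hello", targets, random.Random(0)))
```

Weighting lets you shift a share of traffic to a new model gradually. The fallback loop keeps one provider's failure from surfacing to callers.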

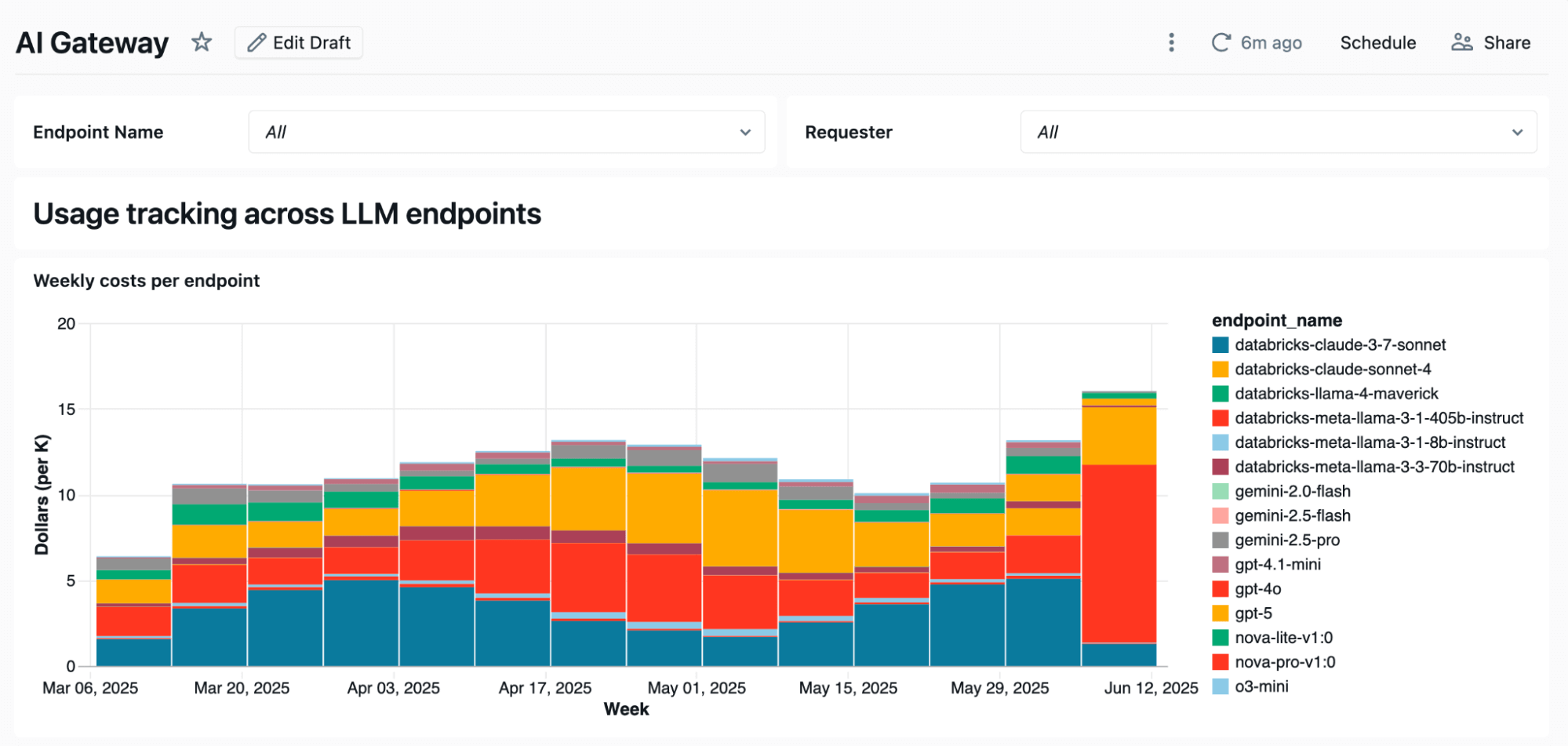

Usage, compliance, and quality monitoring at scale

You can’t govern what you can’t see. AI Gateway captures detailed telemetry (token counts, users, and model versions) in centralized Unity Catalog tables, giving you a clear view of usage and costs across teams.
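With usage recorded, per-team cost reporting is mostly aggregation. The sketch below rolls usage records up into per-requester token totals and turns them into a rough cost figure.

Some assumptions apply:

- In practice you would run the equivalent query against the usage table in Unity Catalog. Here the rows are plain dictionaries.
- The column names (`requester`, `input_token_count`, `output_token_count`) are modeled on the serving usage system table, so check them against your workspace's schema.
- Prices are parameters; no real pricing is assumed.

```python
from collections import defaultdict
from typing import Any, Dict, Iterable, Mapping


def tokens_by_requester(rows: Iterable[Mapping[str, Any]]) -> Dict[str, Dict[str, int]]:
    """Sum input and output tokens per requester (column names are assumptions)."""
    totals: Dict[str, Dict[str, int]] = defaultdict(lambda: {"input": 0, "output": 0})
    for row in rows:
        who = str(row["requester"])
        # Missing or null counts (for example, failed requests) count as zero.
        totals[who]["input"] += int(row.get("input_token_count") or 0)
        totals[who]["output"] += int(row.get("output_token_count") or 0)
    return dict(totals)


def estimated_cost(totals: Mapping[str, int], usd_per_m_input: float, usd_per_m_output: float) -> float:
    """Rough cost from token totals, given your own per-million-token prices."""
    return totals["input"] / 1e6 * usd_per_m_input + totals["output"] / 1e6 * usd_per_m_output


if __name__ == "__main__":
    sample = [
        {"requester": "alice@example.com", "input_token_count": 1200, "output_token_count": 300},
        {"requester": "bob@example.com", "input_token_count": 800, "output_token_count": None},
    ]
    for user, t in tokens_by_requester(sample).items():
        print(user, t, round(estimated_cost(t, 1.0, 4.0), 6))
```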

Inference tables automatically log full request and response payloads, enabling prompt debugging, quality evaluation, and dataset curation for fine-tuning. This observability fuels continuous improvement without requiring you to build custom monitoring pipelines.
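As one example of dataset curation, the sketch below turns logged chat exchanges into JSONL records of the `{"messages": [...]}` form that chat fine-tuning APIs commonly accept. It assumes each inference-table row exposes `status_code`, plus `request` and `response` as JSON strings in the OpenAI chat format. Treat that schema as an assumption and adjust the field access to the table you actually have.

```python
import json
from typing import Any, Iterable, List, Mapping


def rows_to_finetune_jsonl(rows: Iterable[Mapping[str, Any]]) -> str:
    """Convert successful logged chat exchanges into JSONL training records (assumed schema)."""
    lines: List[str] = []
    for row in rows:
        if row.get("status_code") != 200:
            continue  # skip failed, rate-limited, or blocked requests
        request = json.loads(row["request"])
        response = json.loads(row["response"])
        choices = response.get("choices") or []
        if not choices:
            continue
        # The prompt messages followed by the model's reply form one training example.
        messages = list(request.get("messages", [])) + [choices[0]["message"]]
        lines.append(json.dumps({"messages": messages}))
    return "\n".join(lines)


if __name__ == "__main__":
    logged = [
        {
            "status_code": 200,
            "request": json.dumps({"messages": [{"role": "user", "content": "Summarize Q3."}]}),
            "response": json.dumps({"choices": [{"message": {"role": "assistant", "content": "Revenue rose."}}]}),
        }
    ]
    print(rows_to_finetune_jsonl(logged))
```

Before training on logged traffic, you would normally also filter for quality and remove sensitive data.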

Get started with GPT-5 and gpt-oss on Databricks

Start using OpenAI’s latest models with full observability, governance, and production reliability built in. Share access to GPT-5 securely today so your developers can try it in AI Playground and apply it to their data with SQL functions.
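On the SQL side, Databricks provides the `ai_query` function, which sends a prompt to a serving endpoint for each row. The sketch below only renders such a statement from Python; you would run the resulting SQL in a notebook or SQL warehouse.

A few details are hypothetical or deliberately conservative:

- The endpoint name `openai-gpt-5`, the table `main.support.tickets`, and its `body` column are hypothetical.
- Rather than guess at string-literal escaping rules, the sketch rejects quotes and backslashes outright.

```python
import re

# Plain identifiers, optionally dotted (catalog.schema.table).
_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*(\.[A-Za-z_][A-Za-z0-9_]*)*$")


def ai_query_sql(endpoint: str, table: str, column: str, instruction: str) -> str:
    """Render a SELECT that applies an instruction to each row's column via ai_query."""
    if not _IDENT.match(table) or not _IDENT.match(column):
        raise ValueError("table and column must be plain (optionally dotted) identifiers")
    for value in (endpoint, instruction):
        if "'" in value or "\\" in value:
            # Strict on purpose: no attempt to escape string literals.
            raise ValueError("endpoint and instruction must not contain quotes or backslashes")
    return (
        f"SELECT {column}, "
        f"ai_query('{endpoint}', CONCAT('{instruction}', {column})) AS gpt5_output "
        f"FROM {table}"
    )


if __name__ == "__main__":
    print(ai_query_sql("openai-gpt-5", "main.support.tickets", "body", "Summarize this ticket: "))
```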