I participated in the Mostly AI Prize and won both the FLAT and SEQUENTIAL data challenges. The competition was a fantastic learning experience, and in this post, I want to provide some insights into my winning solution.

The Competition

The goal of the competition was to generate a synthetic dataset with the same statistical properties as a source dataset, without copying the data.

The competition was split into two independent challenges:

- FLAT Data Challenge: Generate 100,000 records with 80 columns.

- SEQUENTIAL Data Challenge: Generate 20,000 sequences (groups) of records.

To measure the quality of the synthetic data, the competition used an Overall Accuracy metric. This score measures the similarity between the synthetic and source distributions for single columns (univariates), pairs of columns (bivariates), and triples of columns (trivariates) using the L1 distance. Additionally, privacy metrics like DCR (Distance to Closest Record) and NNDR (Nearest Neighbor Distance Ratio) were used to ensure submissions weren’t just overfitting or copying the training data.
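To make the metric more concrete, here is a minimal sketch of an L1-based accuracy for columns that have already been binned into integer codes. The function names, the binning, and the factor of 0.5 (which maps the L1 distance into the range 0 to 1) are assumptions for illustration; the official metric's exact binning and aggregation are not reproduced here.

```python
import numpy as np


def l1_accuracy(source_codes, synthetic_codes, n_bins):
    """Accuracy as 1 - 0.5 * L1 distance between two binned distributions.

    Both inputs are 1-D arrays of non-negative integer bin codes.
    The 0.5 scaling is an assumption so that the result lies in [0, 1].
    """
    p = np.bincount(source_codes, minlength=n_bins) / len(source_codes)
    q = np.bincount(synthetic_codes, minlength=n_bins) / len(synthetic_codes)
    return 1.0 - 0.5 * np.abs(p - q).sum()


def bivariate_codes(col_a, col_b, n_bins_b):
    """Encode a pair of binned columns as one joint cell index per row."""
    return col_a * n_bins_b + col_b
```

A bivariate score can then be sketched as `l1_accuracy` applied to the joint codes of two columns, and a trivariate score follows the same pattern with three columns.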

Solution Design

Initially, my goal was to create an ensemble of multiple different state-of-the-art models and combine their generated data. I experimented a lot with different models, but the results did not improve as much as I had hoped.

I pivoted my approach and focused on post-processing. First, I trained a single generative model from the Mostly AI SDK, and instead of generating the required number of samples for the submission, I oversampled to create a large pool of candidate samples. From this pool, I then selected the final output in a way that matches the statistical properties of the source dataset much more closely.

This approach led to a substantial jump in the leaderboard score. For the FLAT data challenge, the raw synthetic data from the model scored around 0.96, but after post-processing, the score jumped to 0.992. I used a modified version of this approach for the SEQUENTIAL data challenge, which yielded a similar improvement.

My final pipeline for the FLAT challenge consisted of three main steps:

- Iterative Proportional Fitting (IPF) to select an oversized, high-quality subset.

- Greedy Trimming to reduce the subset to the target size by removing the worst-fitting samples.

- Iterative Refinement to polish the final dataset by swapping samples for better fitting ones.

Step 1: Iterative Proportional Fitting (IPF)

The first step in my post-processing pipeline was to get a strong initial subset from the oversampled pool (2.5 million generated rows). For this, I used Iterative Proportional Fitting (IPF).

IPF is a classical statistical algorithm used to adjust a sample distribution to match a known set of marginals. In this case, I wanted the synthetic data’s bivariate (2-column) distributions to match those of the original data. I also tested uni- and trivariate distributions, but I found that focusing on the bivariate relationships yielded the best performance while being computationally fast.

Here’s how it worked:

- I identified the 5,000 most correlated column pairs in the training data using mutual information. These are the most important relationships to preserve.

- IPF then calculated fractional weights for each of the 2.5 million synthetic rows. The weights were adjusted iteratively so that the weighted sums of the bivariate distributions in the synthetic pool matched the target distributions from the training data.

- Finally, I used an expectation-rounding approach to convert these fractional weights into an integer count of how many times each row should be selected. This resulted in an oversized subset of 125,000 rows (1.25x the required size) that already had very strong bivariate accuracy.
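The pair ranking from the first bullet could be sketched as follows. This is an illustrative version that assumes every column is already binned into integer codes; `mutual_information` and `top_pairs` are hypothetical helper names, not functions from the competition code.

```python
from itertools import combinations

import numpy as np


def mutual_information(a, b, n_a, n_b):
    """Mutual information (in nats) between two binned columns."""
    joint = np.bincount(a * n_b + b, minlength=n_a * n_b)
    joint = joint.reshape(n_a, n_b) / len(a)
    pa = joint.sum(axis=1, keepdims=True)  # marginal of column a
    pb = joint.sum(axis=0, keepdims=True)  # marginal of column b
    nz = joint > 0  # skip empty cells to avoid log(0)
    return float((joint[nz] * np.log(joint[nz] / (pa @ pb)[nz])).sum())


def top_pairs(codes, n_bins, k):
    """Return the k column pairs with the highest mutual information."""
    scores = {
        (i, j): mutual_information(codes[:, i], codes[:, j], n_bins[i], n_bins[j])
        for i, j in combinations(range(codes.shape[1]), 2)
    }
    return sorted(scores, key=scores.get, reverse=True)[:k]
```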

The IPF step provided a high-quality starting point for the next phase.
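For readers who have not used IPF before, here is a compact sketch of the weight update and of one way to round the weights. It assumes each pool row has a precomputed joint-bin index per column pair, and it interprets "expectation rounding" as stochastic rounding (round up with probability equal to the fractional part); both the data layout and that interpretation are assumptions, and the names are illustrative.

```python
import numpy as np


def ipf_weights(cells, targets, n_iter=50):
    """Fit one weight per pool row so weighted cell counts match targets.

    cells:   (n_rows, n_pairs) int array; cells[i, k] is the joint-bin
             index of pool row i for column pair k.
    targets: list of 1-D float arrays; targets[k][c] is the desired
             weighted count of cell c for pair k.
    """
    n_rows, n_pairs = cells.shape
    w = np.full(n_rows, targets[0].sum() / n_rows)
    for _ in range(n_iter):
        for k in range(n_pairs):
            current = np.bincount(cells[:, k], weights=w, minlength=len(targets[k]))
            factor = np.ones_like(current)
            nonzero = current > 0
            factor[nonzero] = targets[k][nonzero] / current[nonzero]
            w *= factor[cells[:, k]]  # rescale every row by its cell's factor
    return w


def expectation_round(w, rng):
    """Round each weight up with probability equal to its fractional part."""
    floor = np.floor(w)
    return (floor + (rng.random(len(w)) < (w - floor))).astype(np.int64)
```

The rounded counts say how often each pool row is selected, and their expected sum equals the sum of the weights, which is what makes an oversized target such as 1.25x easy to hit approximately.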

Step 2: Trimming

Generating an oversized subset of 125,000 rows from IPF was a deliberate choice that enabled this additional trimming step to remove samples that didn’t fit well.

I used a greedy approach that iteratively calculates the “error contribution” of each row in the current subset. The rows that contribute the most to the statistical distance from the target distribution are identified and removed. This process repeats until only 100,000 rows remain, ensuring that the worst 25,000 rows are discarded.
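The idea can be sketched in a few lines of NumPy. This is a simplified illustration, not the competition code: it assumes each row is described by one global cell index per feature (with distinct index ranges per feature), and `greedy_trim` and its `batch` parameter are hypothetical names.

```python
import numpy as np


def greedy_trim(cells, target, n_keep, batch=1):
    """Drop the rows whose removal reduces the L1 error the most.

    cells:  (n_rows, n_feats) int array of global cell indices per row.
    target: 1-D float array of desired counts per cell at size n_keep.
    Returns the indices of the rows that are kept.
    """
    keep = np.arange(len(cells))
    counts = np.bincount(cells.ravel(), minlength=len(target)).astype(np.float64)
    while len(keep) > n_keep:
        excess = counts - target
        # Change in L1 error per cell if one row leaves that cell.
        delta_cell = np.abs(excess - 1.0) - np.abs(excess)
        delta_row = delta_cell[cells[keep]].sum(axis=1)
        n_drop = min(batch, len(keep) - n_keep)
        drop = np.argsort(delta_row)[:n_drop]  # most negative first
        np.subtract.at(counts, cells[keep[drop]].ravel(), 1.0)
        keep = np.delete(keep, drop)
    return keep
```

A larger `batch` trades a little accuracy for speed, because the per-row scores are only recomputed after each batch has been removed.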

Step 3: Refinement (Swapping)

The final step was an iterative refinement process to swap rows from the subset with better rows from the much larger, unused data pool (the remaining 2.4 million rows).

In each iteration, the algorithm:

- Identifies the worst rows within the current 100k subset (those contributing most to the L1 error).

- Searches for the best replacement candidates from the outside pool that would reduce the L1 error if swapped in.

- Performs the swap if it results in a better overall score.
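One iteration of this loop might look like the sketch below. It is a simplified single-swap version under the same assumed data layout of one global cell index per feature; the random candidate sampling and all names are assumptions for illustration.

```python
import numpy as np


def l1_error(counts, target):
    return np.abs(counts - target).sum()


def refine_by_swapping(cells, selected, pool, target, n_iter=100, n_candidates=50, seed=0):
    """Swap badly fitting selected rows for better rows from the unused pool.

    selected, pool: disjoint 1-D arrays of row indices into cells.
    """
    rng = np.random.default_rng(seed)
    selected, pool = selected.copy(), pool.copy()
    counts = np.bincount(cells[selected].ravel(), minlength=len(target)).astype(np.float64)
    for _ in range(n_iter):
        # 1. Worst selected row: removing it lowers the L1 error the most.
        excess = counts - target
        removal_delta = (np.abs(excess - 1) - np.abs(excess))[cells[selected]].sum(axis=1)
        i = int(np.argmin(removal_delta))
        without = counts.copy()
        np.subtract.at(without, cells[selected[i]], 1.0)
        # 2. Best replacement among a random sample of pool rows.
        cand = rng.choice(len(pool), size=min(n_candidates, len(pool)), replace=False)
        excess = without - target
        add_delta = (np.abs(excess + 1) - np.abs(excess))[cells[pool[cand]]].sum(axis=1)
        j = int(cand[int(np.argmin(add_delta))])
        # 3. Accept the swap only if the overall error really drops.
        trial = without.copy()
        np.add.at(trial, cells[pool[j]], 1.0)
        if l1_error(trial, target) < l1_error(counts, target):
            selected[i], pool[j] = pool[j], selected[i]
            counts = trial
    return selected
```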

As the accuracy of the synthetic sample is already quite high, the additional gain from this process is rather small.

Adapting for the Sequential Challenge

The SEQUENTIAL challenge required a similar approach, but with two changes. First, a sample consists of several rows, connected by the group ID. Secondly, the competition metric adds a measure for coherence. This means not only do the statistical distributions need to match, but the sequences of events also need to be similar to the source dataset.

My post-processing pipeline was adapted to handle groups and also optimize for coherence:

- Coherence-Based Pre-selection: Before optimizing for statistical accuracy, I ran a specialized refinement step. This algorithm iteratively swapped entire groups (sequences) to specifically match the coherence metrics of the original data, such as the distribution of “unique categories per sequence” and “sequences per category”. This ensured that the rest of the post-processing started from a sound sequential structure.

- Refinement (Swapping): The 20,000 groups selected for coherence then went through the same statistical refinement process as the flat data. The algorithm swapped entire groups with better ones from the pool to minimize the L1 error of the uni-, bi-, and trivariate distributions. A secret ingredient was to include the “Sequence Length” as a feature, so the group lengths are also considered in the swapping.
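The group-level quantities mentioned in these two steps can be computed along the following lines. This is a sketch for a single categorical column encoded as integer codes; `group_features` is a hypothetical helper, and the official coherence metric may define these statistics differently.

```python
import numpy as np


def group_features(group_ids, categories):
    """Per-group and per-category statistics for one categorical column.

    Returns (groups, lengths, unique_per_group, groups_per_category):
    - lengths[g]:             number of rows in group g (sequence length)
    - unique_per_group[g]:    number of distinct categories in group g
    - groups_per_category[c]: number of groups in which category c occurs
    """
    groups, inverse, lengths = np.unique(
        group_ids, return_inverse=True, return_counts=True
    )
    # Deduplicate (group, category) pairs so each pair is counted once.
    pairs = np.unique(np.stack([inverse.ravel(), categories], axis=1), axis=0)
    unique_per_group = np.bincount(pairs[:, 0], minlength=len(groups))
    groups_per_category = np.bincount(pairs[:, 1])
    return groups, lengths, unique_per_group, groups_per_category
```

Binning `lengths` and treating it like any other column is one way to let the swapping step take the sequence length into account.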

This two-stage approach ensured the final dataset was strong in both statistical accuracy and sequential coherence. Interestingly, the IPF-based approach that worked so well for the flat data was less effective for the sequential challenge. Therefore, I removed it to focus computing time on the coherence and swapping algorithms, which yielded better results.

Making It Fast: Key Optimizations

The post-processing strategy was computationally expensive, and making it run within the competition time limit was a challenge of its own. To succeed, I relied on a few key optimizations.

First, I reduced the data types wherever possible to handle the massive sample data pool without running out of memory. Changing the numerical type of a large matrix from 64-bit to 32-bit or 16-bit cuts its memory footprint to a half or a quarter.
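As a small illustration of the effect, the sketch below picks the smallest signed integer type that can hold all values of an array. `downcast_int` is a hypothetical helper written for this post, not part of NumPy.

```python
import numpy as np


def downcast_int(a):
    """Cast an integer array to the smallest signed dtype that fits its values."""
    for dtype in (np.int8, np.int16, np.int32):
        info = np.iinfo(dtype)
        if a.min() >= info.min and a.max() <= info.max:
            return a.astype(dtype)
    return a


# Example: bin codes below 1,000 fit into int16, a quarter of the int64 size.
codes64 = np.arange(1000, dtype=np.int64)
codes16 = downcast_int(codes64)
print(codes64.nbytes, "bytes ->", codes16.nbytes, "bytes")
```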

Secondly, when changing the data type was not enough, I used sparse matrices from SciPy. This technique allowed me to store the statistical contributions of each sample in an incredibly memory-efficient way.
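To show why a sparse layout fits this problem, here is a sketch that stores one row per sample and one column per statistical cell, so each sample only has a handful of non-zero entries. The layout and the name `contribution_matrix` are assumptions for illustration.

```python
import numpy as np
from scipy import sparse


def contribution_matrix(cells, n_cells):
    """Build a CSR matrix with a 1 for every cell a sample falls into.

    cells: (n_rows, n_feats) int array of global cell indices per sample.
    """
    n_rows, n_feats = cells.shape
    row_idx = np.repeat(np.arange(n_rows), n_feats)
    data = np.ones(n_rows * n_feats, dtype=np.int32)
    return sparse.csr_matrix(
        (data, (row_idx, cells.ravel())), shape=(n_rows, n_cells)
    )


# Summing a subset of rows yields the cell counts of that subset.
X = contribution_matrix(np.array([[0, 2], [1, 2], [0, 3]]), n_cells=4)
subset_counts = np.asarray(X[[0, 1]].sum(axis=0)).ravel()
```

With millions of samples and many thousands of cells, a dense matrix of this shape would be almost entirely zeros, while the CSR form only stores the non-zero entries plus their indices.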

Lastly, the core refinement loop involved a lot of specialized calculations, some of which were very slow with NumPy. To overcome this, I used Numba. I extracted the bottlenecks in my code into dedicated functions decorated with @numba.njit, which Numba compiles into highly optimized machine code that runs at speeds comparable to C.

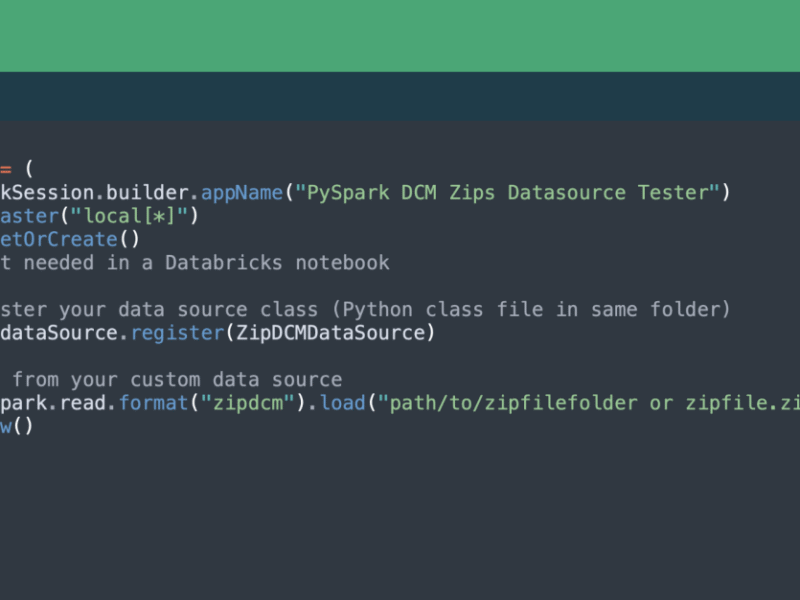

Here is an example of how I needed to speed up the summation of rows in sparse matrices, which was a major bottleneck in the original NumPy version.

    import numpy as np
    import numba

    # This can make the logic run hundreds of times faster.
    @numba.njit
    def _rows_sum_csr_int32(data, indices, indptr, rows, K):
        """
        Sum CSR rows into a dense 1-D vector without creating
        intermediate scipy / numpy objects.
        """
        out = np.zeros(K, dtype=np.int32)
        for r in rows:
            start = indptr[r]
            end = indptr[r + 1]
            for p in range(start, end):
                out[indices[p]] += data[p]
        return out

However, Numba is not a silver bullet; it’s helpful for numerical, loop-heavy code, but for most calculations, it is faster and easier to stick to vectorized NumPy operations. I advise you to only try it when a NumPy approach does not reach the required speed.

Final Thoughts

Even though ML models are getting increasingly strong, I think that for most problems that Data Scientists are trying to solve, the secret ingredient is often not in the model. Of course, a strong model is an integral part of a solution, but the pre- and post-processing are equally important. For these challenges, a post-processing pipeline targeted specifically at the evaluation metric led me to the winning solution, without any additional ML.

I learned a lot in this challenge, and I want to thank Mostly AI and the jury for their great job in organizing this fantastic competition.

My code and solutions for both challenges are open-source and can be found here: