For an AI project to succeed, mastering expectation management comes first.

When working with AI projects, uncertainty isn’t just a side effect; it can make or break the entire initiative.

Most people impacted by AI projects don’t fully understand how AI works, or that errors are not only inevitable but actually a natural and important part of the process. If you’ve been involved in AI projects before, you’ve probably seen how things can go wrong fast when expectations aren’t clearly set with stakeholders.

In this post, I’ll share practical tips to help you manage expectations and keep your next AI project on track, especially in the B2B (business-to-business) space.

(Rarely) promise performance

When you don’t yet know the data, the environment, or even the project’s exact goal, promising performance upfront is a perfect way to ensure failure.

You’ll likely miss the mark or, worse, be incentivised to use questionable statistical tricks to make the results look better than they are.

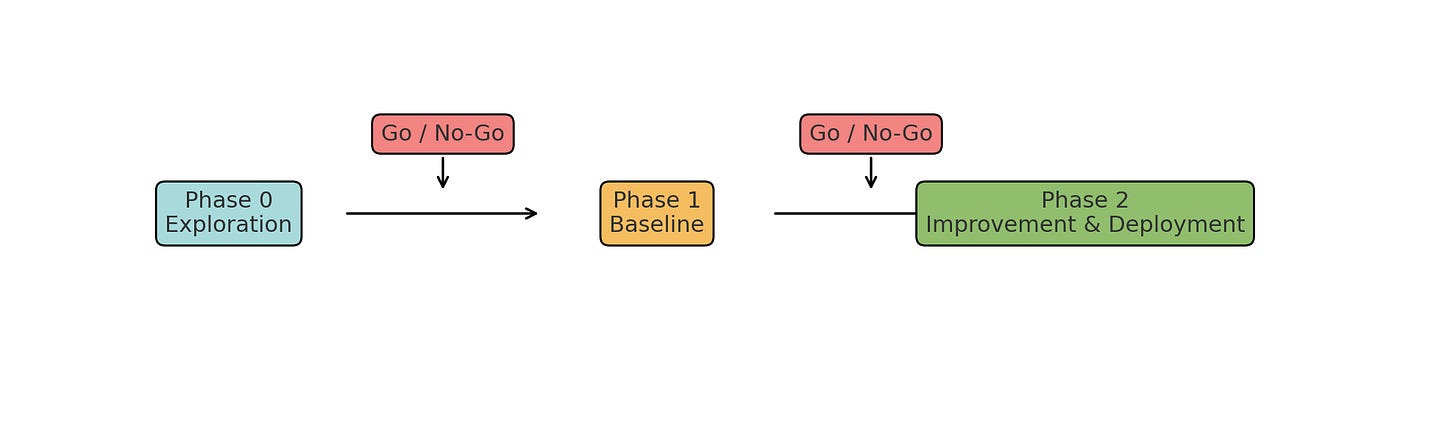

A better approach is to discuss performance expectations only after you’ve seen the data and explored the problem in depth. At DareData, one of our key practices is adding a “Phase 0” to projects. This early stage allows us to explore possible directions, assess feasibility, and establish a potential baseline, all before the customer formally approves the project.
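One cheap way to "establish a potential baseline" during Phase 0 is to measure what a trivial predictor already achieves before anyone commits to a target. The sketch below assumes a binary classification problem and a labelled sample of the customer's data; the helper name `majority_baseline_accuracy` and the sample labels are hypothetical, not part of any DareData tooling.

```python
from collections import Counter


def majority_baseline_accuracy(labels):
    """Accuracy obtained by always predicting the most frequent label."""
    if not labels:
        raise ValueError("labels must not be empty")
    # most_common(1) returns [(label, count)] for the most frequent label
    _, count = Counter(labels).most_common(1)[0]
    return count / len(labels)


# Hypothetical Phase 0 sample: 1 = event of interest, 0 = no event
sample_labels = [0] * 90 + [1] * 10
baseline = majority_baseline_accuracy(sample_labels)
print(f"Majority-class baseline accuracy: {baseline:.0%}")
```

With this (made-up) class balance, a model promised at "90% accuracy" would add nothing over always predicting "no event", which is exactly the kind of discovery you want to make before putting a number in a proposal.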

The only time I recommend committing to a performance target from the start is when:

- You have complete confidence in, and deep knowledge of, the existing data.

- You’ve solved the exact same problem successfully many times before.

Map Stakeholders

Another essential step is identifying who will be interested in your project from the very start. Do you have multiple stakeholders? Are they a mix of business and technical profiles?

Each group will have different priorities, perspectives, and measures of success. Your job is to ensure you deliver value that matters to all of them.

This is where stakeholder mapping becomes essential. You need to identify each stakeholder and understand their goals, concerns, and expectations. Then you must tailor your communication and decision-making to each of these groups throughout the project.

Business stakeholders might care most about ROI and operational impact, while technical stakeholders will focus on data quality, infrastructure, and scalability. If either side feels their needs aren’t being addressed, you are going to have a hard time shipping your product or solution.

One example from my career was a project where a customer needed an integration with a product-scanning app. From the start, this integration wasn’t guaranteed, and we had no idea how easy it would be to implement. We decided to bring the app’s developers into the conversation early. That’s when we learned they were about to launch the exact feature we had planned to build, in just two weeks. This saved the customer a lot of time and money, and spared the team the frustration of building something that would never be used.

Communicate AI’s Probabilistic Nature Early

AI is probabilistic by nature, a fundamental difference from traditional software engineering. In most cases, stakeholders aren’t used to working with this kind of uncertainty. To make matters worse, humans aren’t naturally good at thinking in probabilities unless we’ve been trained for it (which is why lotteries still sell so well).

That’s why it’s essential to communicate the probabilistic nature of AI projects from the very start. If stakeholders expect deterministic, 100% consistent results, they’ll quickly lose trust when reality doesn’t match that vision.

Today, this is easier to illustrate than ever. Generative AI offers clear, relatable examples: even when you give the exact same input, the output is rarely identical. Use demonstrations early and communicate this from the first meeting. Don’t assume that stakeholders understand how AI works.
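If you want a demonstration that doesn't depend on a live model, the toy sketch below shows where the variation comes from: a language model turns scores into a probability distribution (softmax, scaled by a temperature) and samples the next token from it. The three tokens and their scores are made up for illustration; real models do this over a vocabulary of many thousands of tokens.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_reply(tokens, logits, temperature, rng):
    """Draw one token according to the temperature-scaled probabilities."""
    probs = softmax(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Toy "model": fixed scores for the next word after the very same prompt
tokens = ["approved", "pending", "rejected"]
logits = [2.0, 1.5, 0.5]

rng = random.Random(0)
replies = [sample_reply(tokens, logits, temperature=1.0, rng=rng) for _ in range(10)]
print(replies)  # same input, different outputs across draws
```

Lowering the temperature makes the top-scoring token dominate, but it is worth telling stakeholders that hosted LLM services may still not guarantee identical outputs for identical inputs.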

Set Phased Milestones

From day one, define clear checkpoints in the project where stakeholders can assess progress and make a go/no-go decision. This not only builds confidence but also keeps expectations aligned throughout the process.

For each milestone, establish a consistent communication routine with reports, summary emails, or short steering meetings. The goal is to keep everyone informed about progress, risks, and next steps.

Remember: stakeholders would rather hear bad news early than be left in the dark.

Steer Away from Technical Metrics Toward Business Impact

Technical metrics alone rarely tell the full story when it comes to what matters most: business impact.

Take accuracy, for example. If your model scores 60%, is that good or bad? On paper, it might look poor. But what if every true positive generates significant savings for the organization, and false positives have little or no cost? Suddenly, that same 60% starts looking very attractive.
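To put numbers on the 60% example, here is a small worked calculation. The confusion-matrix counts, the value of a true positive and the cost of a false positive are all hypothetical, chosen only so that accuracy comes out at exactly 60%.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    tp: int  # true positives
    fp: int  # false positives
    fn: int  # false negatives
    tn: int  # true negatives

    @property
    def accuracy(self) -> float:
        total = self.tp + self.fp + self.fn + self.tn
        return (self.tp + self.tn) / total


def net_value(counts: ConfusionCounts, value_per_tp: float, cost_per_fp: float) -> float:
    """Savings from correctly caught cases minus the cost of false alarms."""
    return counts.tp * value_per_tp - counts.fp * cost_per_fp


# Hypothetical evaluation on 1,000 cases
counts = ConfusionCounts(tp=100, fp=380, fn=20, tn=500)
print(f"Accuracy: {counts.accuracy:.0%}")  # → Accuracy: 60%
print(f"Net value: EUR {net_value(counts, value_per_tp=1000, cost_per_fp=5):,.0f}")  # → Net value: EUR 98,100
```

The headline metric looks mediocre, yet under these assumptions the model is worth close to EUR 100,000 on this batch, which is the number a business stakeholder actually cares about.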

Business stakeholders often overemphasize technical metrics because they seem easy to grasp, which can lead to misguided perceptions of success or failure. In reality, business value is both more compelling and more intuitive to communicate.

Whenever possible, focus your reporting on business impact and leave the technical metrics to the data science team.

An example from a project at my company: we built an algorithm to detect equipment failures. Every correctly identified failure saved the company over €500 per factory piece. However, each false positive stopped the production line for more than two minutes, costing around €300 on average. Because false positives were so costly, we focused on optimizing for precision rather than pushing accuracy or recall higher. This way, we avoided unnecessary stoppages while still capturing the most valuable failures.
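One way to turn those two figures into a modelling decision is to pick the alert threshold that maximizes net savings instead of a technical metric. The sketch below uses the €500 saving per true positive and €300 cost per false positive from the example; the scores, labels and candidate thresholds are made up, and it deliberately ignores the cost of missed failures because that figure isn't given.

```python
def net_savings(scores, labels, threshold, saving_per_tp=500.0, cost_per_fp=300.0):
    """Savings from flagged real failures minus the cost of needless stoppages."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    return tp * saving_per_tp - fp * cost_per_fp


def precision(scores, labels, threshold):
    """Share of flagged items that were real failures."""
    flagged = [y for s, y in zip(scores, labels) if s >= threshold]
    return sum(flagged) / len(flagged) if flagged else 0.0


def best_threshold(scores, labels, candidates, **costs):
    """Candidate threshold with the highest net savings."""
    return max(candidates, key=lambda t: net_savings(scores, labels, t, **costs))


# Hypothetical model scores and ground truth (1 = real failure)
scores = [0.95, 0.9, 0.85, 0.8, 0.7, 0.6, 0.55, 0.5, 0.4, 0.3]
labels = [1, 1, 1, 0, 1, 0, 0, 1, 0, 0]

t = best_threshold(scores, labels, candidates=[0.3, 0.5, 0.7, 0.9])
print(f"Best threshold: {t}, net savings: EUR {net_savings(scores, labels, t):,.0f}, "
      f"precision: {precision(scores, labels, t):.0%}")
# → Best threshold: 0.7, net savings: EUR 1,700, precision: 80%
```

On this toy data the winning threshold is neither the most permissive one (which catches every failure) nor the strictest (perfect precision but fewer failures caught), which mirrors the balance described above.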

Showcase Scenarios of Interpretability

More accurate models are not always more interpretable, and that’s a trade-off stakeholders need to understand from day one.

Often, the techniques that give us the highest performance (like complex ensemble methods or deep learning) are also the ones that make it hardest to explain why a specific prediction was made. Simpler models, on the other hand, may be easier to interpret but can sacrifice accuracy.

This trade-off is not inherently good or bad; it’s a decision that should be made in the context of the project’s goals. For example:

- In highly regulated industries (finance, healthcare), interpretability might be more valuable than squeezing out the last few points of accuracy.

- In other contexts, such as product marketing, a performance boost could bring such significant business gains that reduced interpretability is an acceptable compromise.
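A concrete scenario helps when showcasing this trade-off. With a simple linear (logistic) model, each prediction can be broken down into per-feature contributions that a stakeholder can read directly; complex ensembles or deep networks generally need separate post-hoc explanation tools to approximate this. The credit-style feature names, weights and applicant below are hypothetical.

```python
import math

# Hypothetical weights of a logistic-regression credit model (illustrative only)
WEIGHTS = {"income_k": 0.04, "late_payments": -0.8, "years_as_customer": 0.3}
BIAS = -1.5


def explain(applicant):
    """Return the approval probability and each feature's contribution to the logit."""
    contributions = {name: w * applicant[name] for name, w in WEIGHTS.items()}
    logit = BIAS + sum(contributions.values())
    probability = 1 / (1 + math.exp(-logit))  # logistic (sigmoid) function
    return probability, contributions


applicant = {"income_k": 50, "late_payments": 2, "years_as_customer": 3}
probability, contributions = explain(applicant)
print(f"Approval probability: {probability:.2f}")
# Largest effects first, so the "why" behind the decision is readable at a glance
for name, value in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"  {name:>18}: {value:+.2f}")
```

Here the explanation is the model itself: income pushes the score up, late payments pull it down. That transparency is what you may give up when you move to a more accurate but more opaque model.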

Don’t shy away from raising this early. You need to know that everyone agrees on the balance between accuracy and transparency before you commit to a path.

Think about Deployment from Day 1

AI models are built to be deployed. From the very start, you should design and develop them with deployment in mind.

The ultimate goal isn’t just to create an impressive model in a lab; it’s to make sure it works reliably in the real world, at scale, and integrated into the organization’s workflows.

Ask yourself: What’s the use of the “best” AI model in the world if it can’t be deployed, scaled, or maintained? Without deployment, your project is just an expensive proof of concept with no lasting impact.

Consider deployment requirements early (infrastructure, data pipelines, monitoring, retraining processes), and you’ll ensure your AI solution is usable, maintainable, and impactful. Your stakeholders will thank you.
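Monitoring and retraining are easier to agree on when the trigger is defined up front. As one example, the sketch below computes the Population Stability Index (PSI), a common way to compare a feature's live distribution against its training distribution. The 0.25 threshold is a widely used rule of thumb rather than a standard, and the function name and data are hypothetical.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """Compare a live feature distribution against its training distribution.

    Bins are equal-width over the training (expected) range; eps avoids log(0)
    when a bin is empty.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all training values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values into the edge bins
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


RETRAIN_THRESHOLD = 0.25  # common rule of thumb, not a standard

training = [i / 100 for i in range(100)]
live = [0.5 + i / 200 for i in range(100)]  # hypothetical production data that has shifted

score = population_stability_index(training, live)
print(f"PSI = {score:.2f}")
if score > RETRAIN_THRESHOLD:
    print("Significant drift: schedule a retraining review")
```

Agreeing on a check like this before launch tells stakeholders in advance when, and why, the model will need attention after go-live.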

(Bonus) In GenAI, don’t shy away from speaking about the cost

Solving a problem with Generative AI (GenAI) can deliver higher accuracy, but it often comes at a cost.

To achieve the level of performance many business users imagine, such as what they experience with ChatGPT, you may need to:

- Call a large language model (LLM) multiple times in a single workflow.

- Implement Agentic AI architectures, where the system uses multiple steps and reasoning chains to reach a better answer.

- Use more expensive, higher-capacity LLMs that significantly increase your cost per request.

This means that in GenAI projects, performance is never just about output quality; it’s always a balance between quality, speed, scalability, and cost.

When I speak with stakeholders about GenAI performance, I always bring cost into the conversation early. Business users often assume that the high performance they see in consumer-facing tools like ChatGPT will translate directly into their own use case. In reality, those results are achieved with models and configurations that may be prohibitively expensive to run at scale in a production environment (and are only feasible for multi-billion-dollar companies).
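A back-of-the-envelope estimate makes this conversation concrete. The sketch below multiplies token counts by per-token prices for every model call in a workflow; all prices, token counts and request volumes are hypothetical placeholders, not any vendor's actual pricing.

```python
from dataclasses import dataclass


@dataclass
class LLMCall:
    """One model call in a workflow; all prices are hypothetical placeholders."""
    input_tokens: int
    output_tokens: int
    price_in_per_1k: float   # price per 1,000 input tokens
    price_out_per_1k: float  # price per 1,000 output tokens

    def cost(self) -> float:
        return (self.input_tokens / 1000 * self.price_in_per_1k
                + self.output_tokens / 1000 * self.price_out_per_1k)


def monthly_cost(workflow: list[LLMCall], requests_per_month: int) -> float:
    """Cost of running the whole workflow once per request, over a month."""
    return requests_per_month * sum(call.cost() for call in workflow)


# Option A: a single call to a smaller, cheaper model
simple = [LLMCall(1_500, 300, price_in_per_1k=0.0005, price_out_per_1k=0.0015)]
# Option B: an agentic workflow making five calls to a larger model with longer prompts
# (the same read-only LLMCall object is reused five times, which is fine here)
agentic = [LLMCall(3_000, 500, price_in_per_1k=0.005, price_out_per_1k=0.015)] * 5

for name, workflow in [("simple", simple), ("agentic", agentic)]:
    print(f"{name}: {monthly_cost(workflow, 100_000):,.2f} per month")
```

Under these made-up numbers, 100,000 requests a month cost about 120 with the simple setup versus about 11,250 with the agentic one, roughly a 94x difference for whatever quality gain the extra calls deliver.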

The key is setting realistic expectations:

- If the business is willing to pay for top-tier performance, great.

- If cost constraints are strict, you may need to optimize for a “good enough” solution that balances performance with affordability.

Those are my tips for setting expectations in AI projects, especially in the B2B space, where stakeholders often come in with strong assumptions.

What about you? Do you have tips or lessons learned to add? Share them in the comments!