Picture this: your data science team finally cracks it. The model hits 95% accuracy, the charts look beautiful, and the demos run flawlessly. You high-five across Zoom, Slack buzzes with excitement, and leadership is ready to pop champagne.

Fast-forward three months. Users are frustrated, churn is rising, and support tickets are piling up. What happened?

This story plays out far too often in SaaS and AI startups. The hard truth? Your AI model isn’t your product. It’s just one component in a much bigger system — and unless you get user experience, data flows, and edge cases right, that shiny AI model won’t translate into product success.

The AI Illusion: Model Accuracy vs. Real-World Impact

It’s tempting to fall in love with model performance metrics. Precision, recall, F1 scores — they feel concrete, measurable, and impressive. But real-world product success doesn’t live inside a Jupyter Notebook.

Think about it: customers don’t care about your model’s 95% accuracy. They care about smooth experiences, fast answers, and trustworthy outcomes. A beautifully performing model that sits behind a clunky interface, misfires on rare but critical cases, or lags due to data bottlenecks will fail the product test — every time.

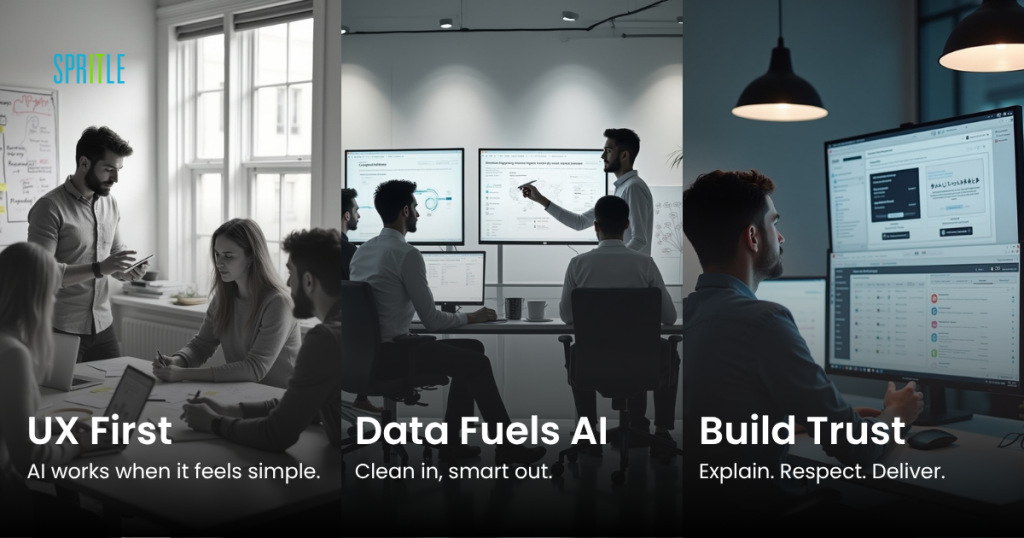

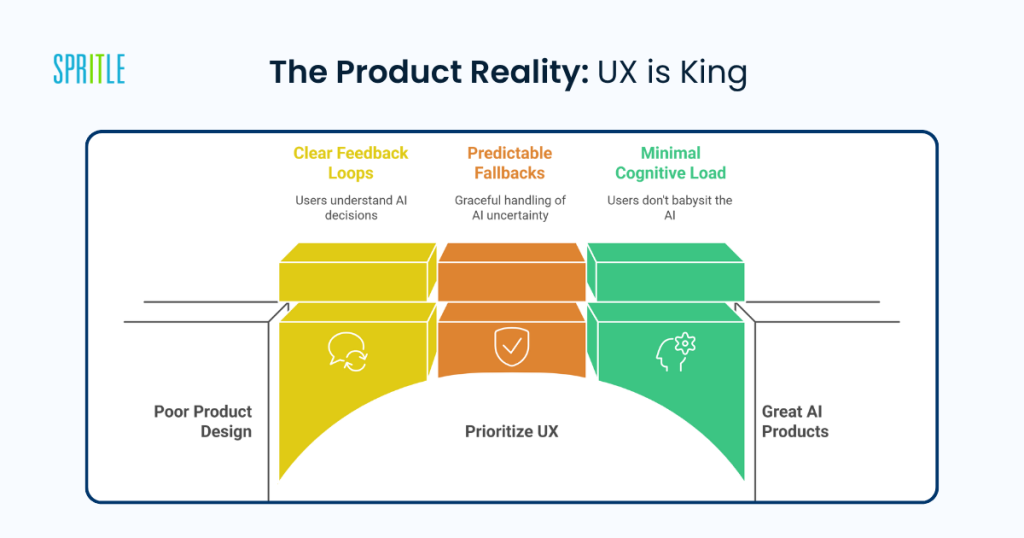

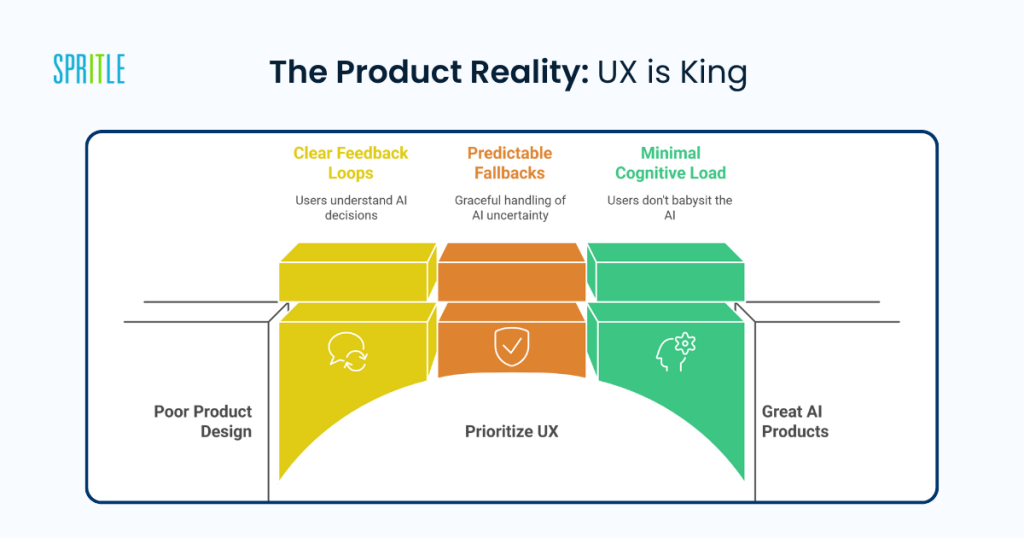

The Product Reality: UX is King

AI can add incredible power, but it cannot compensate for poor product design. A confusing workflow, ambiguous error messages, or unpredictable behavior will drive users away, regardless of how smart your model is.

Great AI products prioritize:

- Clear feedback loops (so users understand why AI made a decision)

- Predictable, graceful fallbacks (especially when AI is uncertain)

- Minimal cognitive load (users shouldn’t feel like they need to babysit your AI)
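To make the first two principles concrete, here is a minimal Python sketch of a fallback layer that sits between a model and the UI. It assumes the model returns a label, a confidence score between 0 and 1, and a list of the inputs that most influenced the result. The `Prediction` class, the `respond` function, and the 0.8 threshold are all hypothetical and would need tuning against your own data.

```python
from dataclasses import dataclass

# Hypothetical cutoff; in practice you would tune this on validation data.
CONFIDENCE_THRESHOLD = 0.8


@dataclass
class Prediction:
    label: str
    confidence: float        # model's score in [0, 1] (assumed)
    top_features: list[str]  # inputs that most influenced the decision (assumed)


def respond(pred: Prediction) -> str:
    """Turn a raw model prediction into a user-facing message."""
    if pred.confidence < CONFIDENCE_THRESHOLD:
        # Graceful fallback: admit uncertainty and hand control back to the user.
        return "I'm not sure about this one. Please review it manually."
    # Feedback loop: show the decision together with the main reasons for it.
    reasons = ", ".join(pred.top_features)
    return f"Suggested: {pred.label} (based on {reasons})"


print(respond(Prediction("refund", 0.93, ["order age", "amount"])))
print(respond(Prediction("refund", 0.41, ["amount"])))
```

Below the threshold, the user gets an honest "I'm not sure" and stays in control; above it, the suggestion arrives with its reasons, which is a feedback loop in its simplest form.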

If AI is the engine, UX is the steering wheel, brakes, and dashboard. Without it, you’re just asking users to ride in a Ferrari with no controls.

Data Pipelining: The Unseen Backbone

Many AI failures in production happen not because the model is wrong, but because the data feeding it is stale, incomplete, or poorly contextualized.

SaaS teams often underestimate:

- How difficult it is to build real-time, clean data pipelines

- The pain of handling missing data or inconsistent formats

- The need for constant monitoring and refreshing of input streams

AI is only as good as the data flowing into it. A weak data pipeline turns great models into garbage-output machines. Getting data pipelining right is usually harder, and more important, than squeezing out another 2% of model accuracy.
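As an illustration, a pipeline can reject or quarantine bad inputs before they ever reach the model. The sketch below assumes a hypothetical record schema (`customer_id`, `plan`, `updated_at`) and a 24-hour freshness window, both chosen only for illustration. It checks for missing fields, normalizes timestamps that arrive with or without a timezone, and flags stale data.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical schema and freshness window, chosen only for illustration.
REQUIRED_FIELDS = ("customer_id", "plan", "updated_at")
MAX_AGE = timedelta(hours=24)


def validate_record(record: dict, now: datetime) -> list[str]:
    """Return a list of problems; an empty list means the record is usable."""
    problems = []
    for name in REQUIRED_FIELDS:
        if record.get(name) in (None, ""):
            problems.append(f"missing {name}")

    raw_ts = record.get("updated_at")
    if raw_ts:
        try:
            # Normalize formats: parse ISO 8601 and assume UTC when no offset is given.
            ts = datetime.fromisoformat(raw_ts)
            if ts.tzinfo is None:
                ts = ts.replace(tzinfo=timezone.utc)
        except (TypeError, ValueError):
            problems.append(f"unparseable updated_at: {raw_ts!r}")
        else:
            # Freshness check: data older than MAX_AGE should not drive predictions.
            if now - ts > MAX_AGE:
                problems.append("stale record")
    return problems


now = datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc)
print(validate_record({"customer_id": "", "plan": "pro", "updated_at": "yesterday"}, now))
```

Counting how many records fail each check per batch, and alerting when that count jumps, is one simple way to get the constant monitoring described above.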

The Edge Case Trap: Small Failures, Big Consequences

Edge cases are where trust gets broken. Users don't remember the 99 times your AI worked well; they remember the one time it failed in a critical situation.

In sectors like healthcare, aviation, and finance, edge cases aren’t rare curiosities — they’re mission-critical. Building robust AI products means:

- Proactively identifying and testing edge cases

- Designing transparent failure modes (e.g., “I’m not sure” responses)

- Giving users control to override or audit AI decisions
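One way to support overrides and audits is to store every AI decision alongside any human correction, with an append-only history. The `Decision` class below is a hypothetical sketch, not a reference design; a production system would persist the history to durable storage rather than keep it in memory.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class Decision:
    """One AI decision plus any human override, kept for later audit."""
    item_id: str
    ai_label: str
    ai_confidence: float
    override_label: Optional[str] = None
    override_by: Optional[str] = None
    history: list[tuple[str, str, str]] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Record the model's original suggestion as the first audit entry.
        self._log("ai_decision", f"{self.ai_label} ({self.ai_confidence:.2f})")

    @property
    def final_label(self) -> str:
        # A human override always wins over the model's suggestion.
        if self.override_label is not None:
            return self.override_label
        return self.ai_label

    def override(self, new_label: str, user: str) -> None:
        self.override_label = new_label
        self.override_by = user
        self._log("override", f"{user} changed label to {new_label}")

    def _log(self, event: str, detail: str) -> None:
        # Append-only trail: when it happened, what happened, and the details.
        self.history.append((datetime.now(timezone.utc).isoformat(), event, detail))


d = Decision("txn-42", "fraud", 0.62)
d.override("legitimate", "analyst@example.com")
print(d.final_label)
```

Keeping the model's suggestion and the human correction side by side also produces a record of where the model disagrees with people, which is exactly where new edge cases tend to hide.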

Ignoring edge cases is like building a house with beautiful walls but a cracked foundation.

A Real-World Example: Conversational AI Gone Wrong

We’ve all experienced chatbots that get stuck in loops, voice assistants that misunderstand basic commands, or predictive systems that confidently offer useless results.

Why? Because the AI model worked in isolation, but real-world product use was messy:

- Users asked unpredictable questions

- Accents or dialects caused transcription errors

- Backend systems failed silently

Winning AI products design for messiness: handling ambiguity, adapting to user behavior, and offering simple recovery paths.
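A simple recovery path for the chatbot failures above is a guard that watches the conversation and escalates before the user gives up. This sketch assumes a hypothetical intent classifier that returns an intent name, or `None` when it cannot understand the message. The `ConversationGuard` class and its limits (two consecutive misunderstandings, three identical intents in a row) are illustrative, not recommendations.

```python
from collections import deque
from typing import Optional

# Hypothetical limits, for illustration only.
MAX_FALLBACKS = 2  # consecutive "didn't understand" turns before escalating
REPEAT_WINDOW = 3  # identical intents in a row that suggest the user is stuck


class ConversationGuard:
    """Detects when a chatbot is failing and chooses a recovery action."""

    def __init__(self) -> None:
        self.fallbacks = 0
        self.recent_intents: deque = deque(maxlen=REPEAT_WINDOW)

    def next_action(self, intent: Optional[str]) -> str:
        if intent is None:
            # The classifier could not understand this message.
            self.fallbacks += 1
        else:
            self.fallbacks = 0
            self.recent_intents.append(intent)

        # The same intent over and over usually means the answers are not helping.
        looping = (
            len(self.recent_intents) == REPEAT_WINDOW
            and len(set(self.recent_intents)) == 1
        )
        if self.fallbacks >= MAX_FALLBACKS or looping:
            return "escalate"
        if intent is None:
            return "rephrase"
        return "answer"


guard = ConversationGuard()
print([guard.next_action(i) for i in ["billing", None, None]])
```

Here "escalate" might mean handing off to a human agent or offering a menu of options; either way, the user is never trapped in a loop.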

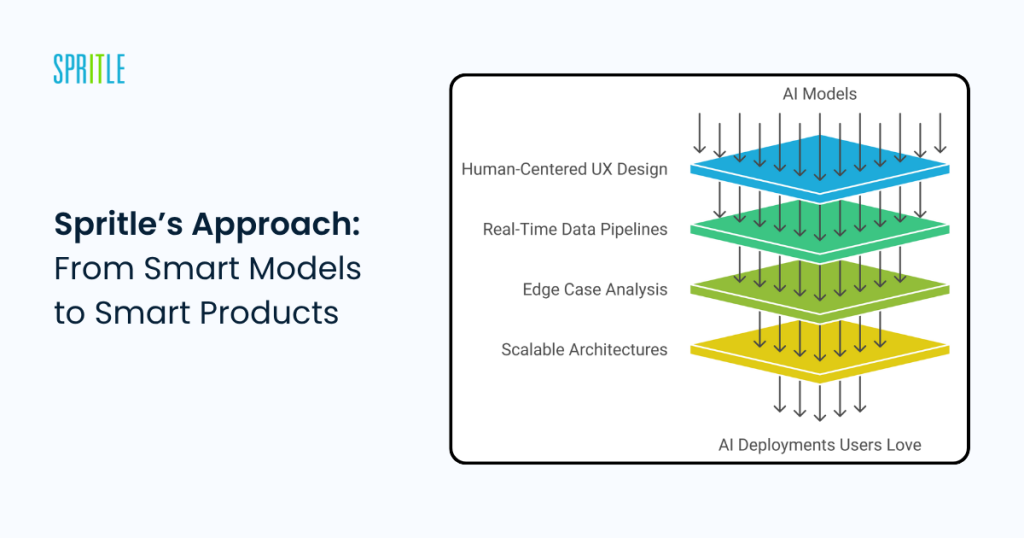

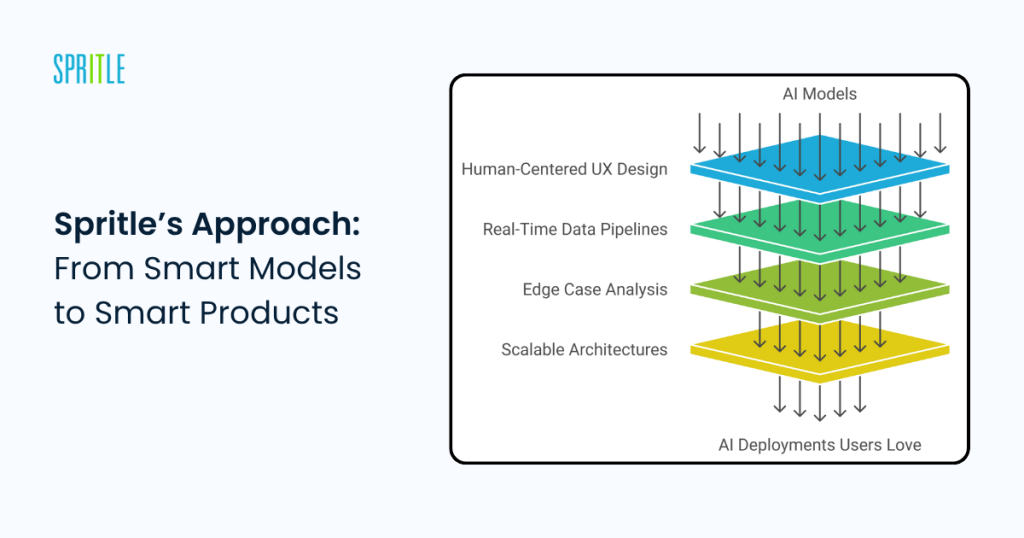

Spritle’s Approach: From Smart Models to Smart Products

At Spritle Software, we don’t just build models — we build AI products that work in the wild.

Our product strategy blends:

- Human-centered UX design for AI workflows

- Reliable, real-time data pipelines that keep models fresh

- Rigorous edge case analysis to safeguard user trust

- Scalable, maintainable architectures for long-term product health

We partner with teams to move from “AI demos” to “AI deployments that users love”.

The Shift in Mindset: Beyond Model Metrics

If you’re leading a SaaS or AI product team, here’s what to reflect on:

- Are you chasing model performance, or user satisfaction?

- Have you mapped the edge cases that could undermine trust?

- Is your data pipeline reliable enough to keep AI predictions valid six months from now?

- Does your UX explain AI behavior, or leave users guessing why the AI decided what it did?

The teams that win in AI products are not the ones with the smartest models — they’re the ones with the best product thinking.

The Takeaway: Users Don’t Buy AI — They Buy Outcomes

At the end of the day, your customers don’t pay for a model. They pay for efficiency, clarity, reduced workload, and better decisions.

The real product is the experience, the outcome, and the trust you deliver.

Don’t fall into the “model accuracy trap.” Focus on building products that solve real problems, respect user time, and handle messiness gracefully.

Final Thought: From Model Builders to Problem Solvers

The next time your team celebrates a high-accuracy model, take a step back. Ask the harder question:

“Is this product good enough to make someone’s day easier?”

Because that’s where AI wins — not in test scores, but in real-life smiles.

Ready to turn your smart model into a smart product? We’re here to help.