The explosive growth of artificial intelligence, particularly large language models (LLMs), has changed how businesses operate, from automating customer service to enhancing data analysis. Yet as enterprises integrate AI into core workflows, a persistent challenge remains: how to connect these models to real-world data sources securely and efficiently, without custom, fragmented integrations. The Model Context Protocol (MCP), introduced by Anthropic in November 2024, is one proposed answer: an open standard designed to act as a universal bridge between AI agents and external systems. Often compared to USB-C for its plug-and-play potential, MCP aims to standardize these connections so that models can access fresh, relevant data on demand. But is it truly the missing standard that could reshape AI infrastructure? This article examines MCP’s origins, technical workings, advantages, limitations, real-world applications, and future trajectory, drawing on insights from industry leaders and early implementations as of mid-2025.

Origins and Evolution of MCP

MCP’s development stems from a fundamental limitation in AI systems: their isolation from dynamic, enterprise-grade data. LLMs typically rely on pre-trained knowledge or on retrieval-augmented generation (RAG), which often involves embedding data into vector databases, a process that is computationally intensive and prone to staleness as the source data changes. Anthropic recognized this gap and released MCP as an open-source protocol to foster a collaborative ecosystem. Adoption accelerated in early 2025 when rivals such as OpenAI announced support for it, signaling broad industry interest.

The protocol follows a client-server model, with open-source SDKs in languages such as Python, TypeScript, Java, and C# to facilitate rapid development. Pre-built servers for tools like Google Drive, Slack, GitHub, and PostgreSQL let developers connect datasets quickly, and early adopters such as Block and Apollo have integrated it with their own systems. This positions MCP not as a proprietary tool but as a foundational layer, much as HTTP standardized web communication, potentially enabling agentic AI: systems that act on data autonomously rather than just process it.

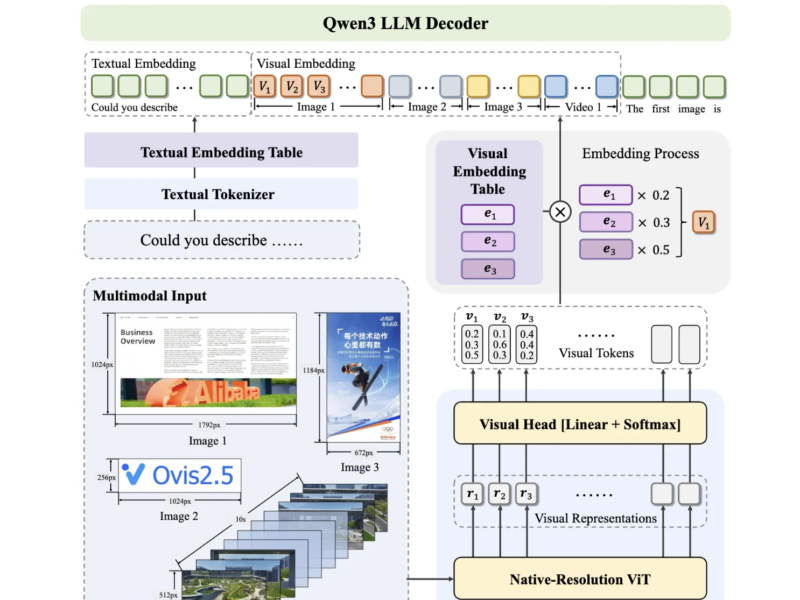

Detailed Mechanics: How MCP Works

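At its core, MCP uses a structured, bidirectional architecture for exchanging data between AI models and external sources. It defines three roles: the MCP host (the AI application, such as a chat assistant or IDE, that runs the model and coordinates requests), MCP clients (connectors inside the host, each maintaining a one-to-one connection with a single server), and MCP servers (programs that expose tools, resources, and prompts backed by external services or databases). Messages between clients and servers use JSON-RPC 2.0.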
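
In the MCP specification, the host is the AI application, each client holds a single connection to a single server, and servers expose the actual tools. That topology can be sketched as a toy, in-process Python model. The class names (ToyServer, ToyClient, ToyHost) and the example tools are hypothetical, and real clients talk to servers over a transport such as standard input/output or HTTP rather than through direct method calls. The point is only the shape: one client per server, and a host that merges the tool lists and routes each call to the client that owns the tool.

```python
from typing import Any, Callable, Dict, List


class ToyServer:
    """Hypothetical MCP-style server: exposes named tools backed by Python functions."""

    def __init__(self, name: str, tools: Dict[str, Callable[..., Any]]):
        self.name = name
        self.tools = tools

    def list_tools(self) -> List[str]:
        return sorted(self.tools)

    def call_tool(self, tool: str, arguments: Dict[str, Any]) -> Any:
        return self.tools[tool](**arguments)


class ToyClient:
    """Hypothetical client: holds exactly one connection, to exactly one server."""

    def __init__(self, server: ToyServer):
        self.server = server

    def list_tools(self) -> List[str]:
        return self.server.list_tools()

    def call_tool(self, tool: str, arguments: Dict[str, Any]) -> Any:
        return self.server.call_tool(tool, arguments)


class ToyHost:
    """Hypothetical host (the AI application): manages clients and routes tool calls."""

    def __init__(self, clients: List[ToyClient]):
        self.routes: Dict[str, ToyClient] = {}
        for client in clients:
            for tool in client.list_tools():
                if tool in self.routes:
                    # This toy rejects name clashes; a real host might prefix names instead.
                    raise ValueError(f"duplicate tool name: {tool}")
                self.routes[tool] = client

    def available_tools(self) -> List[str]:
        # The merged list the host would describe to the model.
        return sorted(self.routes)

    def dispatch(self, tool: str, arguments: Dict[str, Any]) -> Any:
        # Route the model's chosen tool to the client connected to the owning server.
        return self.routes[tool].call_tool(tool, arguments)


crm = ToyServer("crm", {"get_customer": lambda customer_id: {"id": customer_id, "tier": "gold"}})
chat = ToyServer("chat", {"post_message": lambda channel, text: f"posted to {channel}: {text}"})
host = ToyHost([ToyClient(crm), ToyClient(chat)])

print(host.available_tools())  # ['get_customer', 'post_message']
print(host.dispatch("get_customer", {"customer_id": 42}))  # {'id': 42, 'tier': 'gold'}
```

Keeping routing in the host means each server only needs to know about its own tools, which is what makes servers reusable across different host applications.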

Step-by-Step Process

- Tool Discovery and Description: The client asks each server for its available tools (a tools/list request), and each tool comes back with a name, a description, and a JSON Schema for its parameters. The host passes these descriptions to the model so that the LLM knows which actions are possible, such as querying a CRM or executing a code snippet.

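- Request Routing: When the model decides on an action, say retrieving customer data from a Salesforce instance, the host hands the request to the client connected to the relevant server, which sends it as a standardized MCP call (a tools/call request naming the tool and its arguments). For remote servers reached over HTTP, the specification defines an optional authorization flow based on OAuth 2.1 so that only authorized clients gain access; local servers are typically launched by the host and reached over standard input/output, with credentials taken from the environment.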

- Data Retrieval and Validation: The server fetches the data, applies its own logic (for example, error handling or filtering), and returns structured results. Because data is fetched at request time rather than pre-indexed, results reflect the current state of the source instead of a possibly stale embedding index.

- Context Integration and Response: The host feeds the retrieved data back to the model, which generates a response. Grounding the output in retrieved information can reduce hallucinations, although the protocol itself does not verify what the model says.
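
At the wire level, MCP messages are JSON-RPC 2.0, and tool discovery and invocation use the tools/list and tools/call methods. The sketch below is a minimal, in-process handler for just those two methods, assuming a single hypothetical tool, lookup_order, backed by a hard-coded dictionary. It omits the initialize handshake, transports, and authorization that a real server needs, and the result shape (a content list of text items plus an isError flag) follows our reading of the specification rather than any particular SDK.

```python
import json
from typing import Any, Dict

# Hypothetical tool definition: name, description, and a JSON Schema for its inputs.
TOOLS = [
    {
        "name": "lookup_order",
        "description": "Return the status of an order by its ID.",
        "inputSchema": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
    }
]

# Hypothetical backing data standing in for a live database.
ORDERS = {"A-100": "shipped", "A-101": "processing"}


def handle(raw: str) -> str:
    """Handle one JSON-RPC 2.0 request string and return the response string."""
    req: Dict[str, Any] = json.loads(raw)
    method, req_id = req.get("method"), req.get("id")

    if method == "tools/list":
        result: Dict[str, Any] = {"tools": TOOLS}
    elif method == "tools/call":
        params = req.get("params") or {}
        if params.get("name") != "lookup_order":
            error = {"code": -32602, "message": f"Unknown tool: {params.get('name')}"}
            return json.dumps({"jsonrpc": "2.0", "id": req_id, "error": error})
        order_id = (params.get("arguments") or {}).get("order_id")
        status = ORDERS.get(order_id)
        if status is None:
            # Tool-level failures go inside the result so the model can see and react to them.
            text = f"No order {order_id}"
            result = {"content": [{"type": "text", "text": text}], "isError": True}
        else:
            text = f"Order {order_id} is {status}"
            result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        # Standard JSON-RPC error code for an unsupported method.
        error = {"code": -32601, "message": "Method not found"}
        return json.dumps({"jsonrpc": "2.0", "id": req_id, "error": error})

    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})


listing = json.loads(handle('{"jsonrpc": "2.0", "id": 1, "method": "tools/list"}'))
print([tool["name"] for tool in listing["result"]["tools"]])  # ['lookup_order']

call = {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
        "params": {"name": "lookup_order", "arguments": {"order_id": "A-100"}}}
print(json.loads(handle(json.dumps(call)))["result"]["content"][0]["text"])  # Order A-100 is shipped
```

Reporting a missing order as a result with isError set, rather than as a protocol error, lets the model read the failure and try a different argument.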

MCP connections are stateful sessions, and a host can chain calls across several servers, allowing complex tasks such as creating a GitHub repository, updating a database, and notifying a team via Slack in sequence. Because each tool advertises a JSON Schema for its inputs, the model gets an explicit contract for parameter names and types, which can reduce failed calls caused by parameter mismatches.
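
Because each tool publishes a JSON Schema for its inputs, a host or server can check a model-proposed call before executing it and return a precise error the model can correct. The sketch below checks only required fields, the additionalProperties: false case, and a few primitive type keywords; it is a deliberately tiny subset of JSON Schema for illustration, the create_repo schema is hypothetical, and a production system would use a complete validator library instead.

```python
from typing import Any, Dict, List

# A subset of JSON Schema "type" keywords mapped to Python types.
_PY_TYPES: Dict[str, Any] = {
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
    "object": dict,
    "array": list,
}


def check_arguments(schema: Dict[str, Any], arguments: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the call passes this minimal check."""
    problems: List[str] = []
    for field in schema.get("required", []):
        if field not in arguments:
            problems.append(f"missing required argument '{field}'")

    properties = schema.get("properties", {})
    for name, value in arguments.items():
        if name not in properties:
            # JSON Schema allows extra properties unless additionalProperties is false.
            if schema.get("additionalProperties") is False:
                problems.append(f"unexpected argument '{name}'")
            continue
        expected = properties[name].get("type")
        # Only single string type keywords from the subset above are checked.
        py_type = _PY_TYPES.get(expected) if isinstance(expected, str) else None
        if py_type is None:
            continue
        wrong_type = not isinstance(value, py_type)
        # bool is a subclass of int in Python, so booleans must not pass as numbers.
        if expected in ("integer", "number") and isinstance(value, bool):
            wrong_type = True
        if wrong_type:
            problems.append(f"argument '{name}' should be of type {expected}")
    return problems


# Hypothetical schema for a repository-creation tool.
CREATE_REPO_SCHEMA = {
    "type": "object",
    "properties": {"name": {"type": "string"}, "private": {"type": "boolean"}},
    "required": ["name"],
    "additionalProperties": False,
}

print(check_arguments(CREATE_REPO_SCHEMA, {"name": "demo", "private": True}))  # []
print(check_arguments(CREATE_REPO_SCHEMA, {"private": "yes"}))
# ["missing required argument 'name'", "argument 'private' should be of type boolean"]
```

Returning every problem at once, rather than stopping at the first, gives the model enough information to fix the whole call in a single retry.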

Advantages: Why MCP Could Be the Missing Standard

MCP’s design addresses several pain points in AI infrastructure, offering tangible benefits for scalability and efficiency.

- Seamless Interoperability: By standardizing integrations, MCP reduces the need for bespoke connectors. Enterprises can expose diverse systems, from ERPs to knowledge bases, as MCP servers that are reusable across models and departments. This reuse can accelerate deployment; some early pilot reports claim integration times up to 50% faster.

- Enhanced Accuracy and Reduced Hallucinations: LLMs often fabricate responses when they lack context; MCP counters this by delivering precise, current data. In legal research, for instance, one widely cited study reported hallucination rates of 69-88% for general-purpose models answering specific legal queries, and grounding answers in authoritative sources is a primary way to lower such rates. MCP does not validate outputs itself, so checks that keep answers aligned with enterprise records have to be built into the host or server; such checks are what build trust in sectors like finance and healthcare.

- Robust Security and Compliance: MCP servers and hosts can enforce granular controls, such as role-based access and data redaction, to prevent leakage, a concern reportedly shared by 57% of consumers. In regulated industries, running MCP servers inside the enterprise boundary can help with adherence to GDPR, HIPAA, and CCPA, although the protocol does not by itself make a deployment compliant.

- Scalability for Agentic AI: MCP can underpin no-code or low-code agent builders, opening agent development to non-technical users. Surveys reportedly indicate that 60% of enterprises plan to adopt agents within a year, and MCP supports the multi-step workflows, such as automated reporting or customer routing, that such agents require.
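
MCP itself does not define roles or redaction rules; controls like these are typically implemented inside the server or the systems behind it, so that sensitive values never reach the model. A minimal sketch of such a tool handler, assuming a hypothetical get_patient tool, a caller role already established by authentication, and an illustrative per-role list of visible fields:

```python
from typing import Any, Dict, Set

# Hypothetical policy: roles allowed to call the tool, and the fields each role may see unredacted.
ALLOWED_ROLES: Set[str] = {"clinician", "analyst"}
UNREDACTED_FIELDS: Dict[str, Set[str]] = {
    "clinician": {"patient_id", "name", "diagnosis"},
    "analyst": {"patient_id", "diagnosis"},
}

# Hypothetical record store standing in for a clinical database.
RECORDS = {"p-1": {"patient_id": "p-1", "name": "Jane Doe", "diagnosis": "asthma"}}


def get_patient(role: str, patient_id: str) -> Dict[str, Any]:
    """Return a record with every field the caller's role may not see replaced by a marker."""
    if role not in ALLOWED_ROLES:
        raise PermissionError(f"role '{role}' may not call get_patient")
    record = RECORDS[patient_id]
    visible = UNREDACTED_FIELDS[role]
    return {key: (value if key in visible else "[REDACTED]") for key, value in record.items()}


print(get_patient("analyst", "p-1"))
# {'patient_id': 'p-1', 'name': '[REDACTED]', 'diagnosis': 'asthma'}
```

Redacting by allow-list (fields a role may see) rather than deny-list means a newly added column stays hidden until someone explicitly grants access to it.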

Potential cost benefits include lower compute spend, since data queried live does not need to be embedded and re-indexed, and better ROI through fewer integration failures.

Real-World Applications and Case Studies

MCP is already being applied across industries. In financial services, it can ground LLMs in proprietary data for fraud detection, reducing errors by supplying compliant, real-time context. Healthcare providers use it to query patient records while limiting exposure of PII, supporting HIPAA compliance while enabling personalized insights. Manufacturing firms use MCP for troubleshooting, pulling from technical documentation to minimize downtime.

Early adopters like Replit and Sourcegraph integrate it for context-aware coding, where agents access live codebases to produce working code with fewer iterations. Block uses MCP to build agentic systems that take over mechanical tasks so that people can focus on creative work, and has emphasized the protocol’s open-source ethos. These cases highlight MCP’s role in moving AI from experiments to production-grade deployments, with reportedly more than 300 enterprises adopting similar frameworks by mid-2025.

Future Implications: Toward a Standardized AI Ecosystem

As AI infrastructure takes on multicloud-style complexity, MCP could become the linchpin for hybrid environments, fostering collaboration much as cloud standards have. With thousands of open-source servers available and support announced by Google and others, it is well positioned for broad adoption. Success, however, hinges on mitigating risks such as prompt injection through tool outputs and over-permissioned servers, and on stronger governance, potentially through community-driven refinements of the specification.

In summary, MCP represents a critical advancement, bridging AI’s isolation from real data. While not flawless, its potential to standardize connections makes it a strong candidate for the missing standard in AI infrastructure, empowering more reliable, scalable, and secure applications. As the ecosystem matures, enterprises that adopt it early may gain a competitive edge in an increasingly agentic world.