Introduction

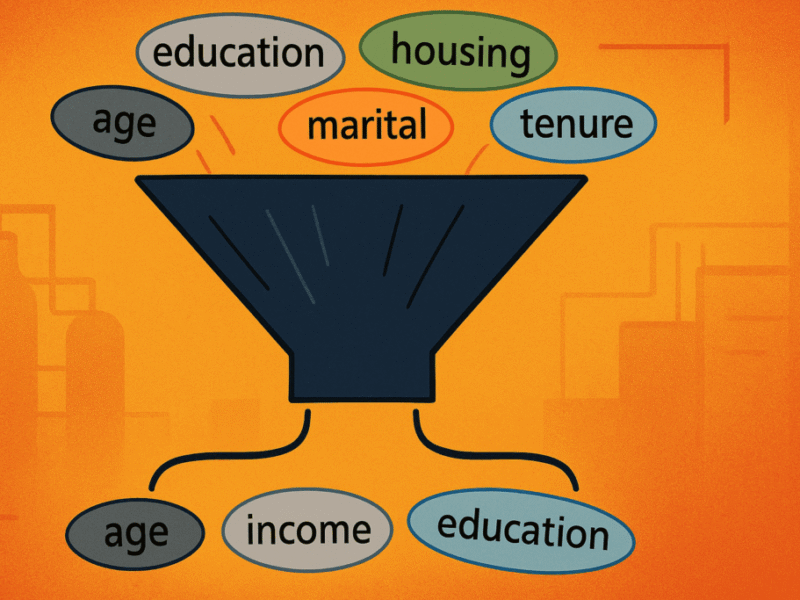

Building internal tools or AI-powered applications the “traditional” way throws developers into a maze of repetitive, error-prone tasks. First, they must spin up a dedicated Postgres instance, configure networking, backups, and monitoring, and then spend hours (or days) plumbing that database into the front-end framework they’re using. On top of that, they have to write custom authentication flows, map granular permissions, and keep those security controls in sync across the UI, API layer, and database. Each piece lives in a different environment, sometimes a managed cloud service and sometimes a self-hosted VM, so developers also juggle disparate deployment pipelines, environment variables, and credential stores. The result is a fragmented stack where a single change, like a schema migration or a new role, ripples through multiple systems, demanding manual updates, extensive testing, and constant coordination. All of this overhead distracts developers from the real value-add: building the product’s core features and intelligence.

With Databricks Lakebase and Databricks Apps, the entire application stack sits together, alongside the lakehouse. Lakebase is a fully managed Postgres database that offers low-latency reads and writes, integrated with the same underlying lakehouse tables that power your analytics and AI workloads. Databricks Apps supplies a serverless runtime for the UI, along with built-in authentication, fine-grained permissions, and governance controls that are automatically applied to the same data that Lakebase serves. This makes it easy to build and deploy apps that combine transactional state, analytics, and AI without stitching together multiple platforms, synchronizing databases, replicating pipelines, or reconciling security policies across systems.

Why Lakebase + Databricks Apps

Lakebase and Databricks Apps work together to simplify full-stack development on the Databricks platform:

- Lakebase gives you a fully managed Postgres database with fast reads, writes, and updates, plus modern features like branching and point-in-time recovery.

- Databricks Apps provides the serverless runtime for your application frontend, with built-in identity, access control, and integration with Unity Catalog and other lakehouse components.

By combining the two, you can build interactive tools that store and update state in Lakebase, access governed data in the lakehouse, and serve everything through a secure, serverless UI, all without managing separate infrastructure. In the example below, we’ll show how to build a simple holiday request approval app using this setup.

Getting Started: Build a Transactional App with Lakebase

This walkthrough shows how to create a simple Databricks App that helps managers review and approve holiday requests from their team. The app is built with Databricks Apps and uses Lakebase as the backend database to store and update the requests.

Here’s what the solution covers:

- Provision a Lakebase database: set up a serverless Postgres OLTP database with a few clicks.

- Create a Databricks App: build an interactive app using a Python framework (like Streamlit or Dash) that reads from and writes to Lakebase.

- Configure schema, tables, and access controls: create the necessary tables and assign fine-grained permissions to the app using the app’s client ID.

- Securely connect and interact with Lakebase: use the Databricks SDK and SQLAlchemy to securely read from and write to Lakebase from your app code.

The walkthrough is designed to get you started quickly with a minimal working example. Later, you can extend it with more advanced configuration.

Step 1: Provision Lakebase

Before building the app, you’ll need to create a Lakebase database. To do this, go to the Compute tab, select OLTP Database, and provide a name and size. This provisions a serverless Lakebase instance. In this example, our database instance is called lakebase-demo-instance.

Step 2: Create a Databricks App and Add Database Access

Now that we have a database, let’s create the Databricks App that will connect to it. You can start from a blank app or choose a template (e.g., Streamlit or Flask). After naming your app, add the database as a resource. In this example, the pre-created databricks_postgres database is selected.

Adding the Database resource automatically:

- Grants the app CONNECT and CREATE privileges

- Creates a Postgres role tied to the app’s client ID

This role will later be used to grant table-level access.
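If you want to confirm what the database resource set up, you can query the Postgres catalog for the app’s role. The sketch below is an illustration, not part of the walkthrough: it assumes the role name equals the app’s DATABRICKS_CLIENT_ID value (the value Step 3 uses in its GRANT statements) and that you run the query through a psycopg-style driver, which accepts %(name)s placeholders. `ROLE_CHECK_SQL` and `role_check_query` are hypothetical names; `pg_roles`, `has_database_privilege()`, and `current_database()` are standard Postgres.

```python
import os

# Catalog query: does the app's role exist, and does it hold CONNECT and
# CREATE on the current database? The %(role)s placeholder is psycopg's
# named parameter style (an assumption about the driver you use).
ROLE_CHECK_SQL = """
SELECT r.rolname,
       has_database_privilege(r.rolname, current_database(), 'CONNECT') AS can_connect,
       has_database_privilege(r.rolname, current_database(), 'CREATE')  AS can_create
FROM pg_roles AS r
WHERE r.rolname = %(role)s
"""


def role_check_query(client_id: str) -> tuple[str, dict[str, str]]:
    """Pair the query with its parameters; assumes the role name equals the client ID."""
    return ROLE_CHECK_SQL, {"role": client_id}


if __name__ == "__main__":
    sql, params = role_check_query(os.environ.get("DATABRICKS_CLIENT_ID", "<your-app-client-id>"))
    print(sql, params)
```

With a psycopg cursor, `cur.execute(*role_check_query(client_id))` should return one row with both flags true; an empty result suggests the role has not been created yet.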

Step 3: Create a Schema, Table, and Set Permissions

With the database provisioned and the app connected, you can now define the schema and table the app will use.

1. Retrieve the App’s client ID

From the app’s Environment tab, copy the value of the DATABRICKS_CLIENT_ID variable. You’ll need this for the GRANT statements.

2. Open the Lakebase SQL editor

Go to your Lakebase instance and click New Query. This opens the SQL editor with the database endpoint already selected.

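3. Run SQL that creates the schema and table and grants the app’s Postgres role, identified by the client ID, access to them.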
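As a hedged sketch of what this step involves, the Python helper below generates statements you could paste into the SQL editor: create a schema and a holiday request table, then grant the app’s role USAGE on the schema and SELECT and UPDATE on the table. The schema name `holidays`, the table name `holiday_requests`, the column layout, and the assumption that the app only reads and updates rows are all hypothetical. The client ID is double-quoted because it is typically a UUID containing hyphens, which Postgres does not allow in unquoted identifiers.

```python
import os

# Hypothetical names for illustration.
SCHEMA = "holidays"
TABLE = "holiday_requests"


def quote_ident(name: str) -> str:
    """Quote a Postgres identifier, doubling any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def setup_statements(client_id: str, schema: str = SCHEMA, table: str = TABLE) -> list[str]:
    """Return the DDL plus the GRANTs that give the app's role read/update access."""
    role = quote_ident(client_id)  # hyphens in the client ID require quoting
    qschema = quote_ident(schema)
    qtable = f"{qschema}.{quote_ident(table)}"
    return [
        f"CREATE SCHEMA IF NOT EXISTS {qschema};",
        f"""CREATE TABLE IF NOT EXISTS {qtable} (
    request_id    SERIAL PRIMARY KEY,
    employee_name TEXT NOT NULL,
    start_date    DATE NOT NULL,
    end_date      DATE NOT NULL,
    status        TEXT NOT NULL DEFAULT 'pending'
                  CHECK (status IN ('pending', 'approved', 'rejected')),
    manager_note  TEXT
);""",
        # USAGE lets the role see objects inside the schema...
        f"GRANT USAGE ON SCHEMA {qschema} TO {role};",
        # ...and SELECT/UPDATE is enough for an app that reads and updates rows.
        f"GRANT SELECT, UPDATE ON TABLE {qtable} TO {role};",
    ]


if __name__ == "__main__":
    client_id = os.environ.get("DATABRICKS_CLIENT_ID", "<your-app-client-id>")
    print("\n\n".join(setup_statements(client_id)))
```

Granting only what the app needs keeps its role from creating or dropping tables; if your app also submits new requests, it would need INSERT on the table and USAGE on the SERIAL column’s sequence.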

Please note that while using the SQL editor is a quick and effective way to perform this process, managing database schemas at scale is best handled by dedicated tools that support versioning, collaboration, and automation. Tools like Flyway and Liquibase allow you to track schema changes, integrate with CI/CD pipelines, and ensure your database structure evolves safely alongside your application code.

Step 4: Build the App

With permissions in place, you can now build your app. In this example, the app fetches holiday requests from Lakebase and lets a manager approve or reject them. Updates are written back to the same table.

Step 5: Connect Securely to Lakebase

Use SQLAlchemy and the Databricks SDK to connect your app to Lakebase with secure, token-based authentication. When you add the Lakebase resource, the PGHOST and PGUSER environment variables are set for the app automatically. The SDK handles token caching.
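A minimal sketch of this connection pattern, assuming SQLAlchemy 2.x with the psycopg (v3) driver, port 5432, the databricks_postgres database selected in Step 2, and `sslmode=require`. The URL carries an empty password; a listener on SQLAlchemy’s `do_connect` event supplies an OAuth token each time a new physical connection is opened. `lakebase_url` and `make_token_injector` are hypothetical helper names, and the token call in the wiring comment (`w.config.oauth_token().access_token`) should be checked against your Databricks SDK version.

```python
import os
from typing import Callable, Mapping
from urllib.parse import quote


def lakebase_url(env: Mapping[str, str] = os.environ,
                 database: str = "databricks_postgres",
                 port: int = 5432) -> str:
    """Build a SQLAlchemy URL from PGHOST/PGUSER with an empty password.

    The password is left blank on purpose: a do_connect listener fills in
    a short-lived OAuth token for each new connection.
    """
    user = quote(env["PGUSER"], safe="")  # percent-encode characters like '@'
    host = env["PGHOST"]
    return f"postgresql+psycopg://{user}:@{host}:{port}/{database}?sslmode=require"


def make_token_injector(get_token: Callable[[], str]):
    """Return a listener matching SQLAlchemy's do_connect(dialect, conn_rec, cargs, cparams)."""
    def provide_token(dialect, conn_rec, cargs, cparams):
        # cparams holds the keyword arguments passed to the driver's connect();
        # setting "password" overrides the empty password from the URL.
        cparams["password"] = get_token()
    return provide_token


# Wiring it up inside the app (requires sqlalchemy, psycopg, and databricks-sdk):
#
#   from databricks.sdk import WorkspaceClient
#   from sqlalchemy import create_engine, event
#
#   w = WorkspaceClient()
#   engine = create_engine(lakebase_url())
#   event.listen(engine, "do_connect",
#                make_token_injector(lambda: w.config.oauth_token().access_token))
```

Fetching the token at connect time rather than baking it into the URL means pooled connections opened later never reuse an expired credential.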

Step 6: Read and Update Data

Finally, add functions that read pending requests from the holiday request table and write the manager’s decision back to it.
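The sketch below shows one way to write a read function and an update function. To keep it runnable without a Lakebase instance, it swaps in Python’s built-in sqlite3 module as a local stand-in and uses an unqualified table name. Against Lakebase, you would use your schema-qualified table name, run the same statements through a SQLAlchemy engine configured as described in Step 5, wrap them in `sqlalchemy.text()` (which accepts the same `:name` placeholders) inside `with engine.begin() as conn:`, and drop the explicit commit, since `engine.begin()` commits on exit. The function names, columns, and lowercase status values are hypothetical.

```python
import sqlite3

# :name placeholders work in both sqlite3 and SQLAlchemy's text().
SELECT_PENDING = """
    SELECT request_id, employee_name, start_date, end_date, status
    FROM holiday_requests
    WHERE status = 'pending'
    ORDER BY start_date
"""

# The status = 'pending' guard stops a second click from overwriting a decision.
UPDATE_STATUS = """
    UPDATE holiday_requests
    SET status = :status, manager_note = :note
    WHERE request_id = :request_id AND status = 'pending'
"""

VALID_DECISIONS = {"approved", "rejected"}


def get_pending_requests(conn: sqlite3.Connection) -> list[tuple]:
    """Return all requests still awaiting a decision, earliest start date first."""
    return conn.execute(SELECT_PENDING).fetchall()


def update_request_status(conn: sqlite3.Connection, request_id: int,
                          status: str, note: str = "") -> bool:
    """Record a manager's decision; return False if the request was not pending."""
    if status not in VALID_DECISIONS:
        raise ValueError(f"status must be one of {sorted(VALID_DECISIONS)}")
    cur = conn.execute(UPDATE_STATUS,
                       {"status": status, "note": note, "request_id": request_id})
    conn.commit()
    return cur.rowcount == 1


def create_demo_db() -> sqlite3.Connection:
    """In-memory stand-in for the Lakebase table, seeded with two sample requests."""
    conn = sqlite3.connect(":memory:")
    conn.execute("""
        CREATE TABLE holiday_requests (
            request_id    INTEGER PRIMARY KEY,
            employee_name TEXT NOT NULL,
            start_date    TEXT NOT NULL,
            end_date      TEXT NOT NULL,
            status        TEXT NOT NULL DEFAULT 'pending',
            manager_note  TEXT
        )
    """)
    conn.executemany(
        "INSERT INTO holiday_requests (employee_name, start_date, end_date) VALUES (?, ?, ?)",
        [("Ana", "2025-12-22", "2025-12-26"), ("Ben", "2026-01-05", "2026-01-09")],
    )
    conn.commit()
    return conn


if __name__ == "__main__":
    conn = create_demo_db()
    first = get_pending_requests(conn)[0]
    print(update_request_status(conn, first[0], "approved", "Enjoy the break"))
    print(get_pending_requests(conn))
```

In a Streamlit or Dash page, the read function would populate the list of pending requests, and the approve and reject buttons would call the update function with the selected request ID.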

Functions like these can be combined with frameworks such as Streamlit, Dash, and Flask to pull data from Lakebase and visualize it in your app. To ensure all necessary dependencies are installed, list the required packages in your app’s requirements.txt file: the Databricks SDK (databricks-sdk), SQLAlchemy, a Postgres driver such as psycopg, and your UI framework.

Extending the Lakehouse with Lakebase

Lakebase adds transactional capabilities to the lakehouse by integrating a fully managed OLTP database directly into the platform. This reduces the need for external databases or complex pipelines when building applications that require both reads and writes.

Because Lakebase is natively integrated with Databricks, it benefits from the platform’s data synchronization, identity-based authentication, and network security, just like other data assets in the lakehouse. You don’t need custom ETL or reverse ETL to move data between systems. For example:

- You can serve analytical features back to applications in real time (available today) using the Online Feature Store and synced tables.

- You can synchronize operational data with Delta tables, e.g. for historical data analysis (in Private Preview).

These capabilities make it easier to support production-grade use cases like:

- Updating state in AI agents

- Managing real-time workflows (e.g., approvals, task routing)

- Feeding live data into recommendation systems or pricing engines

Lakebase is already being used across industries for applications including personalized recommendations, chatbot applications, and workflow management tools.

What’s Next

If you’re already using Databricks for analytics and AI, Lakebase makes adding real-time interactivity to your applications easier. With support for low-latency transactions, built-in security, and tight integration with Databricks Apps, you can go from prototype to production without leaving the platform.

Summary

Lakebase provides a transactional Postgres database that works seamlessly with Databricks Apps and integrates easily with lakehouse data. It simplifies the development of full-stack data and AI applications by eliminating the need for external OLTP systems or manual integration steps.

In this example, we showed how to:

- Set up a Lakebase instance and configure access

- Create a Databricks App that reads from and writes to Lakebase

- Use secure, token-based authentication with minimal setup

- Build a basic app for managing holiday requests using Python and SQL

Lakebase is now in Public Preview. You can try it today directly from your Databricks workspace. For details on usage and pricing, see the Lakebase and Apps documentation.