exciting to see the GenAI industry beginning to move toward standardisation. We might be witnessing something similar to the early days of the internet, when HTTP (HyperText Transfer Protocol) first emerged. When Tim Berners-Lee developed HTTP in 1990, it provided a simple yet extensible protocol that transformed the internet from a specialised research network into the globally accessible World Wide Web. By 1993, web browsers like Mosaic had made HTTP so popular that web traffic quickly outpaced other systems.

One promising step in this direction is MCP (Model Context Protocol), developed by Anthropic. MCP is gaining popularity with its attempts to standardise the interactions between LLMs and external tools or data sources. More recently (the first commit is dated April 2025), a new protocol called ACP (Agent Communication Protocol) appeared. It complements MCP by defining the ways in which agents can communicate with each other.

In this article, I would like to discuss what ACP is, why it can be helpful and how it can be used in practice. We will build a multi-agent AI system for interacting with data.

ACP overview

Before jumping into practice, let’s take a moment to understand the theory behind ACP and how it works under the hood.

ACP (Agent Communication Protocol) is an open protocol designed to address the growing challenge of connecting AI agents, applications, and humans. The current GenAI industry is quite fragmented, with different teams building agents in isolation using various, often incompatible frameworks and technologies. This fragmentation slows down innovation and makes it difficult for agents to collaborate effectively.

To address this challenge, ACP aims to standardise communication between agents via RESTful APIs. The protocol is framework- and technology-agnostic, meaning it can be used with any agentic framework, such as LangChain, CrewAI, smolagents, or others. This flexibility makes it easier to build interoperable systems where agents can seamlessly work together, regardless of how they were originally developed.
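Because ACP runs over plain HTTP, any client that can send JSON can, in principle, talk to an ACP agent. The sketch below uses only the Python standard library to build (but not send) the kind of request a client might issue to start a synchronous run. The `/runs` path and the `agent_name`, `input`, `parts`, `content_type`, `content` and `mode` fields reflect my reading of the ACP REST specification and should be treated as assumptions; check them against the spec version you target.

```python
import json
import urllib.request


def build_run_request(base_url: str, agent_name: str, text: str) -> urllib.request.Request:
    """Build (without sending) an HTTP request that starts a synchronous ACP run.

    Assumption: the endpoint path and payload field names follow the ACP REST
    spec as I understand it; verify them for your ACP version.
    """
    payload = {
        "agent_name": agent_name,
        # one message containing a single plain-text part
        "input": [{"parts": [{"content_type": "text/plain", "content": text}]}],
        "mode": "sync",  # wait for the run to finish and return its output
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/runs",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_run_request("http://localhost:8001", "db_agent", "select 1 as test")
print(req.get_method(), req.full_url)  # POST http://localhost:8001/runs
```

Passing the request to `urllib.request.urlopen()` would actually send it to a running agent server; the SDK client used later in this article hides these details.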

This protocol has been developed as an open standard under the Linux Foundation, alongside BeeAI (its reference implementation). One of the key points the team emphasises is that ACP is openly governed and shaped by the community, rather than a group of vendors.

What benefits can ACP bring?

- Easily replaceable agents. With the current pace of innovation in the GenAI space, new cutting-edge technologies are emerging all the time. ACP enables agents to be swapped in production seamlessly, reducing maintenance costs and making it easier to adopt the most advanced tools when they become available.

- Enabling collaboration between multiple agents built on different frameworks. As we know from people management, specialisation often leads to better results. The same applies to agentic systems: a group of agents, each focused on a specific task (like writing Python code or researching the web), can often outperform a single agent trying to do everything. ACP makes it possible for such specialised agents to communicate and work together, even if they are built using different frameworks or technologies.

- New opportunities for partnerships. With a unified standard for agent communication, it would be easier for agents to collaborate, sparking new partnerships between teams within a company or even between different companies. Imagine a world where your smart home agent notices the temperature dropping unusually, determines that the heating system has failed, and checks with your utility provider’s agent to confirm there are no planned outages. Finally, it books a technician, coordinating the visit with your Google Calendar agent to make sure you’re home. It may sound futuristic, but with ACP, it could be closer than it seems.

We’ve covered what ACP is and why it matters. The protocol looks quite promising. So, let’s put it to the test and see how it works in practice.

ACP in practice

Let’s try ACP in a classic “talk to data” use case. To take advantage of ACP being framework-agnostic, we will build ACP agents with different frameworks:

- SQL Agent with CrewAI to compose SQL queries

- DB Agent with HuggingFace smolagents to execute those queries.

I won’t go into the details of each framework here, but if you’re curious, I’ve written in-depth articles on both of them:

– “Multi AI Agent Systems 101” about CrewAI,

– “Code Agents: The Future of Agentic AI” about smolagents.

Building the DB agent

Let’s start with the DB agent. As I mentioned earlier, ACP could be complemented by MCP. So, I will use tools from my analytics toolbox through the MCP server. You can find the MCP server implementation on GitHub. For a deeper dive and step-by-step instructions, check my previous article, where I covered MCP in detail.

The code itself is pretty straightforward: we initialise the ACP server and use the @server.agent() decorator to define our agent function. This function receives a list of messages as input and is an async generator that yields the response messages.
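To make that contract concrete without any dependencies, here is a toy registry with the same shape: a decorator registers an async generator under the function’s own name, and a run wraps the input text in a message and collects everything the agent yields. `ToyServer`, `Msg` and `Part` are hypothetical stand-ins written for illustration only; they are not the `acp_sdk` classes used in the real code that follows.

```python
import asyncio
from collections.abc import AsyncGenerator
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Part:
    """Hypothetical stand-in for acp_sdk.models.MessagePart."""
    content: str
    content_type: str = "text/plain"


@dataclass
class Msg:
    """Hypothetical stand-in for acp_sdk.models.Message."""
    parts: list[Part] = field(default_factory=list)


class ToyServer:
    """Hypothetical in-process registry; NOT acp_sdk.server.Server."""

    def __init__(self) -> None:
        self.agents: dict[str, Callable] = {}

    def agent(self, name: Optional[str] = None):
        # Decorator factory: register the function under `name` or its own name.
        def register(fn: Callable) -> Callable:
            self.agents[name or fn.__name__] = fn
            return fn
        return register

    async def run_sync(self, agent_name: str, text: str) -> list[Msg]:
        # Wrap the text in a single message and collect every message the agent yields.
        agent_fn = self.agents[agent_name]
        return [msg async for msg in agent_fn([Msg(parts=[Part(content=text)])])]


server = ToyServer()


@server.agent()
async def echo_agent(input: list[Msg]) -> AsyncGenerator[Msg, None]:
    """Reply with the first part of the first message, upper-cased."""
    question = input[0].parts[0].content
    yield Msg(parts=[Part(content=question.upper())])


output = asyncio.run(server.run_sync("echo_agent", "select 1"))
print(output[0].parts[0].content)  # SELECT 1
```

The real `Server` does similar bookkeeping but also serves the registered agents over HTTP, which is what lets a separate client process call them.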

from collections.abc import AsyncGenerator
from acp_sdk.models import Message, MessagePart
from acp_sdk.server import Context, RunYield, RunYieldResume, Server
from smolagents import LiteLLMModel, ToolCallingAgent, ToolCollection
import logging
from dotenv import load_dotenv
from mcp import StdioServerParameters

# load API keys (e.g. OPENAI_API_KEY) from a .env file
load_dotenv()

# initialise ACP server
server = Server()

# initialise LLM
model = LiteLLMModel(
    model_id="openai/gpt-4o-mini",
    max_tokens=2048
)

# define config for the MCP server to connect to (adjust the path to your local checkout)
server_parameters = StdioServerParameters(
    command="uv",
    args=[
        "--directory",
        "/Users/marie/Documents/github/mcp-analyst-toolkit/src/mcp_server",
        "run",
        "server.py"
    ],
    env=None
)

@server.agent()
async def db_agent(input: list[Message], context: Context) -> AsyncGenerator[RunYield, RunYieldResume]:
    "This agent can execute SQL queries against the ClickHouse database."
    # start the MCP server and expose its tools to a smolagents ToolCallingAgent
    with ToolCollection.from_mcp(server_parameters, trust_remote_code=True) as tool_collection:
        agent = ToolCallingAgent(tools=[*tool_collection.tools], model=model)
        # the question (or SQL query) is the content of the first part of the first message
        question = input[0].parts[0].content
        response = agent.run(question)

    yield Message(parts=[MessagePart(content=str(response))])

if __name__ == "__main__":
    server.run(port=8001)

We will also need to set up a Python environment. I will be using the uv package manager for this.

uv init --name acp-sql-agent
uv venv
source .venv/bin/activate
uv add acp-sdk "smolagents[litellm]" python-dotenv mcp "smolagents[mcp]" ipykernel

Then, we can run the agent using the following command.

uv run db_agent.py

If everything is set up correctly, you will see a server running on port 8001. We will need an ACP client to verify that it’s working as expected. Bear with me, we will test it shortly.

Building the SQL agent

Before that, let’s build a SQL agent that will compose queries. We will use the CrewAI framework for this. Our agent will consult a knowledge base of example questions and queries when generating answers, so we will equip it with a RAG (Retrieval-Augmented Generation) tool.
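Conceptually, the retrieval step finds the reference questions closest to the user’s question and hands their queries to the LLM as examples. The sketch below substitutes simple word-count cosine similarity for the embedding model that RagTool actually uses (`text-embedding-ada-002` in the configuration below), and the two knowledge-base entries are hypothetical examples, not the contents of `clickhouse_queries.txt`.

```python
import math
import re
from collections import Counter

# Hypothetical (question, query) pairs standing in for the real knowledge base.
EXAMPLES = [
    ("How many active customers do we have?",
     "SELECT uniqExact(user_id) AS customers FROM ecommerce.users "
     "WHERE is_active = 1 format TabSeparatedWithNames"),
    ("What was the total revenue in 2024?",
     "SELECT sum(revenue) AS revenue FROM ecommerce.sessions "
     "WHERE toStartOfYear(action_date) = '2024-01-01' format TabSeparatedWithNames"),
]


def tokens(text: str) -> Counter:
    """Lower-cased word counts (a crude stand-in for an embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, k: int = 1) -> list[tuple[str, str]]:
    """Return the k example pairs whose questions are most similar to `question`."""
    q = tokens(question)
    ranked = sorted(EXAMPLES, key=lambda ex: cosine(q, tokens(ex[0])), reverse=True)
    return ranked[:k]


best_question, best_query = retrieve("How many customers did we have in May 2024?")[0]
print(best_question)  # How many active customers do we have?
```

Embeddings capture meaning far better than word overlap, but the flow is the same: score the stored examples, keep the top matches, and pass them to the LLM as context.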

First, we will initialise the RAG tool and load the reference file clickhouse_queries.txt. Next, we will create a CrewAI agent by specifying its role, goal and backstory. Finally, we’ll create a task and bundle everything together into a Crew object.
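The RagTool configuration below sets `chunk_size=1200` and `chunk_overlap=200`. Assuming both are measured in characters, the simplified splitter below shows what they mean: each chunk starts 1,000 characters after the previous one, so neighbouring chunks share 200 characters. The library’s own splitter may also respect separators such as newlines, so treat this as an illustration of the two parameters, not of its exact behaviour.

```python
def chunk_text(text: str, chunk_size: int = 1200, chunk_overlap: int = 200) -> list[str]:
    """Split text into fixed-size character windows that overlap by chunk_overlap."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be non-negative and smaller than chunk_size")
    step = chunk_size - chunk_overlap  # 1,000 with the default values
    chunks: list[str] = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reaches the end of the text
        start += step
    return chunks


sample = "".join(str(i % 10) for i in range(3000))  # 3,000 characters of dummy text
chunks = chunk_text(sample)
print([len(c) for c in chunks])  # [1200, 1200, 1000]
```

The overlap keeps a sentence that straddles a chunk boundary retrievable from at least one chunk, at the cost of storing some text twice.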

from crewai import Crew, Task, Agent, LLM
from crewai.tools import BaseTool
from crewai_tools import RagTool
from collections.abc import AsyncGenerator
from acp_sdk.models import Message, MessagePart
from acp_sdk.server import RunYield, RunYieldResume, Server
import json
import os
from datetime import datetime
from typing import Type
from pydantic import BaseModel, Field
import nest_asyncio

nest_asyncio.apply()

# config for RAG tool
config = {
    "llm": {
        "provider": "openai",
        "config": {
            "model": "gpt-4o-mini",
        }
    },
    "embedding_model": {
        "provider": "openai",
        "config": {
            "model": "text-embedding-ada-002"
        }
    }
}

# initialise tool
rag_tool = RagTool(
    config=config,
    chunk_size=1200,
    chunk_overlap=200)

rag_tool.add("clickhouse_queries.txt")

# initialise ACP server
server = Server()

# initialise LLM
llm = LLM(model="openai/gpt-4o-mini", max_tokens=2048)

@server.agent()
async def sql_agent(input: list[Message]) -> AsyncGenerator[RunYield, RunYieldResume]:
    "This agent knows the database schema and can return SQL queries to answer questions about the data."

    # create agent
    sql_agent = Agent(
        role="Senior SQL analyst",
        goal="Write SQL queries to answer questions about the e-commerce analytics database.",
        backstory="""
You are an expert in ClickHouse SQL queries with over 10 years of experience. You are familiar with the e-commerce analytics database schema and can write optimized queries to extract insights.

## Database Schema

You are working with an e-commerce analytics database containing the following tables:

### Table: ecommerce.users
**Description:** Customer information for the online shop
**Primary Key:** user_id
**Fields:**
- user_id (Int64) - Unique customer identifier (e.g., 1000004, 3000004)
- country (String) - Customer's country of residence (e.g., "Netherlands", "United Kingdom")
- is_active (Int8) - Customer status: 1 = active, 0 = inactive
- age (Int32) - Customer age in full years (e.g., 31, 72)

### Table: ecommerce.sessions
**Description:** User session data and transaction records
**Primary Key:** session_id
**Foreign Key:** user_id (references ecommerce.users.user_id)
**Fields:**
- user_id (Int64) - Customer identifier linking to users table (e.g., 1000004, 3000004)
- session_id (Int64) - Unique session identifier (e.g., 106, 1023)
- action_date (Date) - Session start date (e.g., "2021-01-03", "2024-12-02")
- session_duration (Int32) - Session duration in seconds (e.g., 125, 49)
- os (String) - Operating system used (e.g., "Windows", "Android", "iOS", "MacOS")
- browser (String) - Browser used (e.g., "Chrome", "Safari", "Firefox", "Edge")
- is_fraud (Int8) - Fraud indicator: 1 = fraudulent session, 0 = legitimate
- revenue (Float64) - Purchase amount in USD (0.0 for non-purchase sessions, >0 for purchases)

## ClickHouse-Specific Guidelines

1. **Use ClickHouse-optimized functions:**
   - uniqExact() for precise unique counts
   - uniqExactIf() for conditional unique counts
   - quantile() functions for percentiles
   - Date functions: toStartOfMonth(), toStartOfYear(), today()

2. **Query formatting requirements:**
   - Always end queries with "format TabSeparatedWithNames"
   - Use meaningful column aliases
   - Use proper JOIN syntax when combining tables
   - Wrap date literals in quotes (e.g., '2024-01-01')

3. **Performance considerations:**
   - Use appropriate WHERE clauses to filter data
   - Consider using HAVING for post-aggregation filtering
   - Use LIMIT when finding top/bottom results

4. **Data interpretation:**
   - revenue > 0 indicates a purchase session
   - revenue = 0 indicates a browsing session without purchase
   - is_fraud = 1 sessions should typically be excluded from business metrics unless specifically analyzing fraud

## Response Format

Provide only the SQL query as your answer. Include brief reasoning in comments if the query logic is complex.
""",
        verbose=True,
        allow_delegation=False,
        llm=llm,
        tools=[rag_tool],
        max_retry_limit=5
    )

    # create task
    task1 = Task(
        description=input[0].parts[0].content,
        expected_output="Reliable SQL query that answers the question based on the e-commerce analytics database schema.",
        agent=sql_agent
    )

    # create crew
    crew = Crew(agents=[sql_agent], tasks=[task1], verbose=True)

    # execute agent
    task_output = await crew.kickoff_async()
    yield Message(parts=[MessagePart(content=str(task_output))])

if __name__ == "__main__":
    server.run(port=8002)

We will also need to add any missing packages to uv before running the server.

uv add crewai crewai_tools nest-asyncio
uv run sql_agent.py

Now, the second agent is running on port 8002. With both servers up and running, it’s time to check whether they are working properly.

Calling an ACP agent with a client

Now that we’re ready to test our agents, we’ll use the ACP client to run them synchronously. To do that, we initialise a Client with the server URL and call its run_sync method, specifying the agent’s name and input.

import os
import nest_asyncio
nest_asyncio.apply()
from acp_sdk.client import Client
import asyncio

# Set your OpenAI API key here (or use environment variable)
# os.environ["OPENAI_API_KEY"] = "your-api-key-here"

async def example() -> None:
    # call the DB agent on port 8001 with a trivial query
    async with Client(base_url="http://localhost:8001") as client1:
        run1 = await client1.run_sync(
            agent="db_agent", input="select 1 as test"
        )
        print(' DB agent response:')
        print(run1.output[0].parts[0].content)

    # call the SQL agent on port 8002 with a natural-language question
    async with Client(base_url="http://localhost:8002") as client2:
        run2 = await client2.run_sync(
            agent="sql_agent", input="How many customers did we have in May 2024?"
        )
        print(' SQL agent response:')
        print(run2.output[0].parts[0].content)

if __name__ == "__main__":
    asyncio.run(example())

# DB agent response:
# 1
# SQL agent response:
# ```
# SELECT COUNT(DISTINCT user_id) AS total_customers
# FROM ecommerce.users
# WHERE is_active = 1
# AND user_id IN (
# SELECT DISTINCT user_id
# FROM ecommerce.sessions
# WHERE action_date >= '2024-05-01' AND action_date < '2024-06-01'
# )
# format TabSeparatedWithNames

We received the expected results from both servers, so everything seems to be working as intended.

💡Tip: You can check the full execution logs in the terminal where each server is running.

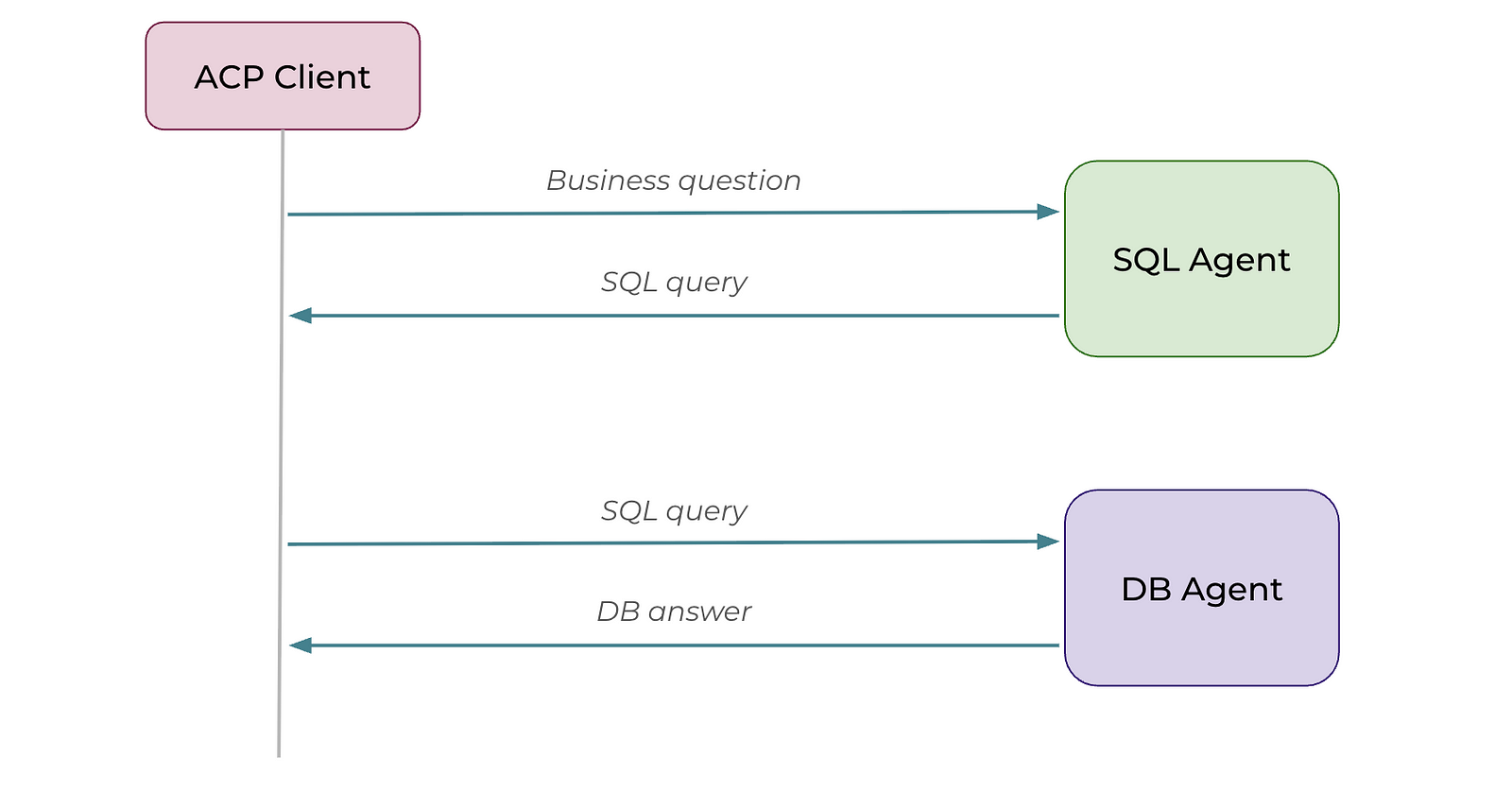
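The test script reads only `output[0].parts[0].content`, which silently ignores anything beyond the first part of the first message. If an agent yields several messages or parts, a small duck-typed helper like the hypothetical `output_text()` below can join them all. It only assumes that each message has `.parts` and each part has `.content`, as the objects used above do; `SimpleNamespace` objects stand in for real `Message` and `MessagePart` instances here.

```python
from types import SimpleNamespace


def output_text(output, sep: str = "\n") -> str:
    """Join the text content of every part of every message in a run's output."""
    return sep.join(
        str(part.content)
        for message in output
        for part in message.parts
        if part.content is not None
    )


# Stand-ins shaped like a run's output: two messages, three parts in total.
fake_output = [
    SimpleNamespace(parts=[SimpleNamespace(content="first"), SimpleNamespace(content="second")]),
    SimpleNamespace(parts=[SimpleNamespace(content="third")]),
]
print(output_text(fake_output))
```

With the real client, the call would be `output_text(run2.output)`.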

Chaining agents sequentially

To answer real questions from users, we need both agents to work together, so let’s chain them sequentially: we will first call the SQL agent and then pass the generated SQL query to the DB agent for execution.
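One practical wrinkle: the SQL agent replies in free text, which can include its reasoning and a markdown-fenced query (the output further down shows exactly that). The DB agent is itself an LLM agent and copes with this, but if you ever hand the query to a plain executor, it helps to pull out the fenced SQL first. `extract_sql()` is a hypothetical helper, not part of ACP or CrewAI; the fence string is built programmatically only so that the snippet can be displayed inside a markdown code block.

```python
import re

FENCE = "`" * 3  # three backticks, built programmatically to keep this snippet displayable

# Match an optional "sql" language tag after the opening fence, then capture lazily up to the closing fence.
FENCED_BLOCK = re.compile(
    re.escape(FENCE) + r"(?:sql)?[ \t]*\n?(.*?)" + re.escape(FENCE),
    re.DOTALL | re.IGNORECASE,
)


def extract_sql(reply: str) -> str:
    """Return the body of the first fenced block, or the whole reply if there is none."""
    match = FENCED_BLOCK.search(reply)
    return (match.group(1) if match else reply).strip()


reply = (
    "Thought: I need to count customers.\n"
    + FENCE + "sql\n"
    + "SELECT 1 AS test\n"
    + "FORMAT TabSeparatedWithNames\n"
    + FENCE
)
print(extract_sql(reply))
```

In the chaining code, this would mean passing `extract_sql(sql_query.output[0].parts[0].content)` to the DB agent instead of the raw reply.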

Here’s the code to chain the agents. It’s quite similar to what we used earlier to test each server individually. The main difference is that we now pass the output from the SQL agent directly into the DB agent.

import asyncio
from acp_sdk.client import Client

async def example() -> None:
    async with Client(base_url="http://localhost:8001") as db_agent, Client(base_url="http://localhost:8002") as sql_agent:
        question = 'How many customers did we have in May 2024?'

        # step 1: ask the SQL agent to compose a query
        sql_query = await sql_agent.run_sync(
            agent="sql_agent", input=question
        )
        print('SQL query generated by SQL agent:')
        print(sql_query.output[0].parts[0].content)

        # step 2: pass the SQL agent's output straight to the DB agent for execution
        answer = await db_agent.run_sync(
            agent="db_agent", input=sql_query.output[0].parts[0].content
        )
        print('Answer from DB agent:')
        print(answer.output[0].parts[0].content)

asyncio.run(example())

Everything worked smoothly, and we received the expected output.

SQL query generated by SQL agent:

Thought: I need to craft a SQL query to count the number of unique customers

who were active in May 2024 based on their sessions.

```sql

SELECT COUNT(DISTINCT u.user_id) AS active_customers

FROM ecommerce.users AS u

JOIN ecommerce.sessions AS s ON u.user_id = s.user_id

WHERE u.is_active = 1

AND s.action_date >= '2024-05-01'

AND s.action_date < '2024-06-01'

FORMAT TabSeparatedWithNames

```

Answer from DB agent:

234544

Router pattern

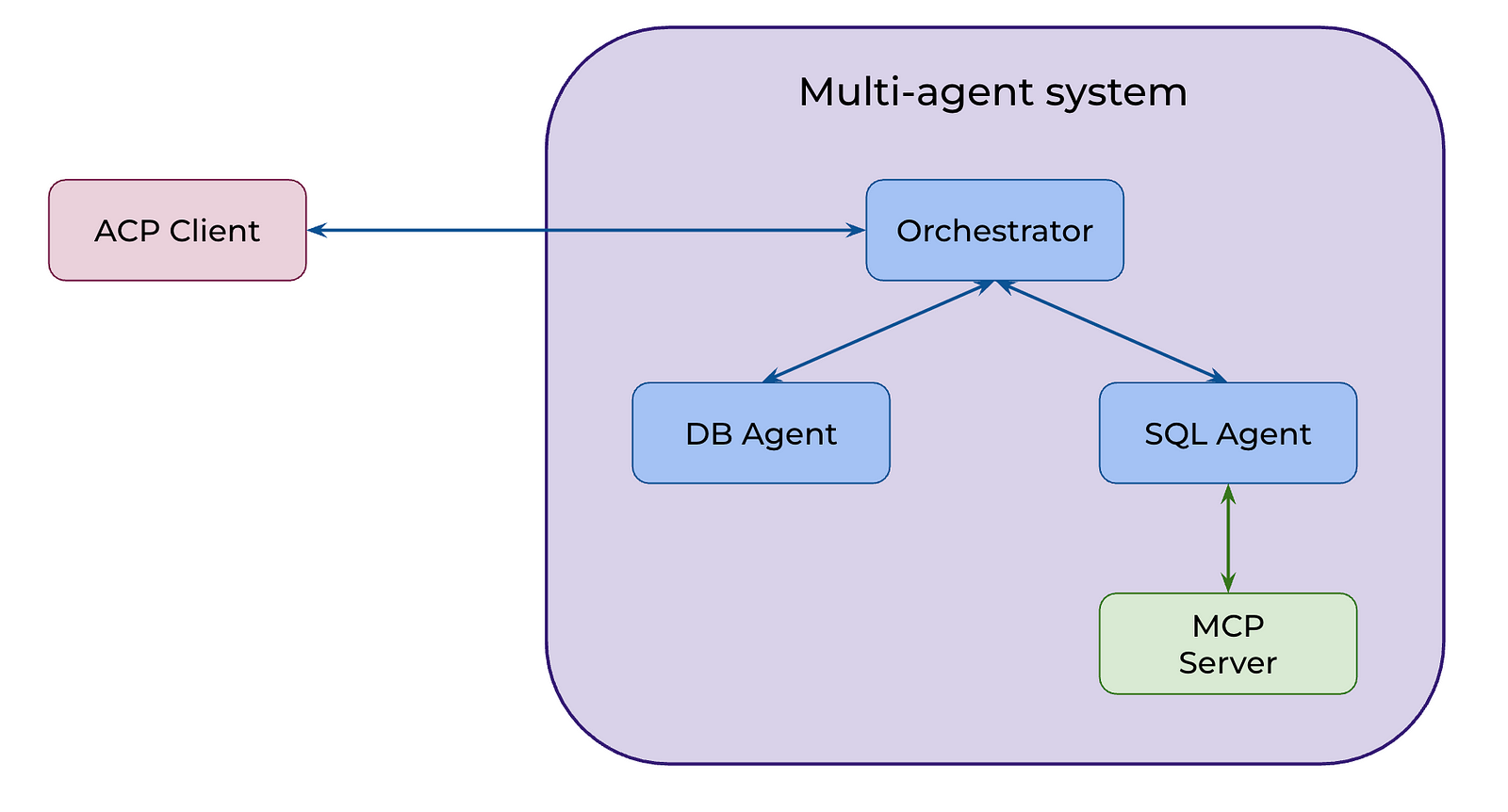

In some use cases, the path is static and well-defined, and we can chain agents directly as we did earlier. More often, however, we expect LLM agents to reason independently and decide which tools or agents to use to achieve a goal. For such cases, we will implement a router pattern using ACP: we will create a new agent (the orchestrator) that can delegate tasks to the DB and SQL agents.
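Stripped of the framework, the router pattern is a loop: a planner (the LLM, in the real system) looks at the question and at the tool results so far, and either picks the next tool to call or returns a final answer. In the sketch below, `scripted_planner`, `fake_sql_tool` and `fake_db_tool` are hypothetical stand-ins for the LLM’s reasoning and for the two ACP agents; BeeAI’s ReActAgent, used later, implements a much richer version of this loop.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Step:
    tool: Optional[str]  # name of the tool to call next, or None to finish
    argument: str        # tool input, or the final answer when tool is None


def run_router(question: str,
               tools: dict[str, Callable[[str], str]],
               plan_next_step: Callable[[str, list[tuple[str, str]]], Step],
               max_steps: int = 5) -> str:
    """Ask the planner for the next step until it returns a final answer."""
    history: list[tuple[str, str]] = []  # (tool name, tool output) pairs observed so far
    for _ in range(max_steps):
        step = plan_next_step(question, history)
        if step.tool is None:
            return step.argument
        history.append((step.tool, tools[step.tool](step.argument)))
    raise RuntimeError("router did not finish within max_steps")


# Hypothetical stand-ins for the SQL agent, the DB agent and the LLM's decisions.
def fake_sql_tool(question: str) -> str:
    return "SELECT count() FROM ecommerce.users"


def fake_db_tool(query: str) -> str:
    return "42"


def scripted_planner(question: str, history: list[tuple[str, str]]) -> Step:
    if not history:
        return Step("sql", question)    # first, generate a query
    last_tool, last_output = history[-1]
    if last_tool == "sql":
        return Step("db", last_output)  # then execute it
    return Step(None, f"Answer: {last_output}")  # finally, answer


tools = {"sql": fake_sql_tool, "db": fake_db_tool}
print(run_router("How many users?", tools, scripted_planner))  # Answer: 42
```

The `max_steps` guard matters in practice: an LLM planner can keep calling tools indefinitely, so the loop needs an explicit stopping rule.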

We will start by adding the BeeAI framework (the beeai_framework package) to our project.

uv add beeai_framework

To enable our orchestrator to call the SQL and DB agents, we will wrap them as tools. This way, the orchestrator can treat them like any other tool and invoke them when needed.
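In framework-neutral terms, each wrapper is an adapter: it gives a remote agent a name, a description the LLM can read, and a run method that returns an output object able to render itself as text and report whether it is empty (the same `get_text_content()` and `is_empty()` methods the BeeAI wrappers implement). `AgentTool`, `ToolResult` and `fake_sql_agent` are hypothetical names used only for this sketch.

```python
import asyncio
import json
from dataclasses import asdict, dataclass
from typing import Awaitable, Callable


@dataclass
class ToolResult:
    text: str

    def get_text_content(self) -> str:
        # Serialise the result as JSON, mirroring the to_json() call in the wrappers.
        return json.dumps(asdict(self))

    def is_empty(self) -> bool:
        return self.text.strip() == ""


@dataclass
class AgentTool:
    """Hypothetical adapter exposing a remote agent as a named tool."""
    name: str
    description: str
    call_agent: Callable[[str], Awaitable[str]]

    async def run(self, text: str) -> ToolResult:
        return ToolResult(text=await self.call_agent(text))


async def fake_sql_agent(question: str) -> str:
    # Stand-in for an ACP client call such as run_sync(agent="sql_agent", ...).
    return "SELECT 1 AS test"


sql_tool = AgentTool(
    name="SQL Query Generator",
    description="Generate SQL queries to answer questions about the e-commerce analytics database",
    call_agent=fake_sql_agent,
)
result = asyncio.run(sql_tool.run("any question"))
print(result.get_text_content())  # {"text": "SELECT 1 AS test"}
```

Because the orchestrator only sees the name, description and output, swapping the remote agent behind a tool does not require touching the orchestrator.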

Let’s start with the SQL agent. It’s primarily boilerplate code: we define the input and output fields using Pydantic and then call the agent in the _run function.

# sql_tool.py
from pydantic import BaseModel, Field
from acp_sdk import Message
from acp_sdk.client import Client
from acp_sdk.models import MessagePart
from beeai_framework.tools.tool import Tool
from beeai_framework.tools.types import ToolRunOptions
from beeai_framework.context import RunContext
from beeai_framework.emitter import Emitter
from beeai_framework.tools import ToolOutput
from beeai_framework.utils.strings import to_json

# helper function: call an agent on the SQL agent server (port 8002)
async def run_agent(agent: str, input: str) -> list[Message]:
    async with Client(base_url="http://localhost:8002") as client:
        run = await client.run_sync(
            agent=agent, input=[Message(parts=[MessagePart(content=input, content_type="text/plain")])]
        )
    return run.output

class SqlQueryToolInput(BaseModel):
    question: str = Field(description="The question to answer using SQL queries against the e-commerce analytics database")

class SqlQueryToolResult(BaseModel):
    sql_query: str = Field(description="The SQL query that answers the question")

class SqlQueryToolOutput(ToolOutput):
    result: SqlQueryToolResult = Field(description="SQL query result")

    def get_text_content(self) -> str:
        return to_json(self.result)

    def is_empty(self) -> bool:
        return self.result.sql_query.strip() == ""

    def __init__(self, result: SqlQueryToolResult) -> None:
        super().__init__()
        self.result = result

class SqlQueryTool(Tool[SqlQueryToolInput, ToolRunOptions, SqlQueryToolOutput]):
    name = "SQL Query Generator"
    description = "Generate SQL queries to answer questions about the e-commerce analytics database"
    input_schema = SqlQueryToolInput

    def _create_emitter(self) -> Emitter:
        return Emitter.root().child(
            namespace=["tool", "sql_query"],
            creator=self,
        )

    async def _run(self, input: SqlQueryToolInput, options: ToolRunOptions | None, context: RunContext) -> SqlQueryToolOutput:
        result = await run_agent("sql_agent", input.question)
        return SqlQueryToolOutput(result=SqlQueryToolResult(sql_query=str(result[0])))

Let’s follow the same approach with the DB agent.

# db_tool.py
from pydantic import BaseModel, Field
from acp_sdk import Message
from acp_sdk.client import Client
from acp_sdk.models import MessagePart
from beeai_framework.tools.tool import Tool
from beeai_framework.tools.types import ToolRunOptions
from beeai_framework.context import RunContext
from beeai_framework.emitter import Emitter
from beeai_framework.tools import ToolOutput
from beeai_framework.utils.strings import to_json

# helper function: call an agent on the DB agent server (port 8001)
async def run_agent(agent: str, input: str) -> list[Message]:
    async with Client(base_url="http://localhost:8001") as client:
        run = await client.run_sync(
            agent=agent, input=[Message(parts=[MessagePart(content=input, content_type="text/plain")])]
        )
    return run.output

class DatabaseQueryToolInput(BaseModel):
    query: str = Field(description="The SQL query or question to execute against the ClickHouse database")

class DatabaseQueryToolResult(BaseModel):
    result: str = Field(description="The result of the database query execution")

class DatabaseQueryToolOutput(ToolOutput):
    result: DatabaseQueryToolResult = Field(description="Database query execution result")

    def get_text_content(self) -> str:
        return to_json(self.result)

    def is_empty(self) -> bool:
        return self.result.result.strip() == ""

    def __init__(self, result: DatabaseQueryToolResult) -> None:
        super().__init__()
        self.result = result

class DatabaseQueryTool(Tool[DatabaseQueryToolInput, ToolRunOptions, DatabaseQueryToolOutput]):
    name = "Database Query Executor"
    description = "Execute SQL queries and questions against the ClickHouse database"
    input_schema = DatabaseQueryToolInput

    def _create_emitter(self) -> Emitter:
        return Emitter.root().child(
            namespace=["tool", "database_query"],
            creator=self,
        )

    async def _run(self, input: DatabaseQueryToolInput, options: ToolRunOptions | None, context: RunContext) -> DatabaseQueryToolOutput:
        result = await run_agent("db_agent", input.query)
        return DatabaseQueryToolOutput(result=DatabaseQueryToolResult(result=str(result[0])))

Now let’s put together the main agent that will orchestrate the others as tools. We will use the ReAct agent implementation from the BeeAI framework for the orchestrator. I’ve also added some extra logging to the tool wrappers around our DB and SQL agents, so that we can trace every call.

from collections.abc import AsyncGenerator
from acp_sdk import Message
from acp_sdk.models import MessagePart
from acp_sdk.server import Context, Server
from beeai_framework.backend.chat import ChatModel
from beeai_framework.agents.react import ReActAgent
from beeai_framework.memory import TokenMemory
from beeai_framework.utils.dicts import exclude_none
from sql_tool import SqlQueryTool
from db_tool import DatabaseQueryTool
import os
import logging

# Configure logging
logger = logging.getLogger(__name__)
logger.setLevel(logging.INFO)

# Only add handler if it doesn't already exist
if not logger.handlers:
    handler = logging.StreamHandler()
    handler.setLevel(logging.INFO)
    formatter = logging.Formatter('ORCHESTRATOR - %(levelname)s - %(message)s')
    handler.setFormatter(formatter)
    logger.addHandler(handler)

# Prevent propagation to avoid duplicate messages
logger.propagate = False

# Wrap our tools with additional logging for traceability
class LoggingSqlQueryTool(SqlQueryTool):
    async def _run(self, input, options, context):
        logger.info(f"🔍 SQL Tool Request: {input.question}")
        result = await super()._run(input, options, context)
        logger.info(f"📝 SQL Tool Response: {result.result.sql_query}")
        return result

class LoggingDatabaseQueryTool(DatabaseQueryTool):
    async def _run(self, input, options, context):
        logger.info(f"🗄️ Database Tool Request: {input.query}")
        result = await super()._run(input, options, context)
        logger.info(f"📊 Database Tool Response: {result.result.result}...")
        return result

server = Server()

@server.agent(name="orchestrator")
async def orchestrator(input: list[Message], context: Context) -> AsyncGenerator:
    logger.info(f"🚀 Orchestrator started with input: {input[0].parts[0].content}")

    llm = ChatModel.from_name("openai:gpt-4o-mini")

    # ReAct agent that can call the SQL and DB agents through their tool wrappers
    agent = ReActAgent(
        llm=llm,
        tools=[LoggingSqlQueryTool(), LoggingDatabaseQueryTool()],
        templates={
            "system": lambda template: template.update(
                defaults=exclude_none({
                    "instructions": """
You are an expert data analyst assistant that helps users analyze e-commerce data.
You have access to two tools:
1. SqlQueryTool - Use this to generate SQL queries from natural language questions about the e-commerce database
2. DatabaseQueryTool - Use this to execute SQL queries directly against the ClickHouse database

The database contains two main tables:
- ecommerce.users (customer information)
- ecommerce.sessions (user sessions and transactions)

When a user asks a question:
1. First, use SqlQueryTool to generate the appropriate SQL query
2. Then, use DatabaseQueryTool to execute that query and get the results
3. Present the results in a clear, understandable format

Always provide context about what the data shows and any insights you can derive.
""",
                    "role": "system"
                })
            )
        }, memory=TokenMemory(llm))

    prompt = str(input[0])
    logger.info(f"🤖 Running ReAct agent with prompt: {prompt}")

    response = await agent.run(prompt)

    logger.info(f"✅ Orchestrator completed. Response length: {len(response.result.text)} characters")
    logger.info(f"📤 Final response: {response.result.text}...")

    yield Message(parts=[MessagePart(content=response.result.text)])

if __name__ == "__main__":
    server.run(port=8003)

Now, just like before, we can run the orchestrator agent using the ACP client to see the result.

import asyncio
from acp_sdk.client import Client

async def router_example() -> None:
    async with Client(base_url="http://localhost:8003") as orchestrator_client:
        question = 'How many customers did we have in May 2024?'
        response = await orchestrator_client.run_sync(
            agent="orchestrator", input=question
        )
        print('Orchestrator response:')

        # Debug: Print the response structure
        print(f"Response type: {type(response)}")
        print(f"Response output length: {len(response.output) if hasattr(response, 'output') else 'No output attribute'}")

        if response.output and len(response.output) > 0:
            print(response.output[0].parts[0].content)
        else:
            print("No response received from orchestrator")
            print(f"Full response: {response}")

asyncio.run(router_example())

# In May 2024, we had 234,544 unique active customers.

Our system worked well, and we got the expected result. Good job!

Let’s see how it worked under the hood by checking the logs from the orchestrator server. The orchestrator first invoked the SQL agent through the SQL tool. Then, it passed the returned query to the DB agent. Finally, it produced the final answer.

ORCHESTRATOR - INFO - 🚀 Orchestrator started with input: How many customers did we have in May 2024?

ORCHESTRATOR - INFO - 🤖 Running ReAct agent with prompt: How many customers did we have in May 2024?

ORCHESTRATOR - INFO - 🔍 SQL Tool Request: How many customers did we have in May 2024?

ORCHESTRATOR - INFO - 📝 SQL Tool Response:

SELECT COUNT(uniqExact(u.user_id)) AS active_customers

FROM ecommerce.users AS u

JOIN ecommerce.sessions AS s ON u.user_id = s.user_id

WHERE u.is_active = 1

AND s.action_date >= '2024-05-01'

AND s.action_date < '2024-06-01'

FORMAT TabSeparatedWithNames

ORCHESTRATOR - INFO - 🗄️ Database Tool Request:

SELECT COUNT(uniqExact(u.user_id)) AS active_customers

FROM ecommerce.users AS u

JOIN ecommerce.sessions AS s ON u.user_id = s.user_id

WHERE u.is_active = 1

AND s.action_date >= '2024-05-01'

AND s.action_date < '2024-06-01'

FORMAT TabSeparatedWithNames

ORCHESTRATOR - INFO - 📊 Database Tool Response: 234544...

ORCHESTRATOR - INFO - ✅ Orchestrator completed. Response length: 52 characters

ORCHESTRATOR - INFO - 📤 Final response: In May 2024, we had 234,544 unique active customers....

Thanks to the extra logging we added, we can now trace all the calls made by the orchestrator.

You can find the full code on GitHub.

Summary

In this article, we’ve explored the ACP protocol and its capabilities. Here’s a quick recap of the key points:

- ACP (Agent Communication Protocol) is an open protocol that aims to standardise communication between agents. It complements MCP, which handles interactions between agents and external tools and data sources.

- ACP follows a client-server architecture and uses RESTful APIs.

- The protocol is technology- and framework-agnostic, allowing you to build interoperable systems and create new collaborations between agents seamlessly.

- With ACP, you can implement a wide range of agent interactions, from simple chaining in well-defined workflows to the router pattern, where an orchestrator can delegate tasks dynamically to other agents.

Thank you for reading. I hope this article was insightful. Remember Einstein’s advice: “The important thing is not to stop questioning. Curiosity has its own reason for existing.” May your curiosity lead you to your next great insight.

Reference

This article is inspired by the “ACP: Agent Communication Protocol” short course from DeepLearning.AI.