On August 8, 2025, Roblox introduced Sentinel, an open-source artificial intelligence system designed to detect potential child exploitation patterns in online chats. The launch comes in response to mounting criticism and legal challenges over the platform's safety.

Roblox, which reports more than 111 million monthly active users, said that Sentinel has already helped identify hundreds of potential child exploitation cases, which were subsequently reported to law enforcement. Matt Kaufman, Roblox’s chief safety officer, explained that the company’s earlier protective measures, such as filters for profanity and abusive language, could analyze only individual lines or short sequences of text. Kaufman stated, “But when you’re thinking about things related to child endangerment or grooming, the types of behaviors you’re looking at manifest over a very long period of time.”

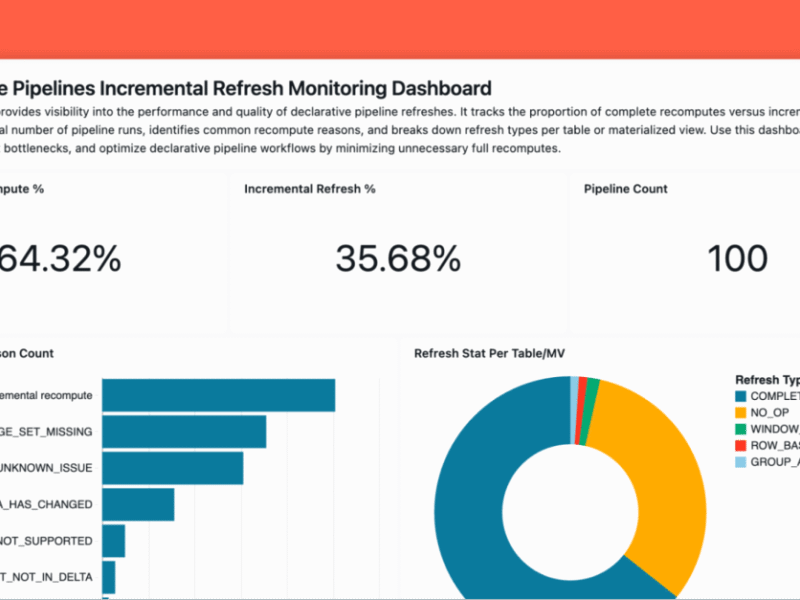

Sentinel is designed to identify behavioral patterns in conversations that unfold over extended periods, rather than focusing on isolated words or phrases. The system processes approximately 6 billion chat messages daily, analyzing them in one-minute snapshots to assess context. To support this analysis, Roblox engineers built two distinct indexes: one contains examples of benign chat interactions, and the other contains messages that have been identified as violating child safety guidelines.
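To make the two-index idea concrete, here is a minimal sketch of how a single one-minute snapshot might be scored against a benign index and a violating index. It is not Roblox's implementation. It assumes, hypothetically, that each snapshot has already been converted into a numeric embedding vector, and it uses plain cosine similarity to the nearest example in each index; the function names and the scoring rule are illustrative assumptions only.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def nearest_similarity(vec, index):
    """Highest similarity between vec and any example in the index (0.0 for an empty index)."""
    return max((cosine(vec, example) for example in index), default=0.0)

def snapshot_score(vec, benign_index, violating_index):
    """Hypothetical score: positive means the snapshot sits closer to the violating
    examples than to the benign ones, negative means the opposite."""
    return nearest_similarity(vec, violating_index) - nearest_similarity(vec, benign_index)

# Toy 2-D "embeddings" (hypothetical data, for illustration only).
benign_index = [[1.0, 0.0], [0.9, 0.1]]
violating_index = [[0.0, 1.0]]
print(snapshot_score([0.0, 1.0], benign_index, violating_index))  # leans toward violating
print(snapshot_score([1.0, 0.0], benign_index, violating_index))  # leans toward benign
```

Because both indexes are just collections of examples, adding newly confirmed cases (as Roblox describes doing) changes future scores without retraining anything in this sketch.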

Naren Koneru, vice president of engineering for trust and safety at Roblox, explained that new content is continuously incorporated into both indexes to refine the AI model’s detection capabilities. Koneru stated, “That index gets better as we detect more bad actors, we just continuously update that index.” Koneru added, “Then we have another sample of what does a normal, regular user do?”

The system monitors a user’s ongoing interactions to determine the trajectory of their behavior, assessing whether it aligns with safe conduct or indicates a progression toward risky activities. Koneru noted, “It doesn’t happen on one message because you just send one message, but it happens because of all of your days’ interactions are leading towards one of these two.” If Sentinel flags a user for further scrutiny, human moderators conduct an in-depth review, examining the user’s complete chat history, their list of friends, and the games they have engaged with on the platform. When deemed necessary, Roblox escalates these cases to law enforcement authorities and the National Center for Missing and Exploited Children.
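The idea that no single message decides the outcome, but a user's accumulated interactions lean toward one side, can be illustrated with a running average over per-snapshot scores. The sketch below is an assumption-laden illustration, not Sentinel's actual method: it takes a stream of hypothetical per-snapshot scores (positive meaning closer to violating examples) and keeps an exponentially weighted moving average; the `alpha` and `threshold` values are made up. A `True` result stands in for "flag for human review," which in the process described above is where moderators take over.

```python
from dataclasses import dataclass

@dataclass
class TrajectoryTracker:
    alpha: float = 0.1      # hypothetical smoothing factor: weight given to the newest snapshot
    threshold: float = 0.2  # hypothetical level above which a user is flagged for review
    level: float = 0.0      # running, exponentially weighted average of snapshot scores

    def update(self, score: float) -> bool:
        """Fold in one snapshot score; return True if the running level exceeds the threshold."""
        self.level = (1 - self.alpha) * self.level + self.alpha * score
        return self.level > self.threshold

def flag_for_review(scores, alpha=0.1, threshold=0.2):
    """Return True if the user's trajectory ever crosses the threshold (hypothetical rule)."""
    tracker = TrajectoryTracker(alpha=alpha, threshold=threshold)
    return any([tracker.update(s) for s in scores])

# One alarming snapshot alone does not trip the flag; a sustained pattern does.
print(flag_for_review([1.0]))
print(flag_for_review([1.0, 1.0, 1.0]))
```

The moving average is one simple way to reward consistency over time; a real system could use any number of other aggregation rules.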

Sentinel's launch comes amid ongoing legal challenges against Roblox. A lawsuit filed in Iowa in July 2025 alleges that a 13-year-old girl was contacted by an adult predator on Roblox, then abducted and trafficked across multiple states. The lawsuit asserts that the platform's design leaves minors vulnerable. Roblox's policies prohibit users from sharing personal information, images, and videos in chat.

Direct messaging for users under 13 is restricted unless a parent gives explicit consent. Roblox monitors chat for safety violations, which is possible because chats on the platform are not end-to-end encrypted. The company acknowledges that no system can guarantee complete protection but argues that AI advances such as Sentinel substantially improve the chances of early detection.