has collected data on the outcomes of patients who have acquired “Pathogen A”, which causes an infectious respiratory illness. For each patient, 8 features are available, along with one of three outcomes: (a) treated at home and recovered, (b) hospitalized and recovered, or (c) died.

It has proven trivial to train a neural net to predict one of the three outcomes from the 8 features with almost complete accuracy. However, the health authorities would like to predict something that was not captured: among the patients who can be treated at home, which ones are most at risk of having to go to hospital? And among the patients who are predicted to be hospitalized, which ones are most at risk of not surviving the infection? Can we get a numeric score that represents how serious the infection will be?

In this note I describe a neural net with a bottleneck and a special head that learns a scoring system from just a few categories, and discuss some properties of small neural networks one is likely to encounter. The accompanying code can be found at https://codeberg.org/csirmaz/category-scoring.

The dataset

To illustrate the approach, I developed a toy example: a non-linear but deterministic piece of code that calculates the outcome from the 8 features. The calculation is for illustration only and is not meant to be faithful to the science; the feature names were chosen merely to be in keeping with the medical example. The 8 features used in this note are:

- Previous infection with Pathogen A (boolean)

- Previous infection with Pathogen B (boolean)

- Acute / current infection with Pathogen B (boolean)

- Cancer diagnosis (boolean)

- Weight deviation from average, arbitrary unit (-100 ≤ x ≤ 100)

- Age, years (0 ≤ x ≤ 100)

- Blood pressure deviation from average, arbitrary unit (0 ≤ x ≤ 100)

- Years smoked (0 ≤ x ≤ ~88)

When generating sample data, the features are drawn independently from uniform distributions, except for years smoked, which depends on age; a cohort of non-smokers (50%) is also built in. We checked that with this sampling the three outcomes occur with roughly equal probability, and measured the mean and variance of the number of years smoked so that we could normalize all inputs to zero mean and unit variance.
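The sampling and normalization can be sketched in NumPy as follows. The feature ranges come from the list above, but the exact way years smoked depends on age is not given here: the rule below (smokers start at a hypothetical age of 12, consistent with the ~88-year maximum, and have smoked for a uniformly random fraction of the years since) is an assumption, and `sample_features` and `normalize` are hypothetical names rather than functions from the accompanying code.

```python
import numpy as np

def sample_features(n, rng):
    """Draw n synthetic patients; column order follows the feature list above.

    Hypothetical sampler: the years-smoked rule is an assumption.
    """
    booleans = rng.integers(0, 2, size=(n, 4)).astype(float)  # prev A, prev B, acute B, cancer
    weight_dev = rng.uniform(-100, 100, size=n)
    age = rng.uniform(0, 100, size=n)
    bp_dev = rng.uniform(0, 100, size=n)
    # Assumption: half the cohort never smoked; smokers started at age 12.
    smoker = rng.random(n) < 0.5
    years_smoked = np.where(smoker, np.maximum(age - 12, 0) * rng.random(n), 0.0)
    return np.column_stack([booleans, weight_dev, age, bp_dev, years_smoked])

rng = np.random.default_rng(0)
# Estimate per-feature mean and standard deviation from a large reference sample.
reference = sample_features(200_000, rng)
mean, std = reference.mean(axis=0), reference.std(axis=0)

def normalize(x):
    """Shift and scale every feature to zero mean and unit variance."""
    return (x - mean) / std

batch = normalize(sample_features(50_000, rng))
print(np.round(batch.mean(axis=0), 2))  # close to 0 for every feature
print(np.round(batch.std(axis=0), 2))   # close to 1 for every feature
```

For the uniform features the statistics could also be computed analytically (a uniform variable on [a, b] has mean (a + b)/2 and variance (b − a)²/12); an empirical estimate is simply the easiest route for the age-dependent years smoked.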

As an illustration of the toy example, below is a plot of the outcomes with weight on the horizontal axis and age on the vertical axis, with all other parameters fixed. “.” stands for home treatment, “o” for hospitalization and “+” for death.

....................
....................
....................
....................
...............ooooo
............oooooooo
............oooooooo
............oooooooo
............oooooooo
............oooooooo
............ooooooo+
...........ooooooo++
...........oooooo+++
...........oooooo+++
...........ooooo++++
.......oooooooo+++++
..oooooooooooo++++++
ooooooooooooo+++++++
oooooooooooo++++++++
ooooooooooo+++++++++

A classic classifier

The data is nonlinear but very neat, and so it is no surprise that a small classifier network can learn it to 98-99% validation accuracy. Launch train.py --classifier to train a simple neural network with 6 layers (each 8 wide) and ReLU activation, defined in ScoringModel.build_classifier_model().

But how to train a scoring system?

Our aim is then to train a system that, given the 8 features as inputs, can produce a score corresponding to the danger the patient is in when infected with Pathogen A. The complication is that we have no scores available in our training data, only the three outcomes (categories). To ensure that the scoring system is meaningful, we would like certain score ranges to correspond to the three main outcomes.

The first thing someone may try is to assign a numeric value to each category, like 0 to home treatment, 1 to hospitalization and 2 to death, and use it as the target. Then set up a neural network with a single output, and train it with e.g. MSE loss.
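As a minimal NumPy sketch of this naive setup, the category index simply becomes the regression target and the loss is the mean squared error between the single output and that target. The `predictions` values below are made up rather than produced by a trained model, and rounding to the nearest target is just one possible way to read a category back out.

```python
import numpy as np

# Outcome indices: 0 = home treatment, 1 = hospitalization, 2 = death.
TARGET_VALUES = np.array([0.0, 1.0, 2.0])

def mse_loss(predictions, outcomes):
    """Mean squared error between single-output predictions and category targets."""
    targets = TARGET_VALUES[outcomes]
    return np.mean((predictions - targets) ** 2)

outcomes = np.array([0, 1, 2, 1])
predictions = np.array([0.1, 1.4, 1.9, 0.8])  # hypothetical model outputs
print(mse_loss(predictions, outcomes))
# One possible read-out: round to the nearest target value.
print(np.clip(np.rint(predictions), 0, 2).astype(int))
```

Because this loss is minimized exactly at 0, 1 and 2, a network with enough capacity is rewarded for pulling every output onto one of those three values.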

The problem with this approach is that the model will learn to contort (condense and expand) the projection of the inputs around the three targets, so ultimately the model will always return a value close to 0, 1 or 2. You can try this by running train.py --predict-score, which trains a model with 2 dense layers with ReLU activations and a final dense layer with a single output, defined in ScoringModel.build_predict_score_model().

As the following histogram of the model's output on a random batch of inputs shows, this is indeed what happens, even with only 2 layers.

..................................................#.........
..................................................#.........
.........#........................................#.........
.........#........................................#.........
.........#........................................#.........
.........#...................#....................#.........
.........#...................#...................##.........
.........#...................#...................##.........
.........###....#............##.#................##.........
........####.#.##.#..#..##.####.##..........#...###.........

Step 1: A low-capacity network

To prevent this and get a more continuous score, we want to drastically reduce the network's capacity to contort the inputs. We will go to the extreme and use a linear regression; in a previous TDS article I described how to use the components offered by Keras to “train” one. We will reuse that idea here and build a “degenerate” neural network out of a single dense layer with no activation. This allows the score to move more in line with the inputs, and has the added advantage that the resulting network is highly interpretable: it simply provides a weight for each input, and the score is their linear combination.
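To make the interpretability point concrete, here is a NumPy sketch of what such a single dense unit with no activation computes (in Keras, a `Dense(1)` layer with its default, linear activation): a weighted sum of the normalized inputs plus a bias. The weights and bias below are made-up values, not the ones the model learns.

```python
import numpy as np

def linear_score(x, weights, bias):
    """Output of a single dense unit with no activation: x . w + b."""
    return x @ weights + bias

weights = np.array([-0.2, -0.1, 0.4, 0.5, 1.2, 1.4, 0.9, 1.1])  # hypothetical
bias = 0.3                                                      # hypothetical

patient = np.zeros(8)   # an "average" patient after normalization
older = patient.copy()
older[5] += 1.0         # age one standard deviation above average

# The score moves by exactly the age weight: each weight is the effect of a
# one-standard-deviation change in its feature, whatever the other inputs are.
print(linear_score(older, weights, bias) - linear_score(patient, weights, bias))
```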

However, with this simplification, the model loses all ability to condense and expand the result to match the target scores for each category. It will still try, but, especially with more output categories, there is no guarantee that the categories occur at regular intervals along any linear combination of the inputs.

We want to enable the model to determine the best thresholds between the categories, that is, to make the thresholds trainable parameters. This is where the “category approximator head” comes in.

Step 2: A category approximator head

In order to train the model using the categories as targets, we add a head that learns to predict the category from the score. Our aim is simply to establish two thresholds (for our three categories), t0 and t1, such that

- if the score < t0, then we predict treatment at home and recovery,

- if t0 < score < t1, then we predict treatment in hospital and recovery,

- if t1 < score, then we predict that the patient does not survive.

The model takes the shape of an encoder-decoder, where the encoder part produces the score, and the decoder part allows comparing and training the score against the categories.

One approach is to add a dense layer on top of the score, with a single input and as many outputs as there are categories. This can learn the thresholds and predict the probability of each category via softmax. Training can then proceed as usual with a categorical cross-entropy loss.

Clearly, the dense layer won’t learn the thresholds directly; instead, it will learn N weights and N biases given N output categories. So let’s figure out how to get the thresholds from these.
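The head is small enough to write out by hand. Below is a NumPy sketch of its forward pass and loss for a batch of scores: one weight and one bias per category, a softmax, and categorical cross-entropy against the outcome index. The parameter values are hypothetical; in Keras the basic head would be a `Dense(3, activation="softmax")` layer on top of the one-unit score layer.

```python
import numpy as np

def head_probabilities(scores, w, b):
    """Map a batch of scalar scores to category probabilities via softmax."""
    logits = scores[:, None] * w[None, :] + b[None, :]   # shape (batch, categories)
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability only
    exp = np.exp(logits)
    return exp / exp.sum(axis=1, keepdims=True)

def cross_entropy(probs, outcomes):
    """Mean categorical cross-entropy for integer outcome labels."""
    return -np.mean(np.log(probs[np.arange(len(outcomes)), outcomes]))

w = np.array([-2.0, 0.0, 2.0])   # hypothetical head weights, one per category
b = np.array([0.0, 1.0, 0.0])    # hypothetical head biases
scores = np.array([-3.0, 0.0, 3.0])
probs = head_probabilities(scores, w, b)
print(probs.argmax(axis=1))                       # winning category per score
print(cross_entropy(probs, np.array([0, 1, 2])))
```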

Step 3: Extracting the thresholds

Notice that the output of the softmax layer is the vector of probabilities for each category; the predicted category is the one with the highest probability. Furthermore, softmax preserves order: the largest input value always receives the largest probability. Therefore, the largest output of the dense layer corresponds to the category it predicts based on the incoming score.

If the dense layer has learnt the weights [w1, w2, w3] and the biases [b1, b2, b3], then its outputs are

o1 = w1*score + b1

o2 = w2*score + b2

o3 = w3*score + b3

These are all just straight lines as a function of the incoming score (e.g. y = w1*x + b1), and whichever is at the top at a given score is the winning category. Here is a quick illustration:

The thresholds are then the intersection points between the neighboring lines. Assuming the order of categories to be o1 (home) → o2 (hospital) → o3 (death), we need to solve the o1 = o2 and o2 = o3 equations, yielding

t0 = (b2 – b1) / (w1 – w2)

t1 = (b3 – b2) / (w2 – w3)

This is implemented in ScoringModel.extract_thresholds() (though there is some additional logic there explained below).
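A minimal NumPy sketch of these two formulas, generalized to N categories by intersecting each pair of neighbouring lines. It assumes the categories are already in the intended order and leaves out the additional logic of ScoringModel.extract_thresholds(); `thresholds_from_head` is a hypothetical name.

```python
import numpy as np

def thresholds_from_head(w, b):
    """Intersections of neighbouring lines: t_k = (b[k+1] - b[k]) / (w[k] - w[k+1])."""
    w = np.asarray(w, dtype=float)
    b = np.asarray(b, dtype=float)
    return (b[1:] - b[:-1]) / (w[:-1] - w[1:])

w = [-2.0, 0.0, 2.0]   # hypothetical head weights
b = [0.0, 1.0, 0.0]    # hypothetical head biases
print(thresholds_from_head(w, b))
```

Intersecting neighbours only yields the true boundaries if every category actually wins somewhere; a line that never reaches the top produces an intersection that is not a boundary at all.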

Step 4: Ordering the categories

But how do we know what the right order of the categories is? Clearly we have a preferred order (home → hospital → death), but what will the model say?

It is worth noting a couple of things about the lines that represent which category wins at each score. As we are interested in whichever line is the highest, we are talking about the boundary of the region that is above all lines:

Since this region is the intersection of the half-planes above each line, it is necessarily convex. (Note that no line can be vertical.) This means that each category wins over at most one contiguous range of scores; once it loses the top position, it cannot regain it later.

It also means that these ranges are necessarily in the order of the slopes of the lines, that is, of the weights. The biases influence the values of the thresholds (and whether a category wins anywhere at all), but not the order. Going from low to high scores, we first meet the lines with negative slopes, followed by those with small and then large positive slopes.

This is because given any two lines, towards negative infinity the one with the smaller slope (weight) will win, and towards positive infinity, the other. Algebraically speaking, given two lines

f1(x) = w1*x + b1 and f2(x) = w2*x + b2 where w2 > w1,

we already know they intersect at x = (b2 – b1) / (w1 – w2). Below this point, that is, if x < (b2 – b1) / (w1 – w2), multiplying both sides by w1 – w2 flips the inequality (w1 – w2 is negative!), so

(w1 – w2)x > b2 – b1

w1*x + b1 > w2*x + b2

f1(x) > f2(x),

and so f1 wins. The same argument holds in the other direction.
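The argument can also be checked numerically. The NumPy sketch below draws random sets of lines, records which line is on top across a dense grid of scores, and confirms that each line wins over at most one contiguous range and that the winners appear in order of increasing slope. The grid range, number of lines and number of trials are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(42)

def winners_along_axis(w, b, xs):
    """Index of the highest line f_k(x) = w[k] * x + b[k] at each grid point x."""
    return np.argmax(np.outer(xs, w) + b, axis=1)

def collapse_runs(sequence):
    """Drop consecutive repeats, e.g. [2, 2, 0, 0, 1] -> [2, 0, 1]."""
    sequence = np.asarray(sequence)
    keep = np.concatenate(([True], sequence[1:] != sequence[:-1]))
    return sequence[keep].tolist()

xs = np.linspace(-100.0, 100.0, 20_001)
for _ in range(200):
    w = rng.normal(size=5)   # random slopes
    b = rng.normal(size=5)   # random intercepts
    order = collapse_runs(winners_along_axis(w, b, xs))
    # Each line is on top over at most one contiguous range ...
    assert len(order) == len(set(order))
    # ... and, from left to right, the winners have increasing slopes.
    assert all(w[i] < w[j] for i, j in zip(order, order[1:]))
print("ordering confirmed on 200 random sets of lines")
```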

Step 4.5: We messed up (propagate-sum)

And here lies a problem: the scoring model is more or less free to decide what order to put the categories in. That’s not good: a score that predicts death at 0, home treatment at 10, and hospitalization at 20 is clearly nonsensical. However, with certain inputs (especially if one feature dominates a category) this can happen even with extremely simple scoring models like a linear regression.

There is a way to protect against this, though. Keras allows adding a kernel constraint to a dense layer that forces all its weights to be non-negative. Starting from that code, we could implement a kernel constraint that forces the weights to be in increasing order (w1 ≤ w2 ≤ w3), but it is simpler to stick to the available tools. We keep the non-negativity constraint on the dense layer of the head and, fortunately, Keras tensors support slicing and concatenation, so we can split the outputs of that layer into components (say, d1, d2, d3) and use the following as the input to the softmax:

- o1 = d1

- o2 = d1 + d2

- o3 = d1 + d2 + d3

In the code, this is referred to as “propagate sum.”

Substituting the weights and biases into the above, we get

- o1 = w1*score + b1

- o2 = (w1+w2)*score + b1+b2

- o3 = (w1+w2+w3)*score + b1+b2+b3

Since w1, w2 and w3 are all non-negative, we have now ensured that the effective weights used to decide the winning category are in non-decreasing order.

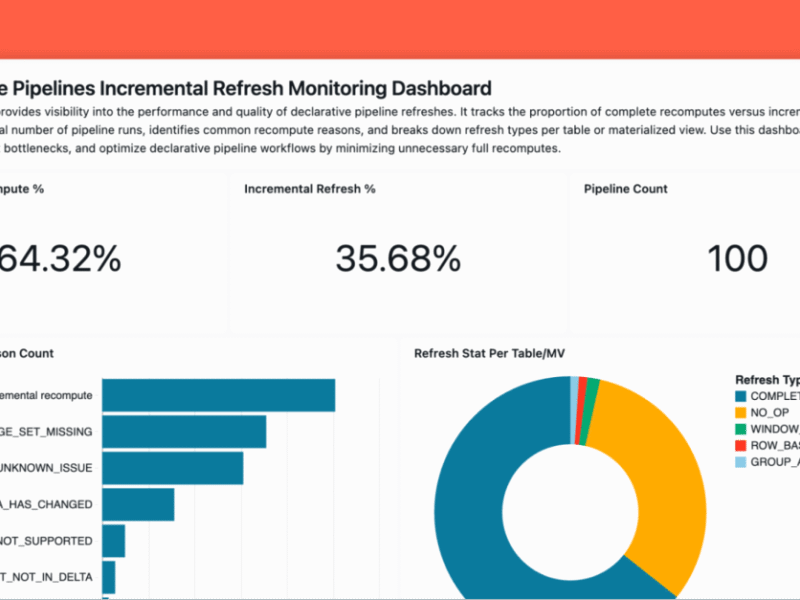
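A NumPy sketch of the propagate-sum head, assuming the raw weights of the head's dense layer are kept non-negative (in Keras, passing the built-in `keras.constraints.NonNeg()` as `kernel_constraint` does this). It builds the effective lines with a cumulative sum and checks a convenient consequence: since o_k = o_(k+1) reduces to d_(k+1) = 0, each threshold is simply -b_(k+1) / w_(k+1). The parameter values are hypothetical.

```python
import numpy as np

def propagate_sum(raw):
    """Cumulative sum along the last axis: o_k = d_1 + ... + d_k."""
    return np.cumsum(raw, axis=-1)

# Hypothetical raw head parameters: weights non-negative, biases unconstrained.
w_raw = np.array([0.5, 1.0, 2.0])
b_raw = np.array([0.3, 1.5, -1.0])

# Effective slopes and intercepts of the lines o_k that reach the softmax.
w_eff = propagate_sum(w_raw)  # non-decreasing because every w_raw >= 0
b_eff = propagate_sum(b_raw)
assert np.all(np.diff(w_eff) >= 0)

# Thresholds from the general neighbouring-lines formula of Step 3 ...
general = (b_eff[1:] - b_eff[:-1]) / (w_eff[:-1] - w_eff[1:])
# ... equal the shortcut -b_k / w_k, because o_k = o_(k+1) reduces to d_(k+1) = 0.
shortcut = -b_raw[1:] / w_raw[1:]
print(general, shortcut)
```

A side effect worth knowing: d1 is added to every output equally, and softmax does not change when the same value is added to all its inputs, so w1 and b1 have no influence on the predictions. The shortcut also needs w2 and w3 to be strictly positive, and if -b2/w2 ends up above -b3/w3, the middle category never wins.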

Step 5: Training and evaluating

All the components are now in place to train the linear regression. The model is implemented in ScoringModel.build_linear_bottleneck_model() and can be trained by running train.py --linear-bottleneck. The code also automatically extracts the thresholds and the weights of the linear combination after each epoch. Note that, as a final step, each threshold must be shifted by the bias of the encoder layer.
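What this shift amounts to can be shown in a few lines. A sketch, assuming the encoder computes score = x · w + c and that the reported score (and hence the reported thresholds) should leave out the encoder bias c: since x · w + c > t exactly when x · w > t − c, every threshold moves by −c. The sign convention in the accompanying code depends on how it defines the reported score, and all values below are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
w = rng.normal(size=8)          # hypothetical encoder weights
c = 0.7                         # hypothetical encoder bias
t = np.array([-1.0, 0.5])       # thresholds found on the biased score

x = rng.normal(size=(1000, 8))  # normalized inputs
biased = x @ w + c              # what the head actually sees
unbiased = x @ w                # plain linear combination of the features
shifted = t - c                 # thresholds expressed for the unbiased score

# Both views assign every sample to the same category.
print(np.array_equal(np.digitize(biased, t), np.digitize(unbiased, shifted)))
```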

Epoch #4 finished. Logs: {'accuracy': 0.7988250255584717, 'loss': 0.4569114148616791, 'val_accuracy': 0.7993124723434448, 'val_loss': 0.4509878158569336}
----- Evaluating the bottleneck model -----
Prev infection A weight: -0.22322197258472443
Prev infection B weight: -0.1420486718416214
Acute infection B weight: 0.43141448497772217
Cancer diagnosis weight: 0.48094701766967773
Weight deviation weight: 1.1893583536148071
Age weight: 1.4411307573318481
Blood pressure dev weight: 0.8644841313362122
Smoked years weight: 1.1094108819961548
Threshold: -1.754680637036648
Threshold: 0.2920824065597968

The linear regression can approximate the toy example with an accuracy of 80%, which is pretty good. Naturally, the maximum achievable accuracy depends on how close to linear the system being modeled is. If it is far from linear, one can consider using a more capable network as the encoder, for example a few dense layers with nonlinear activations. The encoder should still lack the capacity to condense the projected score too much.
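The learnt model is small enough to apply by hand. The NumPy sketch below plugs in the weights and thresholds from the evaluation output above (rounded) and assigns a category by checking which thresholds a score exceeds. It assumes the printed thresholds apply directly to the weighted sum of the normalized features, with the encoder bias already accounted for; the example patient is made up, and its boolean entries of ±1 assume each boolean feature is true for half the samples, so that normalization maps it to exactly ±1.

```python
import numpy as np

# Weights and thresholds from the evaluation output above (rounded).
WEIGHTS = np.array([
    -0.2232,  # previous infection A
    -0.1420,  # previous infection B
    0.4314,   # acute infection B
    0.4809,   # cancer diagnosis
    1.1894,   # weight deviation
    1.4411,   # age
    0.8645,   # blood pressure deviation
    1.1094,   # years smoked
])
THRESHOLDS = np.array([-1.7547, 0.2921])
OUTCOMES = ["home treatment", "hospitalization", "death"]

def score(x_normalized):
    """Linear score of one or more normalized feature vectors."""
    return x_normalized @ WEIGHTS

def predict(scores):
    """0 below the first threshold, 1 between the two, 2 above the second."""
    return np.digitize(scores, THRESHOLDS)

patient = np.array([1, 1, -1, -1, -0.5, -1, -0.5, -0.5])  # hypothetical, normalized
s = score(patient)
print(f"score={s:+.2f} -> {OUTCOMES[int(predict(s))]}")  # score=-4.30 -> home treatment
print(predict(np.array([-5.68, -1.18, 0.89])))           # [0 1 2]
```

The last line feeds in three scores from the sample output of ScoringModel.try_linear_model(), and the resulting categories match the "predicted" column there.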

It is also worth noting that with the linear combination, the weight space in which training happens has minuscule dimensionality compared to regular neural networks: just N, where N is the number of input features, compared to millions, billions or more. There is a frequently described intuition that on high-dimensional error surfaces, genuine local minima and maxima are very rare, as there is almost always a direction in which training can continue to reduce the loss; that is, most points of zero gradient are saddle points. We do not have this luxury in our 8-dimensional weight space, and indeed, training can get stuck in local minima even with optimizers like Adam. Training is extremely fast, though, so running multiple training sessions can solve this problem.
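The remedy of simply retraining several times is easy to illustrate. In the NumPy sketch below, a toy one-dimensional non-convex loss stands in for the model's loss (it is not the scoring model): plain gradient descent ends in whichever minimum lies downhill from its random starting point, and keeping the best of several restarts recovers the global minimum. The loss, learning rate and number of restarts are arbitrary choices.

```python
import numpy as np

def loss(x):
    """Toy non-convex loss: local minimum near x = 1.13, global minimum near x = -1.30."""
    return x**4 - 3 * x**2 + x

def grad(x):
    """Derivative of the toy loss."""
    return 4 * x**3 - 6 * x + 1

def gradient_descent(x, lr=0.01, steps=2000):
    """Plain gradient descent from starting point x."""
    for _ in range(steps):
        x = x - lr * grad(x)
    return x

rng = np.random.default_rng(0)
starts = rng.uniform(-2.0, 2.0, size=20)        # one start per "training session"
finals = [gradient_descent(x0) for x0 in starts]
best = min(finals, key=loss)                     # keep the session with the lowest loss
stuck = sum(x > 0 for x in finals)
print(f"{stuck} of {len(finals)} sessions ended in the local minimum; best x = {best:.2f}")
```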

To illustrate how the learnt linear model works, ScoringModel.try_linear_model() applies it to a set of random inputs. In the output, the target and predicted outcomes are denoted by their index (0: treatment at home, 1: hospitalized, 2: death):

Sample #0: target=1 score=-1.18 predicted=1 ok
Sample #1: target=2 score=+4.57 predicted=2 ok
Sample #2: target=0 score=-1.47 predicted=1 x
Sample #3: target=2 score=+0.89 predicted=2 ok
Sample #4: target=0 score=-5.68 predicted=0 ok
Sample #5: target=2 score=+4.01 predicted=2 ok
Sample #6: target=2 score=+1.65 predicted=2 ok
Sample #7: target=2 score=+4.63 predicted=2 ok
Sample #8: target=2 score=+7.33 predicted=2 ok
Sample #9: target=2 score=+0.57 predicted=2 ok

And ScoringModel.visualize_linear_model() generates a histogram of the score for a batch of random inputs. As above, “.” denotes home treatment, “o” hospitalization, and “+” death. For example:

+
+
+
+ +
+ +
. o + + + +
.. .. . o oo ooo o+ + + ++ + + + +
.. .. . o oo ooo o+ + + ++ + + + +
.. .. . . .... . o oo oooooo+ ++ + ++ + + + + + + +
.. .. . . .... . o oo oooooo+ ++ + ++ + + + + + + +

The histogram is spiky because of the boolean inputs, which (before normalization) take only the values 0 or 1 in the linear combination, but overall it is still much smoother than the output of the 2-layer neural network above. Many input vectors are mapped to scores near the thresholds between the outcomes, which allows us to predict whether a patient is dangerously close to being hospitalized, or should be admitted to intensive care as a precaution.
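One way to turn this into a warning is to measure how far each score lies from the nearest threshold and flag the ones within a chosen margin. A NumPy sketch using the thresholds from the evaluation output above; the margin of 0.5 is an arbitrary, hypothetical choice that would need to be set with domain input, and the flag itself is not part of the accompanying code.

```python
import numpy as np

THRESHOLDS = np.array([-1.7547, 0.2921])  # from the evaluation output above (rounded)

def near_threshold(scores, margin=0.5):
    """Flag scores within `margin` of any category threshold (margin is hypothetical)."""
    distance = np.abs(scores[:, None] - THRESHOLDS[None, :]).min(axis=1)
    return distance < margin

scores = np.array([-5.68, -1.47, -1.18, 0.57, 4.57])  # scores from the sample output above
print(near_threshold(scores))
```

With this margin, Sample #2 (score -1.47), the one misclassified case in the sample output, is among the flagged patients.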

Conclusion

Simple models like linear regressions and other low-capacity networks have desirable properties in a number of applications. They are highly interpretable and verifiable by humans – for example, from the results of the toy example above we can clearly see that previous infections protect patients from worse outcomes, and that age is the most important factor in determining the severity of an ongoing infection.

Another property of linear regressions is that their output moves roughly in line with their inputs. It is this feature that we used to acquire a relatively smooth, continuous score from just a few anchor points offered by the limited information available in the training data. Moreover, we did so based on well-known network components available in major frameworks including Keras. Finally, we used a bit of math to extract the information we need from the trainable parameters in the model, and to ensure that the score learnt is meaningful, that is, that it covers the outcomes (categories) in the desired order.

Small, low-capacity models are still powerful tools to solve the right problems. With quick and cheap training, they can also be implemented, tested and iterated over extremely quickly, fitting nicely into agile approaches to development and engineering.