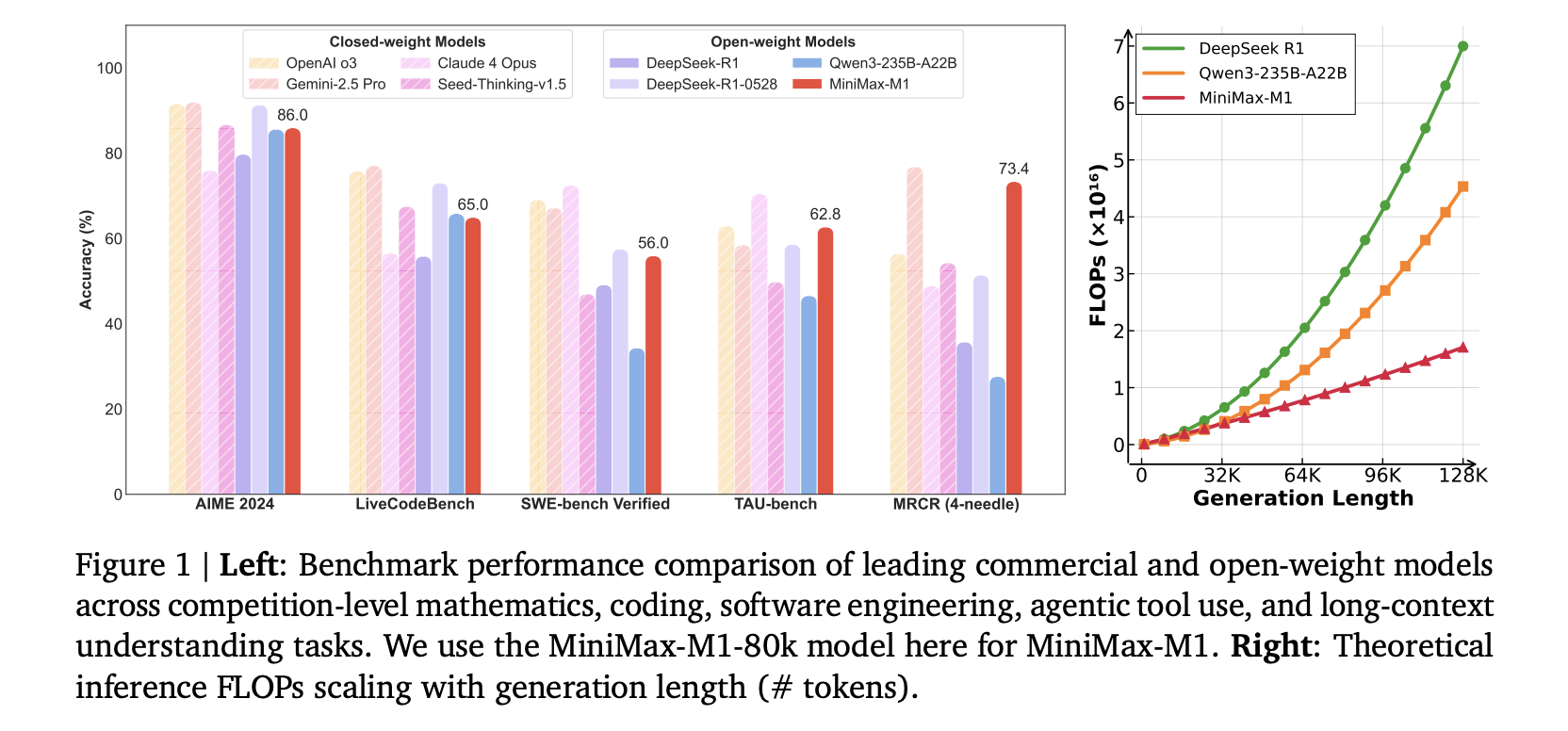

The Challenge of Long-Context Reasoning in AI Models

Large reasoning models are not only designed to understand language but are also structured to think through


to lead the cloud industry with a whopping 32% share due to its early market entry, robust technology and comprehensive service offerings. However, many users

isn’t yet another explanation of the chain rule. It’s a tour through the bizarre side of autograd — where gradients serve physics, not just weights

Boston – June 19, 2025 – Ataccama announced the release of a report by Business Application Research Center (BARC), “The Rising Imperative for Data Observability,”

Image by Editor | Midjourney

While Python-based tools like Streamlit are popular for creating data dashboards, Excel remains one of the most accessible and

Image by Author | Ideogram

You’re architecting a new data pipeline or starting an analytics project, and you’re probably considering whether to use Python

Image by Author | ChatGPT

Web development remains one of the most popular and in-demand professions, and it will continue to thrive even in

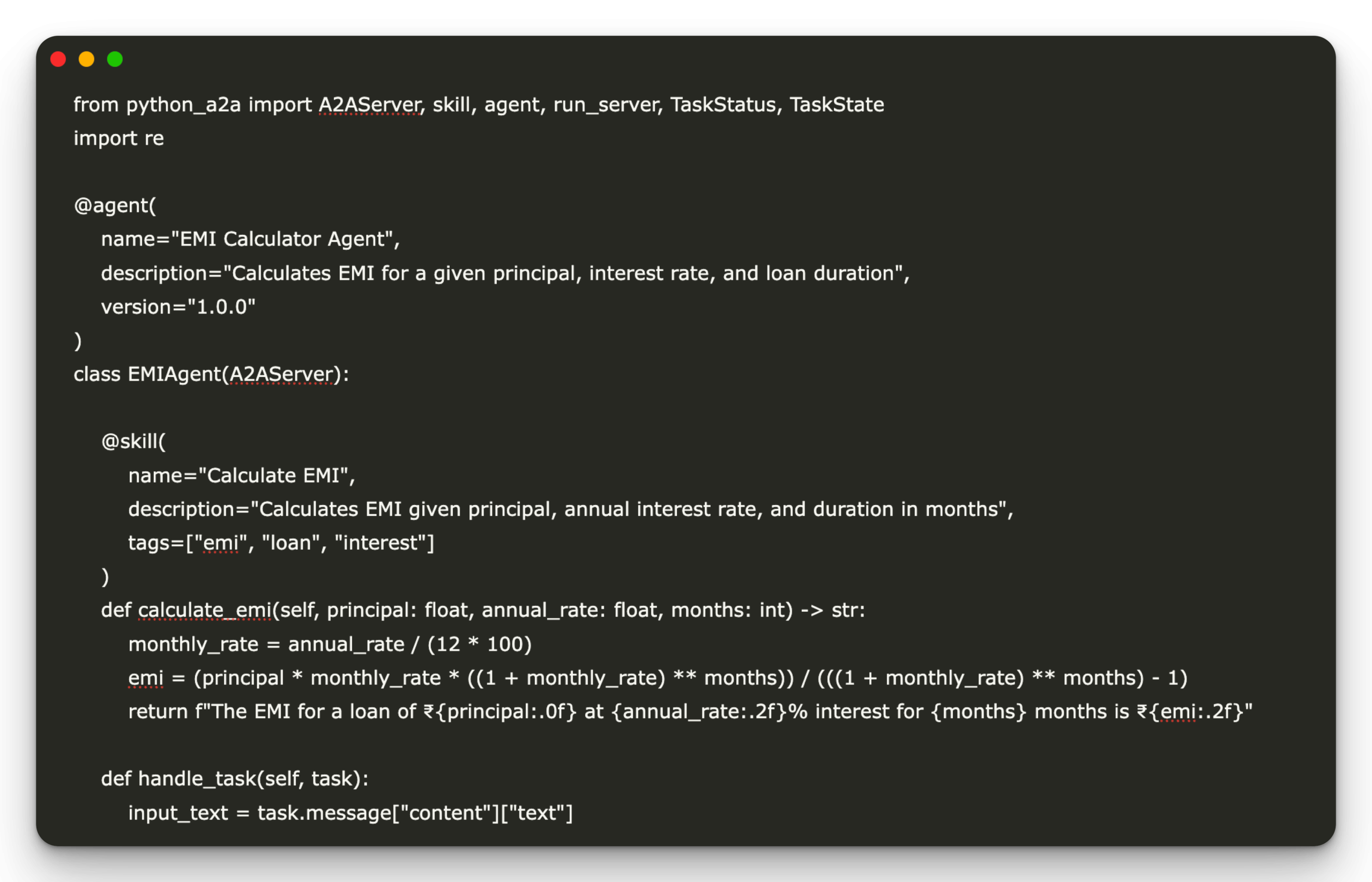

Python A2A is an implementation of Google’s Agent-to-Agent (A2A) protocol, which enables AI agents to communicate with each other using a shared, standardized format—eliminating the

The Challenge of Fine-Tuning Large Transformer Models

Self-attention enables transformer models to capture long-range dependencies in text, which is crucial for comprehending complex language patterns.

In this tutorial, we delve into building an advanced data analytics pipeline using Polars, a lightning-fast DataFrame library designed for optimal performance and scalability. Our