“Manufacturing is the engine of society, and it is the backbone of robust, resilient economies,” says John Hart, head of MIT’s Department of Mechanical Engineering

Category: Updates

Is this movie review a rave or a pan? Is this news story about business or technology? Is this online chatbot conversation veering off into

In today’s competitive business landscape, creating compelling presentations that capture and maintain audience attention is more critical than ever. Traditional static slides often fail to

There’s a strange kind of vulnerability that comes with hitting “submit” on an essay these days. You’re not just worried about grammar slips or whether

When I first heard Grok Imagine had a “Spicy Mode,” I chuckled—you know, because it screams Elon Musk-level flair for controversy. But then the realization

In the rapidly evolving field of agentic AI and AI agents, staying informed is essential. Here’s a comprehensive, up-to-date list of the Top 10 AI

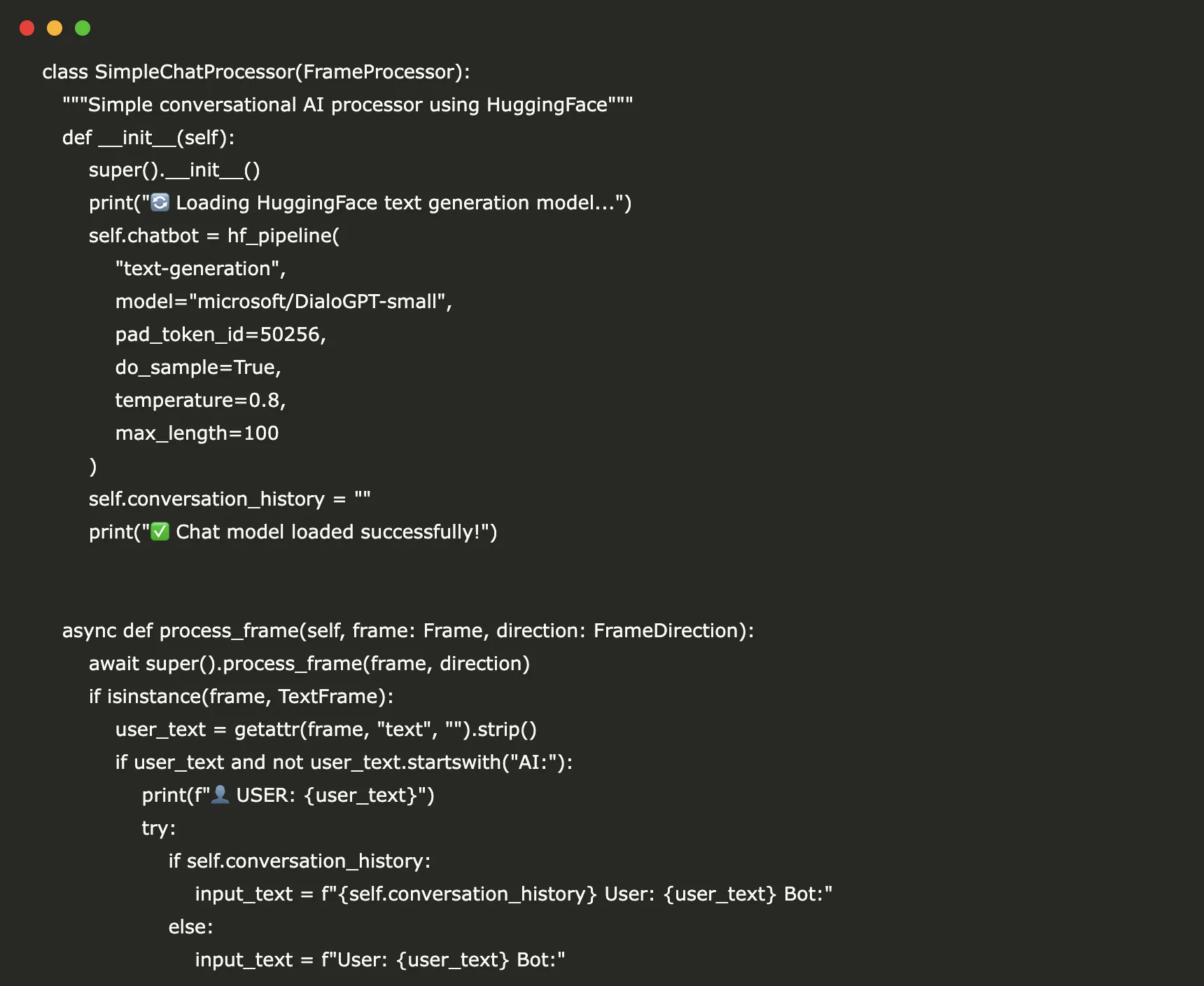

In this tutorial, we explore how we can build a fully functional conversational AI agent from scratch using the Pipecat framework. We walk through setting

In the rapidly evolving field of agentic AI and AI agents, staying informed is essential. Here’s a comprehensive, up-to-date list of the top blogs and

Artificial intelligence and machine learning workflows are notoriously complex, involving fast-changing code, heterogeneous dependencies, and the need for rigorously repeatable results. By approaching the problem

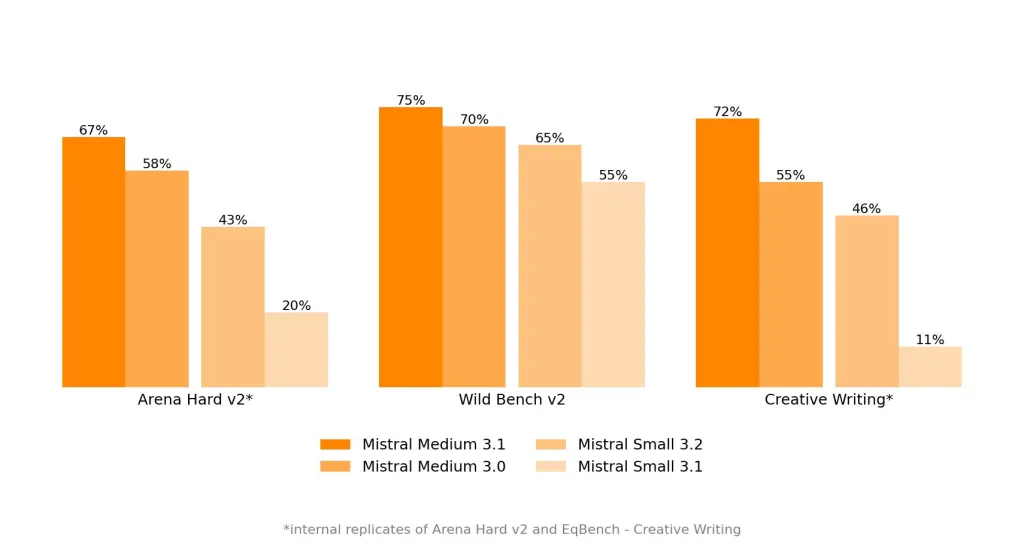

Mistral AI has introduced Mistral Medium 3.1, setting new standards in multimodal intelligence, enterprise readiness, and cost-efficiency for large language models (LLMs). Building on its