The Challenge of Fine-Tuning Large Transformer Models

Self-attention enables transformer models to capture long-range dependencies in text, which is crucial for comprehending complex language patterns.

Category: Updates

In this tutorial, we delve into building an advanced data analytics pipeline using Polars, a lightning-fast DataFrame library designed for optimal performance and scalability. Our

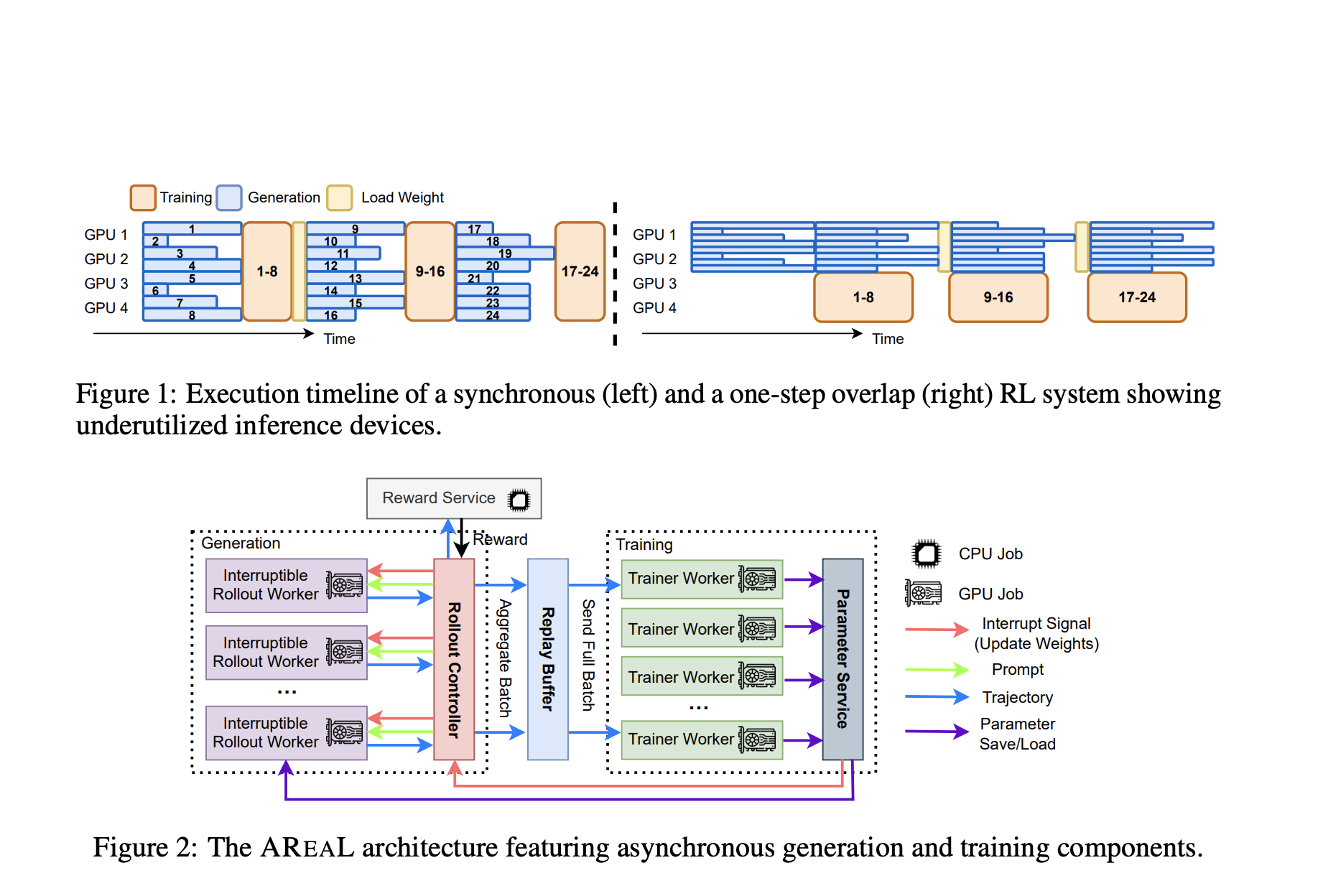

Introduction: The Need for Efficient RL in LRMs

Reinforcement Learning (RL) is increasingly used to enhance LLMs, especially for reasoning tasks. These models, known as

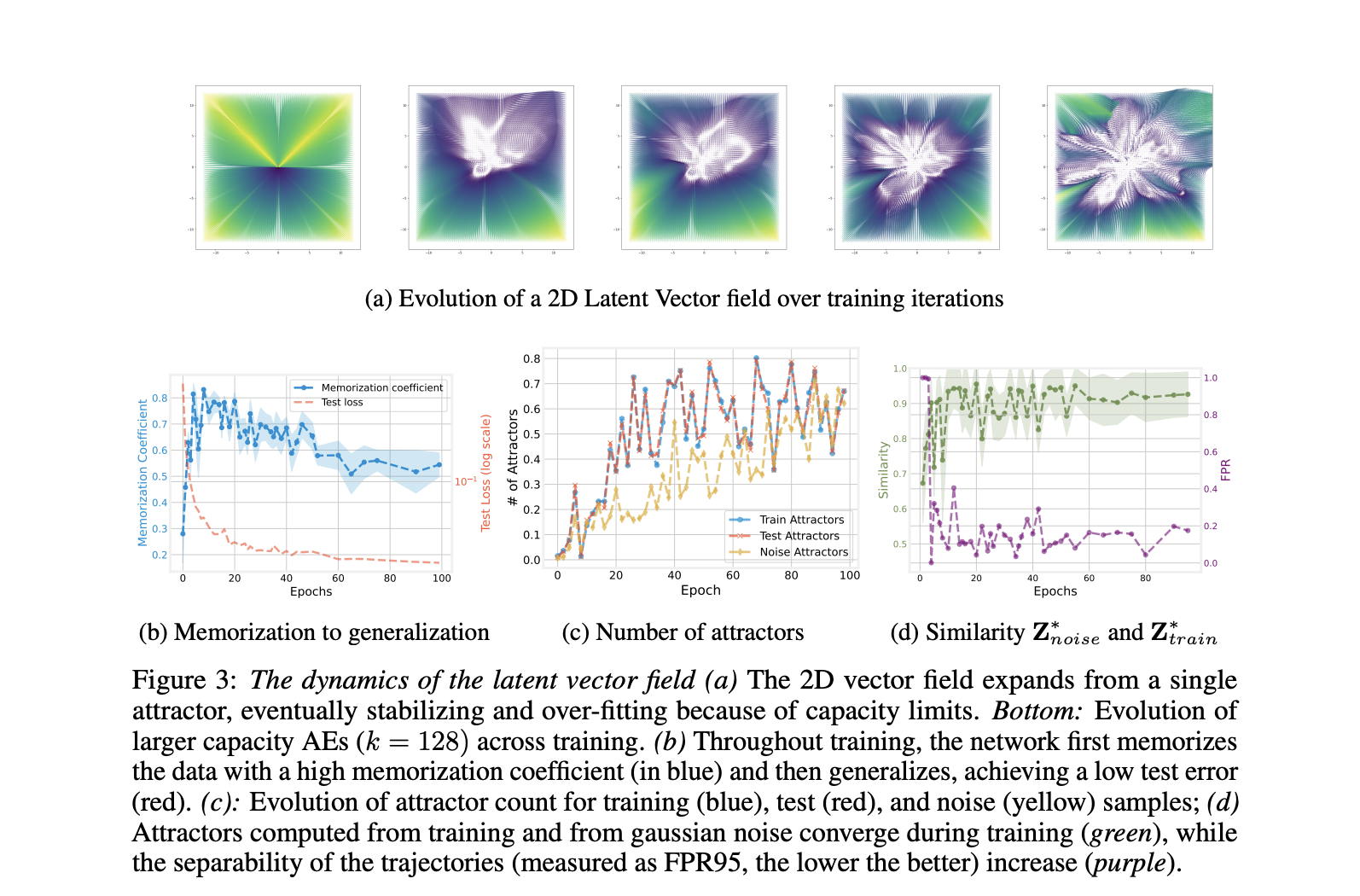

Autoencoders and the Latent Space

Neural networks are designed to learn compressed representations of high-dimensional data, and autoencoders (AEs) are a widely used example of such

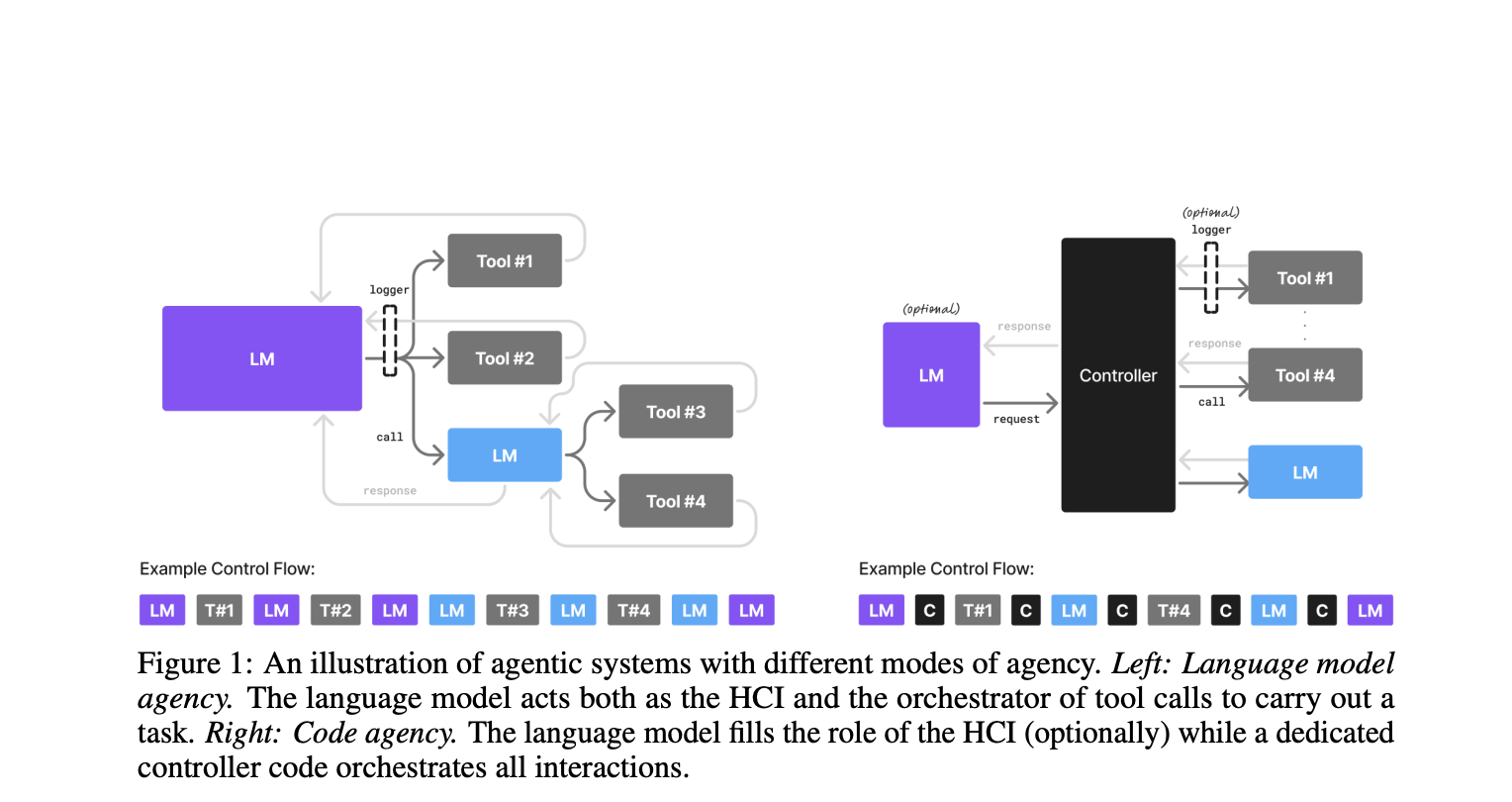

The Shift in Agentic AI System Needs

LLMs are widely admired for their human-like capabilities and conversational skills. However, with the rapid growth of agentic

In this tutorial, we walk you through building an enhanced web scraping tool that leverages BrightData’s powerful proxy network alongside Google’s Gemini API for intelligent

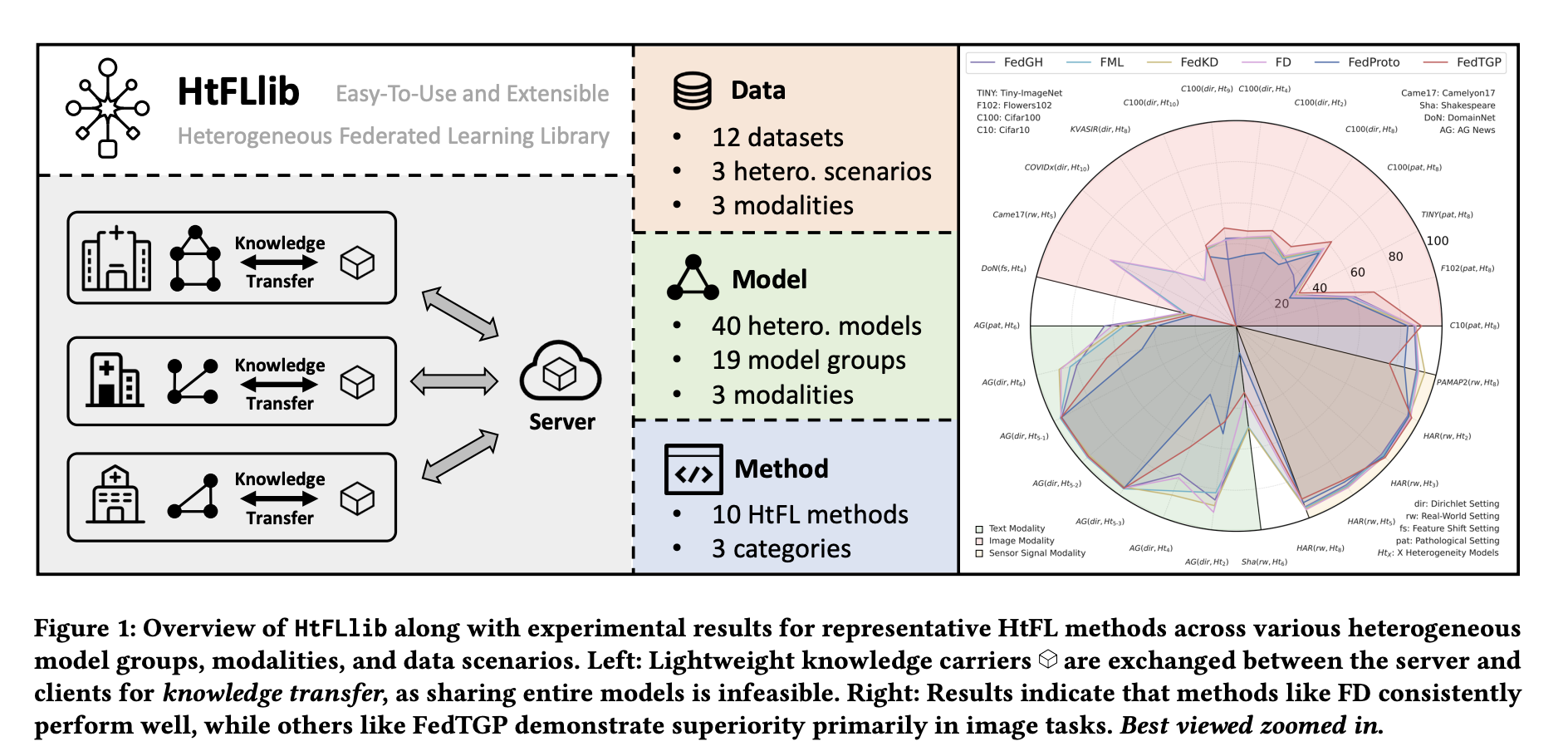

AI institutions develop heterogeneous models for specific tasks but face data scarcity challenges during training. Traditional Federated Learning (FL) supports only homogeneous model collaboration, which

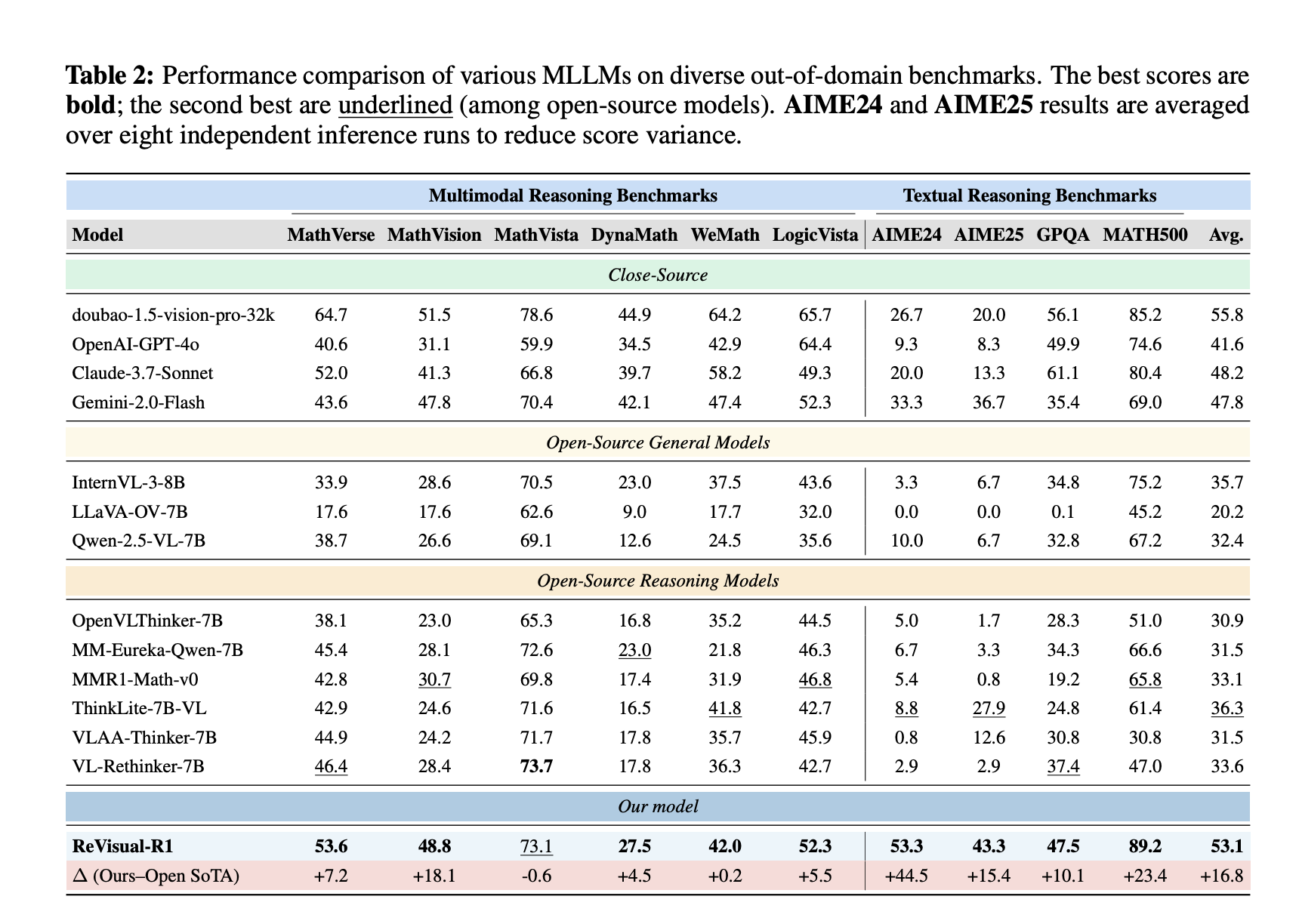

The Challenge of Multimodal Reasoning

Recent breakthroughs in text-based language models, such as DeepSeek-R1, have demonstrated that RL can aid in developing strong reasoning skills.

OpenAI has open-sourced a new multi-agent customer service demo on GitHub, showcasing how to build domain-specialized AI agents using its Agents SDK. This project—titled openai-cs-agents-demo—models

AI Girlfriend Chatbots and Language Learning: A New Tool for Practicing Conversations

AI technologies have transformed how we interact with the digital world, and AI